I was also puzzled by the keys, queries, and values in the attention mechanisms for a while. After searching on the Web and digesting relevant information, I have a clear picture about how the keys, queries, and values work and why they would work!

Let's see how they work, followed by why they work.

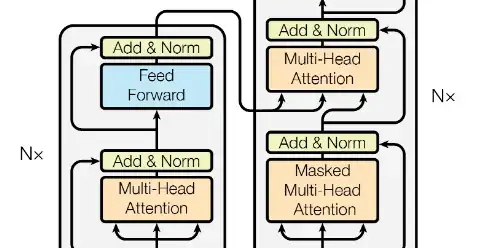

In a seq2seq model, we encode the input sequence to a context vector, and then feed this context vector to the decoder to yield expected good output.

However, if the input sequence is long, relying on only one context vector become less effective. We need all the information from the hidden states in the input sequence (encoder) for better decoding (the attention mechanism).

One way to utilize the input hidden states is shown below:

Image source: https://towardsdatascience.com/attn-illustrated-attention-5ec4ad276ee3

Image source: https://towardsdatascience.com/attn-illustrated-attention-5ec4ad276ee3

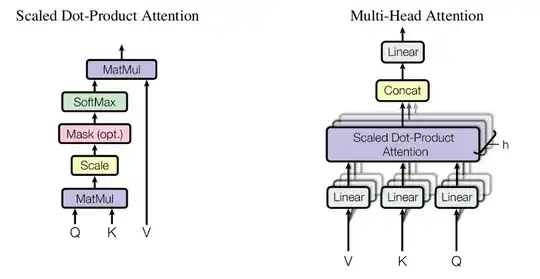

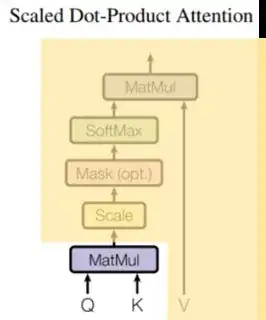

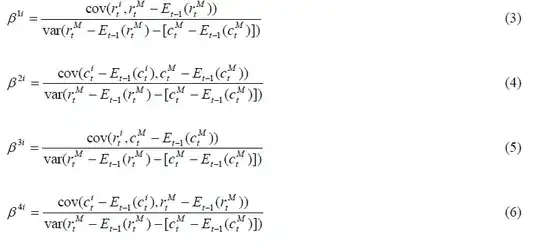

In other words, in this attention mechanism, the context vector is computed as a weighted sum of the values, where the weight assigned to each value is computed by a compatibility function of the query with the corresponding key (this is a slightly modified sentence from [Attention Is All You Need] https://arxiv.org/pdf/1706.03762.pdf).

Here, the query is from the decoder hidden state, the key and value are from the encoder hidden states (key and value are the same in this figure). The score is the compatibility between the query and key, which can be a dot product between the query and key (or other form of compatibility). The scores then go through the softmax function to yield a set of weights whose sum equals 1. Each weight multiplies its corresponding values to yield the context vector which utilizes all the input hidden states.

Note that if we manually set the weight of the last input to 1 and all its precedences to 0s, we reduce the attention mechanism to the original seq2seq context vector mechanism. That is, there is no attention to the earlier input encoder states.

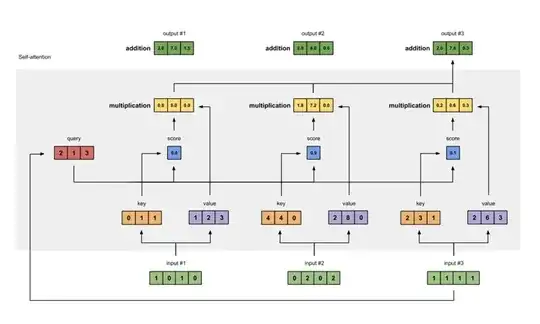

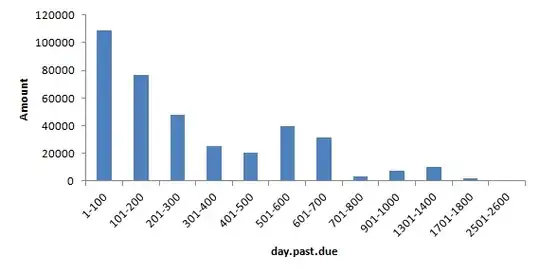

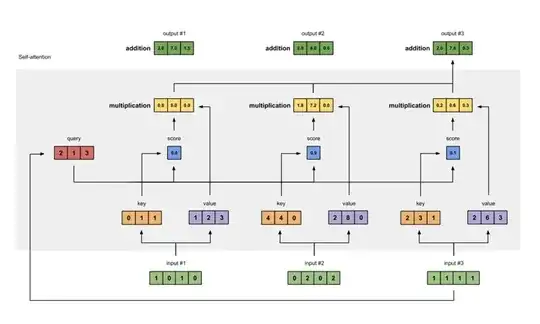

Now, let's consider the self-attention mechanism as shown in the figure below:

Image source: https://towardsdatascience.com/illustrated-self-attention-2d627e33b20a

Image source: https://towardsdatascience.com/illustrated-self-attention-2d627e33b20a

The difference from the above figure is that the queries, keys, and values are transformations of the corresponding input state vectors. The others remain the same.

Note that we could still use the original encoder state vectors as the queries, keys, and values. So, why we need the transformation? The transformation is simply a matrix multiplication like this:

Query = I x W(Q)

Key = I x W(K)

Value = I x W(V)

where I is the input (encoder) state vector, and W(Q), W(K), and W(V) are the corresponding matrices to transform the I vector into the Query, Key, Value vectors.

What are the benefits of this matrix multiplication (vector transformation)?

The obvious reason is that if we do not transform the input vectors, the dot product for computing the weight for each input's value will always yield a maximum weight score for the individual input token itself. This may not be the desired case, say, for the pronoun token that we need it to attend to its referent.

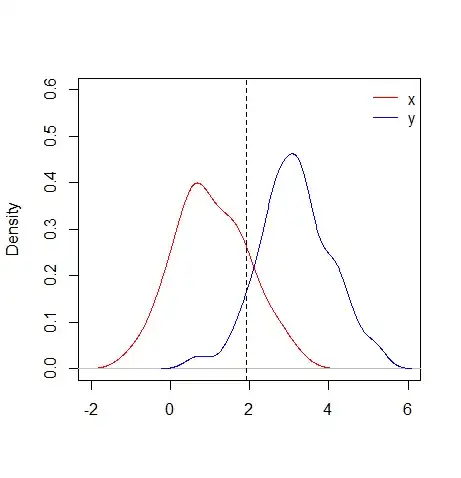

Another less obvious but important reason is that the transformation may yield better representations for Query, Key, and Value. Recall the effect of Singular Value Decomposition (SVD) like that in the following figure:

Image source: https://youtu.be/K38wVcdNuFc?t=10

By multiplying an input vector with a matrix V (from the SVD), we obtain a better representation for computing the compatibility between two vectors, if these two vectors are similar in the topic space as shown in the example in the figure.

And these matrices for transformation can be learned in a neural network!

In short, by multiplying the input vector with a matrix, we got:

increase of the possibility for each input token to attend to other tokens in the input sequence, instead of individual token itself.

possibly better (latent) representations of the input vector;

conversion of the input vector into a space with a desired dimension, say, from dimension 5 to 2, or from n to m, etc (which is practically useful);

Note the transformation matrix is learnable (without manual setting).

I hope this help you understand the queries, keys, and values in the (self-)attention mechanism of deep neural networks.