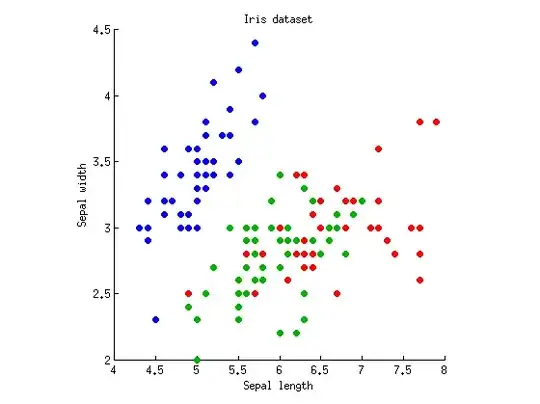

First, let's get into what skewed means versus uniform.

Here is an unskewed distribution that is not uniform. This is the standard normal bell curve.

plot(seq(-3,3,0.01),dnorm(seq(-3,3,0.01),0,1),type='l',xlab='',ylab='')

Here is a skewed distribution ($F_{5,5}$).

plot(seq(0,4,0.01),df(seq(0,4,0.01),5,5),type='l',xlab='',ylab='')

However, both distributions have values that they prefer. In the normal distribution, for instance, you would expect to get samples around 0 more often than samples around 2. Therefore, the distributions are not uniform. A uniform distribution would be something like how a die has a 1/6 chance of landing on each number.
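To put numbers on "prefers values around 0", here is a small sketch comparing the probability that a standard normal draw lands within half a unit of 0 against the probability that it lands within half a unit of 2. The half-unit window is my own arbitrary choice for illustration.

```r
# Probability that a standard normal value falls in (-0.5, 0.5)
near_0 <- pnorm(0.5) - pnorm(-0.5)

# Probability that a standard normal value falls in (1.5, 2.5)
near_2 <- pnorm(2.5) - pnorm(1.5)

print(near_0)  # roughly 0.38
print(near_2)  # roughly 0.06
```

Equal-width windows with unequal probabilities are exactly what "not uniform" means here.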

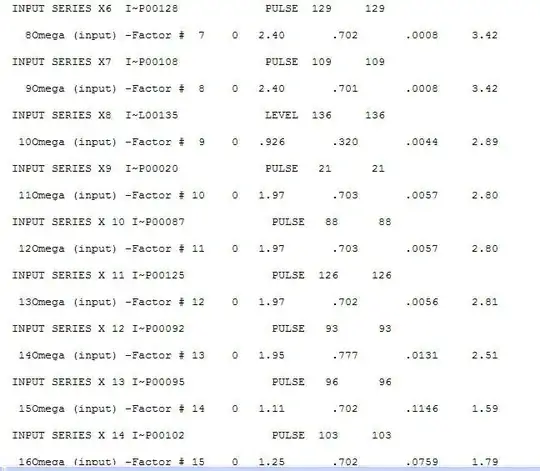

I see your problem as being akin to checking if a die is biased towards particular numbers. In your first example, each number between 1 and 10 is equally represented. You have a uniform distribution on $\{1,2,3,4,5,6,7,8,9,10\}$.

$$P(X = 1) = P(X=2) = \cdots = P(X=9) = P(X=10) = \frac{1}{10}$$

In your second example, you have some preference for 1 and 2 at the expense of 3.

$$P(X=1) = P(X=2) = \frac{4}{10}, \quad P(X=3) = \frac{2}{10}$$

The number of unique items has nothing to do with uniformity.
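If it helps to see those probabilities come out of data, here is a minimal sketch. The two vectors are hypothetical samples I made up to match the probabilities above; they are not your actual data.

```r
# Hypothetical samples, chosen only to reproduce the stated probabilities
first  <- 1:10                              # each value appears once
second <- c(1, 1, 1, 1, 2, 2, 2, 2, 3, 3)   # 1 and 2 four times each, 3 twice

# Relative frequency of each distinct value
p_first  <- prop.table(table(first))
p_second <- prop.table(table(second))

print(p_first)   # every entry is 0.1
print(p_second)  # 0.4, 0.4, 0.2
```

The first sample has ten unique values and the second has three, yet only the relative frequencies decide which one is uniform.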

What I think you want to do is test if your sample indicates a preference for particular numbers. If you roll a die 12 times and get $\{3,2,6,5,4,1,2,1,3,4,5,4\}$, you'd notice that you have a slight preference for 4 at the expense of 6. However, you'd probably call this just luck of the draw: if you did the experiment again, you'd be just as likely to find that 6 is preferred at the expense of some other number. The lack of uniformity is due to sampling variability (chance or luck of the draw, with nothing suggesting that the die lacks balance). Similarly, if you flip a coin four times and get HHTH, you probably won't think anything is fishy. That seems perfectly plausible for a fair coin.
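You can tabulate those 12 rolls in R to see the "slight preference" directly. This is just a counting sketch; the variable names are mine.

```r
rolls <- c(3, 2, 6, 5, 4, 1, 2, 1, 3, 4, 5, 4)

# Count each face; factor() keeps all six faces even if one never shows up
counts <- table(factor(rolls, levels = 1:6))
print(counts)  # 2 2 2 3 2 1

# A fair die would average 12 / 6 = 2 of each face
expected <- length(rolls) / 6
print(counts - expected)  # face 4 is one over, face 6 is one under
```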

However, what if you roll the die 12,000 or 12 billion times and still get a preference for 4 at the expense of 6, or you do billions of coin flips and find that heads is preferred 75% of the time? Then you'd start thinking that there is a lack of balance and that the lack of uniformity in your observations is not just due to random chance.
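A quick simulation can illustrate why sample size matters. This sketch rolls a simulated fair die 12 times and then 12,000 times and reports how far the observed proportions stray from 1/6. The seed and the helper name `face_props` are arbitrary choices of mine.

```r
set.seed(1)  # arbitrary seed, only so the run is reproducible

# Proportion of each face in n rolls of a simulated fair die
face_props <- function(n) {
  rolls <- sample(1:6, size = n, replace = TRUE)
  prop.table(table(factor(rolls, levels = 1:6)))
}

small <- face_props(12)
large <- face_props(12000)

# Largest deviation from 1/6 in each experiment
print(max(abs(small - 1/6)))
print(max(abs(large - 1/6)))
```

With a fair die, the deviations in the large experiment should typically be tiny, so a persistent large deviation at that sample size is hard to blame on chance.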

There is a statistical hypothesis test to quantify this. It's called Pearson's chi-squared test. The example on Wikipedia is pretty good. I'll summarize it here. It uses a die.

$$H_0: P(X=1) = \cdots = P(X=6) = \frac{1}{6}$$

This means that we are assuming equal probabilities of each face of the die and trying to find evidence suggesting that is false. This is called the null hypothesis.

Our alternative hypothesis is that $H_0$ is false, that some probability is not $\frac{1}{6}$ and the lack of uniformity in the observations is not due to chance alone.

We conduct an experiment of rolling the die 60 times. "The number of times it lands with 1, 2, 3, 4, 5, and 6 face up is 5, 8, 9, 8, 10, and 20, respectively."

For face 1, we would expect 10, but we got 5. This is a difference of 5. Then we square the difference to get 25. Then we divide by the expected number to get 2.5.

For face 2, we would expect 10, but we got 8. This is a difference of 2. Then we square the difference to get 4. Then we divide by the expected number to get 0.4.

Do the same for the remaining faces to get 0.1, 0.4, 0, and 10.
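The per-face arithmetic above can be done in one vectorized line. Here is a sketch using the counts from the Wikipedia example; the variable names are mine.

```r
observed <- c(5, 8, 9, 8, 10, 20)        # counts for faces 1 through 6
expected <- rep(sum(observed) / 6, 6)    # 60 rolls / 6 faces = 10 each

# Squared difference divided by the expected count, face by face
contrib <- (observed - expected)^2 / expected
print(contrib)  # 2.5 0.4 0.1 0.4 0.0 10.0
```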

Now add up all of the values: $2.5 + 0.4 + 0.1 + 0.4 + 0 + 10 = 13.4$. This is our test statistic. We test against a $\chi^2$ distribution with 5 degrees of freedom. We get five because there are six outcomes, and we subtract 1. Now we can get our p-value! The R command to do that is `pchisq(13.4, 5, lower.tail = FALSE)`. The result is about 0.02, meaning that if the die really is fair, there is only about a 2% chance of seeing this level of non-uniformity (or more) from random chance alone. It is common to reject the null hypothesis when the p-value is less than 0.05, so at the 0.05-level, we reject the null hypothesis in favor of the alternative. However, if we test at the 0.01-level, we lack sufficient evidence to say that the die is biased.
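Here is a sketch of that decision in R. Besides the p-value, it also pulls the critical values with `qchisq`, which is an equivalent way to make the same call: reject when the statistic exceeds the critical value.

```r
stat <- 13.4   # test statistic from the worked example
df   <- 6 - 1  # six faces minus one

# Upper-tail probability of a chi-squared(5) value at least this large
p_value <- pchisq(stat, df, lower.tail = FALSE)
print(p_value)  # about 0.02

# Critical values for the 0.05 and 0.01 levels
crit_05 <- qchisq(0.95, df)  # about 11.07
crit_01 <- qchisq(0.99, df)  # about 15.09

print(stat > crit_05)  # TRUE: reject at the 0.05 level
print(stat > crit_01)  # FALSE: do not reject at the 0.01 level
```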

Try this out for an experiment where you roll a die 180 times and get 1, 2, 3, 4, 5, and 6 in the amounts of 60, 15, 24, 24, 27, and 30, respectively. When I do this in R, I get a p-value of about $1.36 \times 10^{-7}$ (1.36090775991073e-07 is the printout).
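If you want to check your hand calculation, here is one way to wrap the steps in a small function. The name `uniform_gof` is something I made up for this sketch; it is not a built-in.

```r
# Hypothetical helper: chi-squared test of equal probabilities, done by hand
uniform_gof <- function(observed) {
  k <- length(observed)
  expected <- rep(sum(observed) / k, k)           # equal share per outcome
  stat <- sum((observed - expected)^2 / expected) # Pearson statistic
  df <- k - 1
  list(statistic = stat,
       df = df,
       p_value = pchisq(stat, df, lower.tail = FALSE))
}

res <- uniform_gof(c(60, 15, 24, 24, 27, 30))
print(res$statistic)  # 40.2
print(res$p_value)    # about 1.36e-07
```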

Now for the shortcut in R, for when you think you get the idea of this test and can do it by hand but don't want to.

V <- c(60, 15, 24, 24, 27, 30);chisq.test(V)

This creates a vector of the frequencies (V) and then tests that vector.
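If you want the individual pieces instead of the printed summary, the object returned by `chisq.test` can be inspected. In this sketch I pass `p` explicitly to spell out the null hypothesis; equal probabilities are already the default for a vector of counts, so the result is the same.

```r
V <- c(60, 15, 24, 24, 27, 30)

# Goodness-of-fit test against equal probabilities for the six faces
result <- chisq.test(V, p = rep(1/6, 6))

print(result$statistic)  # X-squared = 40.2
print(result$parameter)  # df = 5
print(result$p.value)    # about 1.36e-07
print(result$expected)   # 30 for every face
```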