Let

- $(E,\mathcal E,\lambda),(E',\mathcal E',\lambda')$ be measure spaces

- $k\in\mathbb N$

- $p,q_1,\ldots,q_k:E\to(0,\infty)$ be probability densities on $(E,\mathcal E,\lambda)$

- $w_1,\ldots,w_k:E\to[0,1]$ be $\mathcal E$-measurable with $\sum_{i=1}^kw_i=1$

- $\varphi_1,\ldots,\varphi_k:E'\to E$ be $(\mathcal E',\mathcal E)$-measurable with $(\varphi_i)_\ast\lambda'=q_i\lambda$ for all $i\in\{1,\ldots,k\}$ (the left-hand side denotes the pushforward measure and the right-hand side the measure with density $q_i$ with respect to $\lambda$)

Suppose we would like to run the Metropolis-Hastings algorithm with target measure $p\lambda$, but it is very hard to find good proposal kernels on $(E,\mathcal E)$. On the other hand, we have easy-to-implement transformations $\varphi_1,\ldots,\varphi_k$ as above, and each $q_i$ is locally a good approximation of $p$.

Now the idea is the following: Let $\zeta$ denote the counting measure on $(\{1,\ldots,k\},2^{\{1,\:\ldots\:,\:k\}})$ and define $$\mu:=\left(w\frac pq\circ\varphi\right)\zeta\otimes\lambda',$$ i.e. $\mu$ is the measure on $\{1,\ldots,k\}\times E'$ with density $(i,x')\mapsto\left(w_i\frac p{q_i}\right)(\varphi_i(x'))$ with respect to $\zeta\otimes\lambda'$. We could run the Metropolis-Hastings algorithm with target measure $\mu$ instead! The choice of the $w_i$, together with the additional index component $i$, should ensure that the algorithm prefers to move to states $(i,x')$ where $q_i$ is a good approximation of $p$.
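Why this is legitimate can be sketched in one computation. Write $\psi:\{1,\ldots,k\}\times E'\to E$, $\psi(i,x'):=\varphi_i(x')$ (the name $\psi$ is introduced here only for this derivation). For every $B\in\mathcal E$,
$$\psi_\ast\mu(B)=\sum_{i=1}^k\int_{E'}1_B(\varphi_i(x'))\left(w_i\frac p{q_i}\right)(\varphi_i(x'))\,\lambda'({\rm d}x')=\sum_{i=1}^k\int_Bw_i\frac p{q_i}\,q_i\,{\rm d}\lambda=\int_B\sum_{i=1}^kw_i\,p\,{\rm d}\lambda=\int_Bp\,{\rm d}\lambda.$$
The second equality is the transformation formula for $(\varphi_i)_\ast\lambda'=q_i\lambda$, the last one uses $\sum_{i=1}^kw_i=1$. Taking $B=E$ shows that $\mu$ is a probability measure, and in general $\psi_\ast\mu=p\lambda$. So if $(I_n,X'_n)_n$ is a Markov chain with stationary distribution $\mu$, then (under the usual ergodicity assumptions) averages of $f(\varphi_{I_n}(X'_n))$ estimate $\int fp\,{\rm d}\lambda$.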

But how should we choose the weights $w_i$? Is there some "optimal" choice like the balance heuristic in multiple importance sampling?

EDIT: The setting of my actual application is too complicated to describe here, so I'll give a toy example: Let $f_{\mu,\:\sigma^2}$ and $\Phi_{\mu,\:\sigma^2}$ denote the density and cumulative distribution function of $\mathcal N_{\mu,\:\sigma^2}$. Take $k=2$, $\mu_i\in\mathbb R$, $\sigma_i,\varsigma_i>0$, $$p:=c_1f_{\mu_1,\:\sigma_1^2}+(1-c_1)f_{\mu_2,\:\sigma_2^2}$$ for some $c_1\in(0,1)$, $q_i:=f_{\mu_i,\:\varsigma_i^2}$ and let $\varphi_i$ be the quantile function $\Phi_{\mu_i,\:\varsigma_i^2}^{-1}$ (so $E=\mathbb R$ with the Lebesgue measure $\lambda$, and $E'=(0,1)$ with the Lebesgue measure $\lambda'$, which gives $(\varphi_i)_\ast\lambda'=q_i\lambda$).

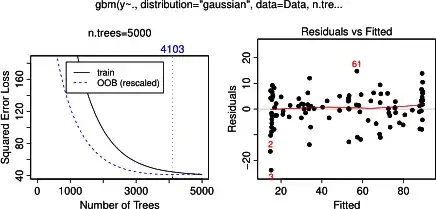

Here's a examp with $\mu_1=-2$, $\mu_2=2$, $\sigma_1=\sigma_2=1$, $\varsigma_1=2$ and $\varsigma_2=5$:

$p$ is the black, $q_1$ the red and $q_2$ the green curve. Here we could, for example, take $w_1=1_{(-\infty,\:0)}$ and $w_2=1_{[0,\:\infty)}$.
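To make the toy example concrete, here is a minimal Python sketch (standard library only, via `statistics.NormalDist`) of a Metropolis-Hastings chain targeting $\mu$ on $\{1,2\}\times(0,1)$. It assumes $\lambda'$ is the Lebesgue measure on $(0,1)$, $c_1=1/2$ (the question leaves $c_1$ open) and the indicator weights above. The proposal (with probability $1/2$ swap the index while keeping $u$, otherwise take a Gaussian random-walk step in $u$ of hypothetical size `step=0.1`) is an illustrative assumption, not part of the question, and the function names are hypothetical. Indices are 0-based in the code.

```python
import random
from statistics import NormalDist

# Toy example: p = c_1 N(-2, 1) + (1 - c_1) N(2, 1), q_1 = N(-2, 2^2), q_2 = N(2, 5^2).
C1 = 0.5  # assumption: c_1 is not specified in the question
P_PARTS = [NormalDist(-2.0, 1.0), NormalDist(2.0, 1.0)]  # NormalDist takes (mean, std dev)
Q = [NormalDist(-2.0, 2.0), NormalDist(2.0, 5.0)]


def p(x):
    """Target density p on E = R."""
    return C1 * P_PARTS[0].pdf(x) + (1.0 - C1) * P_PARTS[1].pdf(x)


def w(i, x):
    """Indicator weights w_1 = 1_(-inf,0), w_2 = 1_[0,inf); i is 0-based."""
    return 1.0 if (x < 0.0) == (i == 0) else 0.0


def phi(i, u):
    """Quantile function of q_i; pushes Lebesgue measure on (0, 1) forward to q_i."""
    return Q[i].inv_cdf(u)


def target(i, u):
    """Density of mu w.r.t. counting measure x Lebesgue measure on (0, 1)."""
    if not 0.0 < u < 1.0:
        return 0.0  # outside E' = (0, 1)
    x = phi(i, u)
    if w(i, x) == 0.0:
        return 0.0
    return w(i, x) * p(x) / Q[i].pdf(x)


def sample(n_steps, step=0.1, seed=0):
    """Metropolis-Hastings on {0, 1} x (0, 1); returns the pairs (i, phi_i(u))."""
    rng = random.Random(seed)
    i, u = 0, 0.5  # phi(0, 0.5) = -2, a state with positive density
    dens = target(i, u)
    out = []
    for _ in range(n_steps):
        if rng.random() < 0.5:
            j, v = 1 - i, u  # swap move: change the index, keep u
        else:
            j, v = i, u + step * rng.gauss(0.0, 1.0)  # random-walk move in u
        new_dens = target(j, v)
        # Both moves are symmetric, so accept with probability min(1, new_dens / dens).
        if rng.random() * dens < new_dens:
            i, u, dens = j, v, new_dens
        out.append((i, phi(i, u)))
    return out


chain = sample(50_000)
xs = [x for _, x in chain]
print("mean:", sum(xs) / len(xs))
print("fraction of x < 0:", sum(x < 0.0 for x in xs) / len(xs))
```

Because the swap move keeps $u$ fixed, the state $(1,1/2)$ with $\varphi_1(1/2)=-2$ can jump directly to $(2,1/2)$ with $\varphi_2(1/2)=2$, i.e. between the two modes of $p$ in a single step. For $c_1=1/2$ the mean of $p$ is $0$ and half of its mass lies on $(-\infty,0)$, so the printed values should be close to $0$ and $1/2$ up to Monte Carlo error.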