I am currently reading the paper "Learning from Logged Implicit Exploration Data" (https://arxiv.org/pdf/1003.0120.pdf). I believe my questions can be answered without reading the whole paper, so I would greatly appreciate any help.

The paper considers a contextual bandit problem and addresses offline evaluation of a new policy when the logging policy is unknown. Because the logging policy could be deterministic, we cannot simply apply the inverse propensity score estimator.

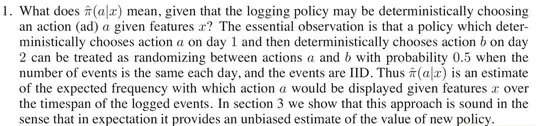

I have two questions about this part of the paper.

First, why can the situation described there be treated as randomizing between actions $a$ and $b$? I understand that the number of events is assumed to be the same each day and that the events are IID. Does that mean it counts as randomizing because the action called $a$ could just as well have been called $b$ and used on day 2 instead of day 1? Every individual decision is deterministic, so I don't understand why this can be viewed as randomizing.
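To make the first question concrete, here is a minimal Python sketch of how I picture the setup. The three-valued context, the uniform context distribution, and the 10,000 events per day are my own assumptions for illustration and are not taken from the paper. Each day's policy is deterministic, but because the contexts are IID across days, the pooled log shows each action for roughly half of the events with any given $x$.

```python
import random
from collections import Counter

def build_log(events_per_day=10_000, seed=0):
    """Day 1 always shows action 'a', day 2 always shows action 'b'.

    Contexts are drawn IID from the same (assumed) distribution on both days.
    """
    rng = random.Random(seed)
    log = []
    for day, action in enumerate(["a", "b"], start=1):
        for _ in range(events_per_day):
            x = rng.choice([0, 1, 2])  # hypothetical discrete context
            log.append((day, x, action))
    return log

def action_frequencies_by_context(log):
    """Fraction of events with context x in which each action was shown."""
    pair_counts = Counter((x, action) for _, x, action in log)
    context_counts = Counter(x for _, x, _ in log)
    return {(x, a): n / context_counts[x] for (x, a), n in pair_counts.items()}

log = build_log()
freqs = action_frequencies_by_context(log)
for key in sorted(freqs):
    print(key, round(freqs[key], 3))
```

If the day label is dropped from the log, this looks the same to me as a log produced by flipping a fair coin between $a$ and $b$ for every event. Is that the sense in which the paper calls it randomizing?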

Second, is the definition of $\hat{\pi}(a|x)$ simply (the number of times action $a$ was displayed given the feature $x$) / (the total number of actions displayed)?
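For reference, this is the count-based estimate I have in mind, written as a small Python sketch. It assumes a discrete feature $x$ and normalizes by the number of events with that same $x$. Both choices are my assumptions, and I am not certain this matches how the paper defines or learns $\hat{\pi}$. The function name `estimate_propensity` and the toy log are hypothetical.

```python
from collections import Counter

def estimate_propensity(log):
    """Empirical frequency estimate: count(x, a) / count(x).

    `log` is an iterable of (x, a) pairs with hashable x and a.
    """
    log = list(log)  # allow generators, since we iterate twice
    pair_counts = Counter(log)
    context_counts = Counter(x for x, _ in log)
    return {(x, a): n / context_counts[x] for (x, a), n in pair_counts.items()}

# Toy log: feature x1 was seen three times (a, a, b), feature x2 once (b).
toy_log = [("x1", "a"), ("x1", "a"), ("x1", "b"), ("x2", "b")]
pi_hat = estimate_propensity(toy_log)
print(pi_hat)
```

Pairs that never appear in the log are simply absent from the returned dictionary, so under this sketch they would correspond to an estimate of zero.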

Thank you!