Background

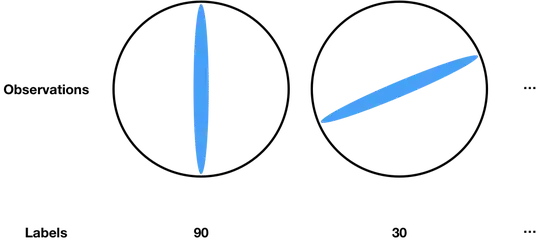

I am working with a dataset in which compass-like images are labeled with their angle from the horizontal line ($0$ degrees).

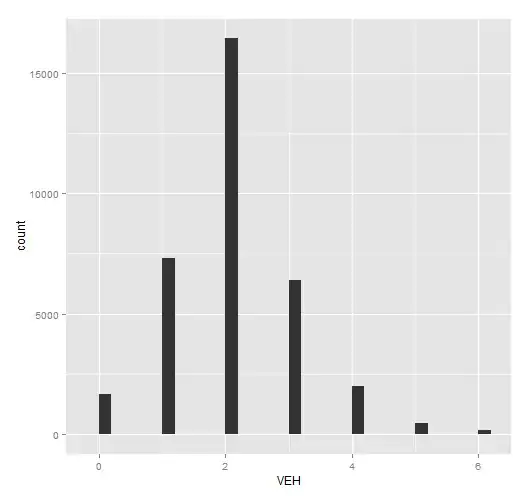

I am trying to build a CNN model that predicts the correct label for each input observation. The following is a quick description of the training data (with labels).

Note that the labels encode the angle of the stick from the horizontal as a real number in the range $0 \sim 179$ degrees.

Since shifting an angle by $\pm 180 n$ for $n=1,2,\cdots$ leaves its meaning exactly the same, the range $0 \sim 179$ was chosen to give a one-to-one correspondence between picture and angle. For example, the first observation above could have been labeled as $-90, 90, 270, \cdots$, but to avoid redundancy the range $0 \sim 179$ was used.
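To make the labeling scheme concrete, here is a minimal sketch of mapping any equivalent angle back into the $0 \sim 179$ range. The helper name `wrap_to_half_turn` is hypothetical (it is not part of my actual pipeline) and the sketch assumes angles are given in degrees.

```python
import numpy as np

def wrap_to_half_turn(angle_deg):
    """Map angles in degrees to the equivalent value in [0, 180)."""
    # np.mod returns a result with the sign of the divisor,
    # so negative angles also land in [0, 180).
    return np.mod(angle_deg, 180.0)

# The redundant labels from the example above all collapse to one value.
equivalent_labels = np.array([-90.0, 90.0, 270.0])
print(wrap_to_half_turn(equivalent_labels))
```

All three labels map to the same representative, which is the one-to-one correspondence described above.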

Try 1

I have built a vanilla CNN model using Keras, with mean_squared_error as the loss.

But I think this loss is very inappropriate, since it favors angles near $90$ and penalizes angles near $1$ or $179$. To support my argument, let me show an example:

For angle $90$, predictions of $88$ and $92$ are both "close tries".

For angle $179$, a prediction of $177$ is considered close, whereas $181$ is considered absurd, even though both are only $2$ degrees away from the truth.

Therefore, I think this scheme should be avoided.
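The asymmetry can be checked numerically. The sketch below is only an illustration: `squared_error` and `line_distance` are hypothetical helper names, and it assumes the out-of-range guess $181$ is written as its in-range equivalent $1$.

```python
import numpy as np

def squared_error(y_true, y_pred):
    """Plain squared error, as used inside mean_squared_error."""
    return (y_true - y_pred) ** 2

def line_distance(y_true, y_pred):
    """Smallest angular gap in degrees between two undirected lines."""
    d = np.mod(y_true - y_pred, 180.0)
    return np.minimum(d, 180.0 - d)

# Truth 90, guess 92: both measures agree the guess is close.
print(squared_error(90.0, 92.0), line_distance(90.0, 92.0))

# Truth 179, guess 1 (the same stick as 181): the actual gap is 2 degrees,
# but the squared error treats it as a 178 degree miss.
print(squared_error(179.0, 1.0), line_distance(179.0, 1.0))
```

Both guesses are off by the same $2$ degrees in terms of the picture, yet the squared error differs by several orders of magnitude.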

Try 2

I have defined the following sine loss

$$ l(y_{true},y_{pred}) = \sin\left((y_{true}-y_{pred}) \times \frac{\pi}{180}\right) $$

or, in Python code using Keras, I have defined the following custom loss, which squares this quantity and sums it over the batch:

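    import numpy as np
    from keras import backend as K

    def sine_loss(y_true, y_pred):
        # Sine of the angular error, converted from degrees to radians
        res = K.sin((y_true - y_pred) * np.pi / 180.)
        # Sum of squared sines over the batch
        return K.sum(res * res)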
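As a sanity check on this loss, here is a NumPy re-implementation (the name `sine_loss_np` is hypothetical, used only so the check runs without Keras). It shows that the squared sine is unchanged when a prediction is shifted by any multiple of $180$, so the loss itself does nothing to keep predictions inside $0 \sim 179$.

```python
import numpy as np

def sine_loss_np(y_true, y_pred):
    """NumPy version of the sum-of-squared-sines loss, angles in degrees."""
    res = np.sin((y_true - y_pred) * np.pi / 180.0)
    return np.sum(res * res)

y_true = np.array([179.0, 90.0])

in_range = sine_loss_np(y_true, np.array([177.0, 92.0]))
across_boundary = sine_loss_np(y_true, np.array([181.0, 88.0]))
shifted_by_180 = sine_loss_np(y_true, np.array([357.0, -88.0]))

# All three values agree up to floating-point rounding.
print(in_range, across_boundary, shifted_by_180)
```

So $177$ and $181$ are now treated symmetrically around $179$, but a prediction of $357$ or $-88$ is scored exactly like its in-range equivalent.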

I have trained the model using the above loss and cross-validated it using 180 angle samples. The result is as follows, where the x axis is the ground-truth angle and the y axis is the predicted angle.

It was frustrating to see the range of the predicted values. I tried to convert them into the $0\sim 179$ space using the angle $\pm 180 n$ scheme above, but the result was still massive white noise.

What loss should I use in this situation?