If you suspect a nonlinear relationship between your continuous predictor and the log-odds of y-class membership, you need to model that predictor more flexibly than with a single linear term.

If your subject-matter knowledge suggests a theoretical form for that nonlinear relationship, you could use that general form of the relationship to fit a logistic model.

If you don't have any information based on subject-matter knowledge, then regression splines provide a standard approach. This page shows how to use regression splines for linear regression in Python; although I don't use Python, I suspect that the approach will carry over to logistic regression. Restricted cubic splines (restricted to having linear tails, to prevent overfitting at the extremes) are a good choice. They keep each polynomial piece at low degree, while the number and locations of the knots let you control how much nonlinearity the fit can capture.

See this page for some discussion in the context of logistic regression. The coefficients for the spline terms would be chosen by maximum likelihood, just as the single coefficient for a single-predictor logistic regression model would be. You can test the complexity of the relationship by comparing models with increasing numbers of knots, which serve as anchors in the fitting; a likelihood-ratio test against the linear model or a comparison of AIC values are common ways to do that.
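
As a rough sketch of that comparison in Python, assuming `statsmodels`, `patsy`, and `scipy` are installed: the data below are simulated purely for illustration, and the names `data`, `linear_fit`, and `spline_fits` are hypothetical. patsy's `cr()` builds a natural (restricted) cubic spline basis with knots at quantiles of the predictor by default. That basis already spans the constant term, so the formula drops the separate intercept with `0 +`.

```python
import numpy as np
import pandas as pd
import statsmodels.formula.api as smf
from scipy.stats import chi2

# Simulated data (an assumption for illustration only): the true log-odds
# are a sinusoidal, hence nonlinear, function of the predictor x.
rng = np.random.default_rng(0)
n = 2000
x = rng.uniform(0, 10, n)
true_logit = 2 * np.sin(x / 1.5)
y = rng.binomial(1, 1 / (1 + np.exp(-true_logit)))
data = pd.DataFrame({"x": x, "y": y})

# Baseline: log-odds linear in x.
linear_fit = smf.logit("y ~ x", data=data).fit(disp=0)

# Natural cubic spline fits with increasing flexibility. With df=k and no
# intercept, cr() uses k knots and the model has k coefficients in total.
spline_fits = {}
for k in (3, 4, 5, 6):
    spline_fits[k] = smf.logit(f"y ~ 0 + cr(x, df={k})", data=data).fit(disp=0)

# A natural cubic spline contains the straight line as a special case, so
# each spline model can be compared with the linear one by a likelihood-ratio
# test; the spline models themselves are compared here by AIC.
print(f"linear: AIC = {linear_fit.aic:.1f}")
for k, fit in spline_fits.items():
    lr_stat = 2 * (fit.llf - linear_fit.llf)
    p_value = chi2.sf(lr_stat, df=k - 2)
    print(f"df={k}: AIC = {fit.aic:.1f}, LR vs linear = {lr_stat:.1f}, p = {p_value:.3g}")
```

Spline models with different default knot locations are not nested in one another, which is why the sketch uses AIC rather than a likelihood-ratio test to compare them with each other.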

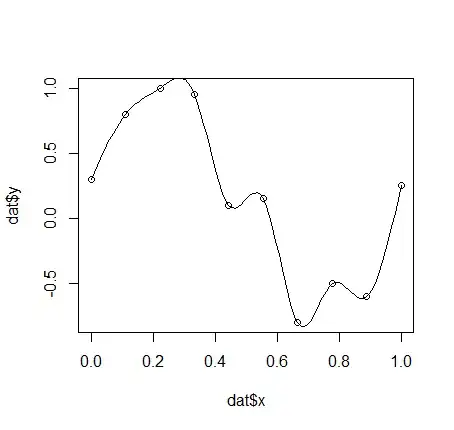

Once you have found a useful spline fit, you just plot the predicted log-odds of y-class membership based on the spline function against the values of the continuous predictor to get your graph.
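
A minimal sketch of that plot, again assuming `statsmodels`, `patsy`, and `matplotlib`, with simulated data and a 5-df natural cubic spline chosen only for illustration (the output file name is hypothetical):

```python
import numpy as np
import pandas as pd
import statsmodels.formula.api as smf
import matplotlib.pyplot as plt

# Simulated data (illustrative assumption): nonlinear true log-odds.
rng = np.random.default_rng(1)
x = rng.uniform(0, 10, 2000)
y = rng.binomial(1, 1 / (1 + np.exp(-2 * np.sin(x / 1.5))))
data = pd.DataFrame({"x": x, "y": y})

# Logistic regression on a natural cubic spline basis in x.
fit = smf.logit("y ~ 0 + cr(x, df=5)", data=data).fit(disp=0)

# Predict over a grid spanning the observed range of the predictor.
grid = pd.DataFrame({"x": np.linspace(data["x"].min(), data["x"].max(), 200)})
prob = fit.predict(grid)             # predicted probabilities
log_odds = np.log(prob / (1 - prob))  # convert to the log-odds scale

fig, ax = plt.subplots()
ax.plot(grid["x"], log_odds)
ax.set_xlabel("continuous predictor x")
ax.set_ylabel("predicted log-odds of y = 1")
fig.savefig("spline_log_odds.png", dpi=150)
```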

Added in response to a comment on the original question:

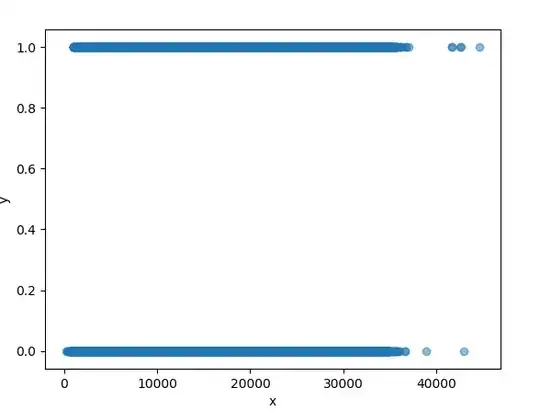

Grouping observations into bins and then plotting the observed probabilities or log-odds against the midpoints of the predictor values in the bins is one way you could proceed. The graph that you get, however, might depend on just where you set the bin limits (as with any histogram-type approach), and it won't take advantage of the continuous nature of the predictor. If you want to know "what the relationship of the data is" then find a good continuous fit to the data.
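
To see the dependence on bin limits concretely, here is a small sketch using only `numpy` and `pandas`. The data are simulated, and the helper `binned_log_odds` and both sets of bin edges are made up for illustration. The same observations are summarized twice, with bins of equal width but shifted limits.

```python
import numpy as np
import pandas as pd

# Simulated data (illustrative assumption): nonlinear true log-odds.
rng = np.random.default_rng(2)
x = rng.uniform(0, 10, 500)
y = rng.binomial(1, 1 / (1 + np.exp(-2 * np.sin(x / 1.5))))
data = pd.DataFrame({"x": x, "y": y})

def binned_log_odds(data, edges):
    """Empirical log-odds of y = 1 within bins of x defined by `edges`."""
    bins = pd.cut(data["x"], bins=edges, include_lowest=True)
    out = data.groupby(bins, observed=True)["y"].agg(["mean", "count"])
    p = out["mean"]
    # A bin containing only 0s or only 1s yields an infinite log-odds.
    with np.errstate(divide="ignore"):
        out["log_odds"] = np.log(p / (1 - p))
    out["midpoint"] = [interval.mid for interval in out.index]
    return out

# Same bin width (2 units), but the limits are shifted by 1 unit.
bins_a = binned_log_odds(data, np.linspace(0, 10, 6))   # 0, 2, ..., 10
bins_b = binned_log_odds(data, np.arange(-1, 12, 2))    # -1, 1, ..., 11

print(bins_a[["midpoint", "count", "log_odds"]])
print(bins_b[["midpoint", "count", "log_odds"]])
```

Plotting `log_odds` against `midpoint` for each table gives two different pictures of the same data, and the end bins of the shifted version are only half covered by observations.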