I am analysing server data and I have a scenario where I need to get the % by which Y changes for a unit change in X:

EDIT: I am fitting a linear regression in Python (and related variants such as Lasso); the ultimate aim is to find feature importances.

My Y is a continuous variable. All my Xs are standardized (meaning: (x - mean(x)) / std.dev(x)).
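For reference, here is a minimal sketch of that standardization using only the Python standard library. The name `standardize` and the feature values are hypothetical. It returns the mean and std.dev along with the z-scores, because std.dev(X) is needed later to convert coefficients back to X's original units. It uses the population std.dev (`pstdev`), which is what scikit-learn's `StandardScaler` uses.

```python
from statistics import fmean, pstdev

def standardize(values):
    """Return (z-scores, mean, sd) where z = (x - mean(x)) / std.dev(x).

    Uses the population std.dev (pstdev); statistics.stdev would give
    the sample (n - 1) version instead.
    """
    mean = fmean(values)
    sd = pstdev(values)
    return [(v - mean) / sd for v in values], mean, sd

# Hypothetical raw feature, e.g. % of memory in use
memory_pct = [20.0, 35.0, 50.0, 65.0, 80.0]
z, mean, sd = standardize(memory_pct)
print(mean, sd, z)
```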

Case 1:

ln(y) = a + b (Standardized X)

When X increases by 1 standard deviation, Y increases by approximately b*100 %, or exactly [exp(b) - 1]*100 %.
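To see how far apart the two expressions can be, here is a small sketch that compares the exact log-model change, [exp(b) - 1]*100, with the first-order approximation b*100 for a few illustrative (hypothetical) values of b.

```python
import math

def pct_change_exact(b):
    """Exact % change in Y for a +1 change in the regressor when ln(y) = a + b*z."""
    return (math.exp(b) - 1.0) * 100.0

def pct_change_approx(b):
    """First-order approximation b*100; only close when |b| is small."""
    return b * 100.0

# Illustrative coefficients on standardized X (not from real data)
for b in (0.01, 0.05, 0.2, 0.5):
    print(f"b={b:<5} exact={pct_change_exact(b):7.3f}%  approx={pct_change_approx(b):6.2f}%")
```

For b = 0.01 the two agree to within about 0.001 percentage points. For b = 0.5 the approximation gives 50 %, while the exact change is about 64.9 %.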

So when X increases by 1 unit, does Y increase by b*100/std.dev(X) %, or by [exp(b) - 1]*100/std.dev(X) %?

Or should I un-standardize the coefficient and take it as:

% change in Y for a 1-unit change in X = [exp(b / std.dev(X)) - 1]*100 ?
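The un-standardizing step is plain algebra. Substituting z = (x - mean(x)) / std.dev(x) into ln(y) = a + b*z gives ln(y) = [a - b*mean(x)/std.dev(x)] + [b/std.dev(x)]*x. The mean only shifts the intercept, and the per-unit slope is b/std.dev(X). The sketch below checks this numerically on hypothetical, noise-free data (ln(y) = 0.3 + 0.1*x) with a hand-written one-variable OLS (`ols_slope_intercept` is a helper defined here, not a library function). It also shows that dividing the per-SD percentage by std.dev(X) gives a different number.

```python
import math
from statistics import fmean, pstdev

def ols_slope_intercept(x, y):
    """Simple one-regressor OLS: slope = cov(x, y) / var(x)."""
    mx, my = fmean(x), fmean(y)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    sxx = sum((xi - mx) ** 2 for xi in x)
    slope = sxy / sxx
    return my - slope * mx, slope

# Hypothetical, noise-free data: ln(y) = 0.3 + 0.1 * x
x = [float(v) for v in range(1, 21)]
y = [math.exp(0.3 + 0.1 * xi) for xi in x]
ln_y = [math.log(v) for v in y]

mean_x, sd_x = fmean(x), pstdev(x)
z = [(xi - mean_x) / sd_x for xi in x]

_, b_std = ols_slope_intercept(z, ln_y)  # coefficient on standardized X
_, b_raw = ols_slope_intercept(x, ln_y)  # coefficient on raw X

# Un-standardizing: b_raw equals b_std / sd_x
b_unstd = b_std / sd_x

# Exact % change in Y for a 1-unit increase in X under the log model
pct_per_unit = (math.exp(b_unstd) - 1.0) * 100.0

# Scaling the per-SD % change linearly by 1/sd_x; the log model is not
# linear in %, so this overstates the per-unit change here (b > 0)
pct_per_unit_scaled = (math.exp(b_std) - 1.0) * 100.0 / sd_x

print(b_std, b_raw, b_unstd, pct_per_unit, pct_per_unit_scaled)
```

The rescaling acts on the fitted model, not on the fitting procedure. It should therefore apply equally to a Lasso fit, as long as `sd_x` is the same value that was used to standardize X.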

Case 2:

ln(y) = a + b (Standardized X)

Here X is itself a percentage, e.g. % of memory currently in use, or % of CPU time spent on a job.

How should I interpret the % change in Y in this case?
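When X is itself a percentage, "1 unit of X" means 1 percentage point (e.g. memory use going from 40 % to 41 %), not a 1 % relative change (40 % to 40.4 %). The sketch below assumes the same model ln(y) = a + b*z. It uses a hypothetical helper `pct_change_in_y` and made-up numbers (b = 0.3 on standardized X, std.dev(X) = 15 percentage points) to compute the exact % change in Y for a change of `delta_x` percentage points.

```python
import math

def pct_change_in_y(b_std, sd_x, delta_x):
    """Exact % change in Y when X rises by delta_x (in X's own units).

    b_std   : coefficient on standardized X in ln(y) = a + b * z
    sd_x    : std.dev of X used for standardizing, in X's units
    delta_x : change in X; if X is a percentage, this is in
              percentage points (40 % -> 41 % is delta_x = 1)
    """
    return (math.exp(b_std * delta_x / sd_x) - 1.0) * 100.0

# Hypothetical numbers: memory-use % with std.dev of 15 percentage points
b_std, sd_mem = 0.3, 15.0
one_point = pct_change_in_y(b_std, sd_mem, 1.0)    # +1 percentage point
ten_points = pct_change_in_y(b_std, sd_mem, 10.0)  # +10 percentage points
one_sd = pct_change_in_y(b_std, sd_mem, sd_mem)    # +1 SD, same as exp(b) - 1
print(one_point, ten_points, one_sd)
```

Because the model is multiplicative in Y, a 10-point increase is not ten times the 1-point effect. With these numbers it is about 22.1 % rather than about 20.2 %.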

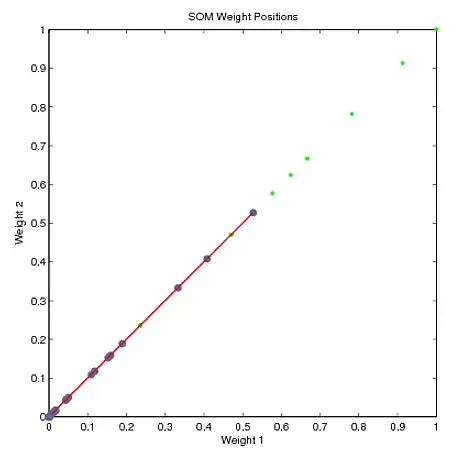

The data in my target (Y) is shown in the picture below: