I have two statistical tests that are inverses of each other, meaning that their null hypotheses are reversed. I want to use both tests to make a decision. For this purpose, I am planning to do the following:

- If both tests point to the same result (say, by rejecting the null hypothesis in test A and not rejecting it in test B, or vice versa), then I go ahead and take that decision.

- On the other hand, if the results of the two tests conflict, I measure the distance of each test's p-value from alpha (say, 0.05) and go with the test showing the largest deviation (I have some tie-breaking rules, but let's leave those aside here).
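To make the rule concrete, here is a minimal Python sketch of the two steps above. The function name `combine_tests`, the labels `"H"` / `"not H"`, and the `"tie"` return value are placeholders of mine; I am assuming test A has null H and test B has null not-H, and that "deviation" means the absolute difference `|p - alpha|`.

```python
def combine_tests(p_a, p_b, alpha=0.05):
    """Combine two tests with reversed nulls (hypothetical helper).

    Assumed setup: test A has null hypothesis H, test B has null "not H".
    Returns "H", "not H", or "tie".
    """
    a_rejects = p_a < alpha  # evidence against H
    b_rejects = p_b < alpha  # evidence against "not H"

    # Step 1: the tests agree when exactly one of them rejects its null.
    if a_rejects and not b_rejects:
        return "not H"
    if b_rejects and not a_rejects:
        return "H"

    # Step 2: conflict (both reject, or neither does).
    # Follow the test whose p-value lies farther from alpha.
    gap_a = abs(p_a - alpha)
    gap_b = abs(p_b - alpha)
    if gap_a == gap_b:
        return "tie"  # tie-breaking rules are left out, as in the question
    if gap_a > gap_b:
        return "not H" if a_rejects else "H"
    return "H" if b_rejects else "not H"


print(combine_tests(0.01, 0.30))   # tests agree
print(combine_tests(0.04, 0.001))  # both reject; test B is farther from alpha
print(combine_tests(0.06, 0.50))   # neither rejects; test B is farther from alpha
```

One thing the sketch makes visible: with alpha = 0.05, a rejecting test can be at most 0.05 away from alpha, while a non-rejecting test can be up to 0.95 away, so under this reading of "deviation" the two directions are not on an equal footing.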

It sounds reasonable to me, but is there any statistical basis for interpreting p-values like this? I certainly haven't seen a similar application before (in my limited exposure).

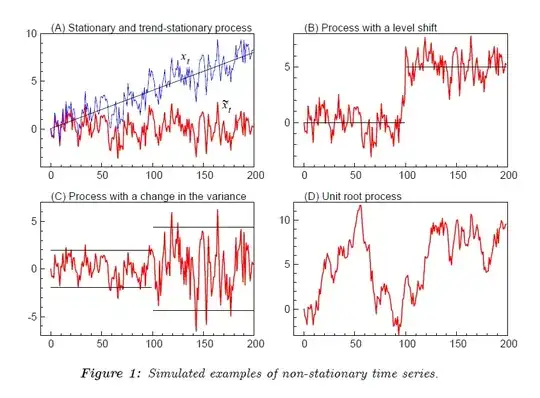

Edit: Let me clarify the question with the actual context and tests. I am testing for unit roots in time series, to determine whether a series needs to be differenced to make it mean-stationary. The particular tests are the KPSS test, whose null is that there is no unit root, and the ADF test, whose null is that a unit root exists. Although the null hypotheses are related (and inverses of each other), the test regressions and the statistics are quite different, in my opinion.