I'm learning stacking and start with the approach outlined in Introduction stacking

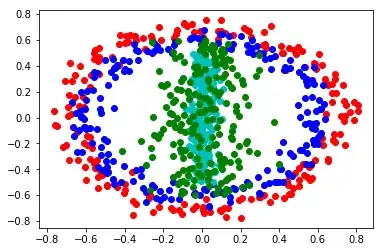

I've plotted the data:

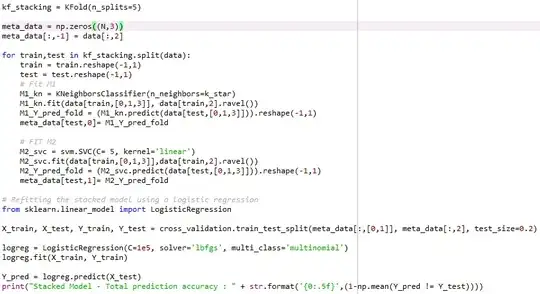

I first would like to check if my algorithm is correct (see below):

So I basically performed 5-Fold CV to generate my test predictions for each base model M1 and M2. The output of this step is a matrix called meta_data of size n*L, with L=2 (2 base models).

Is this fine? I see in some books the following (see below, Z is my meta_data):

What is the rationale for doing this? Is it because after meta_data has been built, we have actually KxL fitted models in total (L=2 fitted models for each fold), but eventually they need to be fit on the FULL training data, so that the stacked model knows where each base model performs well or poorly?

My second question is the following:

With my algorithm, I do CV to estimate the test error on 20% of the data. I use a logistic regression for the stacked model and my test error rate is 69%.

However, the test error rate of M1 alone is 79% and for M2 alone it's 70%. I also checked the correlation of the predictions done my M1 and the ones done by M2: it's roughly 80%.

Based on it, and if my algorithm is fine, is it normal to have worse performance on the stacked model?