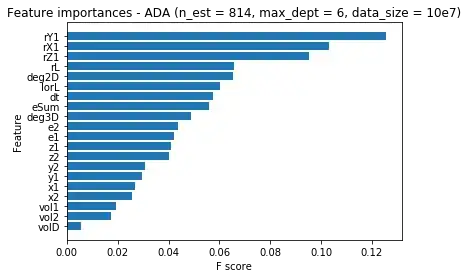

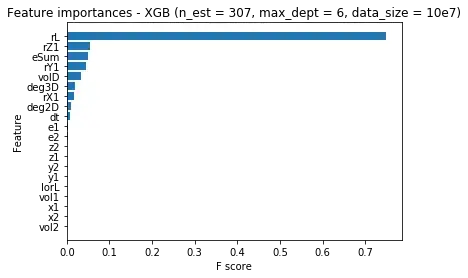

I try to compare XGBoost and AdaBoostClassifier (from sklearn.ensemble) feature importances charts.

From this answer: https://stats.stackexchange.com/a/324418/239354 I get know that AdaBoostClassifier give us feature_importances_ based on gini importance:

model.feature_importances_

From this article: https://towardsdatascience.com/be-careful-when-interpreting-your-features-importance-in-xgboost-6e16132588e7 I get know that using:

model.get_booster().get_score(importance_type = 'gain')

I will receive feature importances based on the same metrics. But I have received two so different charts:

What is more suprising for me is that when I choose importance_type as 'weight' then the new chart for XGBoost is so much more similar to the one for AdaBoostClassifier:

I think I am making mistake somewhere. I am sure that I sorted feature importances for XGBoostClassifier correctly (cause they have random order). Is importance_type = 'gain' the same as gini importance?

EDIT: I made a test with wdbc dataset (https://datahub.io/machine-learning/wdbc) and I think that the difference in feature importances beetwen AdaBoost and XGBoost result from learning algorithms differences. So it's hurt to compare feature importances beetwen them even using the same metrics. Code here (python3.6):

from xgboost import XGBClassifier

import pandas as pd

from sklearn.ensemble import AdaBoostClassifier

from sklearn.tree import DecisionTreeClassifier

import numpy as np

from sklearn.model_selection import train_test_split

import matplotlib.pyplot as plt

dataDirectory = '/mnt/home/jbielecki1/test_env_dir/p3test/cancerData/wdbc.csv'

data = pd.read_csv(dataDirectory)

x = data.iloc[:,:30]

y = data['Class']

x_train, x_test, y_train, y_test = train_test_split(x, y, test_size = 0.3, train_size = 0.7, random_state = 42, stratify = y)

print("Length of dataset: " + str(len(data)))

print("Starting accuracy: " + str(len(y[y == 1])/len(data)*100) + "%")

print("Starting train accuracy: " + str(len(y_train[y_train == 1])/len(y_train)*100) + "%")

print("Starting test accuracy: " + str(len(y_test[y_test == 1])/len(y_test)*100) + "%")

modelAda = AdaBoostClassifier(

base_estimator = DecisionTreeClassifier(max_depth = 2),

algorithm = 'SAMME.R',

n_estimators = 20,

learning_rate = 0.2

)

modelXGB = XGBClassifier(

objective = 'binary:logistic',

booster = 'gbtree',

subsample = 1,

n_estimators = 20,

max_depth = 2,

learning_rate = 0.2

)

modelXGB.fit(x_train, y_train)

modelAda.fit(x_train, y_train)

def plotFeatureImportances(features, importances, modelName):

y_pos = np.arange(features.size)

plt.clf()

indexes = np.argsort(importances)

plt.title("Feature importances - " + modelName)

plt.barh(y_pos, np.sort(importances))

plt.yticks(y_pos, features[indexes])

plt.xlabel('F score')

plt.ylabel("Feature")

plt.show()

def getXGBFeatureImportances(importance_type, modelXGB):

feature_importances = modelXGB.get_booster().get_score(importance_type = importance_type)

feature_importances_values = list(modelXGB.get_booster().get_score(importance_type = importance_type).values())

feature_importances_sum = sum(feature_importances_values)

feature_importances_values_norm = [x/feature_importances_sum for x in feature_importances_values]

correct_feature_importances = dict(zip(

list(modelXGB.get_booster().get_score(importance_type = importance_type).keys()),

feature_importances_values_norm

))

for key, value in feature_importances.items():

correct_feature_importances[key] = value/feature_importances_sum

correct_names_feature_importances = dict(zip(

["f" + str(x) for x in range(len(feature_importances_values))],

correct_feature_importances.values()

))

return correct_feature_importances

plotFeatureImportances(x_test.columns, modelAda.feature_importances_, 'ADA - gini')

xgb_feature_importances_gain = getXGBFeatureImportances('gain', modelXGB)

xgb_feature_importances_gain_keys = pd.Index(list(xgb_feature_importances_gain.keys()))

xgb_feature_importances_gain_values = list(xgb_feature_importances_gain.values())

plotFeatureImportances(xgb_feature_importances_gain_keys, xgb_feature_importances_gain_values, 'XGB - gain')

xgb_feature_importances_weight = getXGBFeatureImportances('weight', modelXGB)

xgb_feature_importances_weight_keys = pd.Index(list(xgb_feature_importances_weight.keys()))

xgb_feature_importances_weight_values = list(xgb_feature_importances_weight.values())

plotFeatureImportances(xgb_feature_importances_weight_keys, xgb_feature_importances_weight_values, 'XGB - weight')