For OLS estimators in multivariate regression analysis, the squared-error loss treats positive and negative errors the same way. I was wondering whether in some situations it might make sense to weight a positive error less than a negative error (or the other way around), giving something like an intentionally optimistic or pessimistic estimate. I tried to develop something like this myself, but since I'm not a mathematician or statistician, I didn't quite manage it, and I can't judge very well whether it really makes sense or whether it already exists. This is what I've got so far:

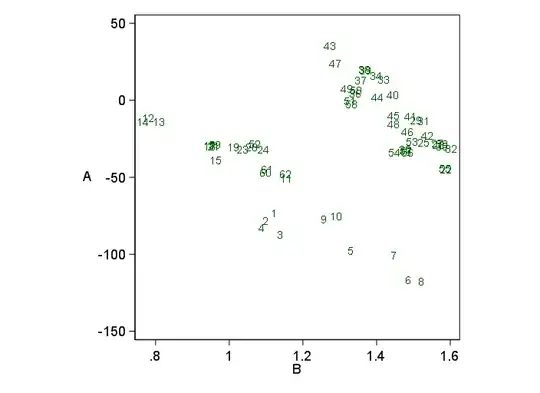

Instead of the error being \begin{equation} SQR_1(\beta) = ||y-X\beta||^2 \end{equation} I was thinking of something like the function: \begin{equation} f(x) = ax^2\cdot s(x) + bx^2\cdot s(-x) \end{equation} where: \begin{equation} s(x) = \frac{1}{2}\cdot( sgn(x)+1) \end{equation} and $a$ and $b$ are parameters weighting the positive/negative errors. $f(x)$ with for example $a=0.2$ and $b=1$ looks like this:

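Instead of $SQR_1$ we can now define, applying $f$ to each residual and summing:

\begin{equation}
SQR_2(\beta) = \sum_{i=1}^{n} f(y_i - x_i^T\beta)
\end{equation}

where $x_i^T$ is the $i$-th row of $X$.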
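To make the definition concrete, here is a minimal Python sketch of the loss. It assumes NumPy, and it assumes that $f$ is meant to be applied to each residual separately with the results summed (so that $a=b=1$ gives back $SQR_1$). The function names `s`, `f` and `sqr_2` are just labels I picked for illustration.

```python
import numpy as np

def s(x):
    # Step function from above: 1 for x > 0, 0 for x < 0, 0.5 at x == 0
    return 0.5 * (np.sign(x) + 1.0)

def f(x, a=0.2, b=1.0):
    # a weights positive errors, b weights negative errors
    return a * x**2 * s(x) + b * x**2 * s(-x)

def sqr_2(beta, X, y, a=0.2, b=1.0):
    # Assumption: f is applied elementwise to the residuals and summed
    r = y - X @ beta
    return float(np.sum(f(r, a, b)))
```

With the default weights, a residual of $+2$ costs $0.2 \cdot 4 = 0.8$, while a residual of $-2$ costs $1 \cdot 4 = 4$.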

I know that I now have to solve $\frac{\partial SQR_2(\beta)}{\partial \beta} = 0$ for $\beta$, and at this point I'm not getting any further. And even if I solve this equation, wouldn't the next problem be to prove that the extremum is a minimum, given that the second derivative of my function is not defined at $x=0$ (whenever $a \neq b$)?
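As far as I can tell, $f$ is still differentiable once everywhere, with $f'(x) = 2ax$ for $x>0$ and $f'(x) = 2bx$ for $x<0$. The first-order condition can then be written as $X^T W(\beta)(y - X\beta) = 0$, where $W(\beta)$ is a diagonal matrix holding $a$ for positive residuals and $b$ for the others. Because $W$ depends on $\beta$, this is not a closed-form solution, but it suggests a simple fixed-point iteration (a kind of iteratively reweighted least squares). The following is only a sketch under the assumptions $a, b > 0$ and $X$ of full column rank; `fit_asymmetric_ls` is a hypothetical name, and I have not proven that the iteration always terminates.

```python
import numpy as np

def fit_asymmetric_ls(X, y, a=0.2, b=1.0, max_iter=100):
    # Start from the ordinary least-squares solution
    beta = np.linalg.lstsq(X, y, rcond=None)[0]
    w = None
    for _ in range(max_iter):
        r = y - X @ beta
        # Weight a for positive residuals, b for negative (or zero) ones
        w_new = np.where(r > 0, a, b)
        if w is not None and np.array_equal(w_new, w):
            # Weights unchanged: beta solves its own weighted normal equations
            break
        w = w_new
        Xw = X * w[:, None]
        # Solve the weighted normal equations X^T W X beta = X^T W y
        beta = np.linalg.solve(X.T @ Xw, X.T @ (w * y))
    return beta
```

As a sanity check, for an intercept-only model with $y = (0, 1)$ the loss on $[0,1]$ is $a(1-\beta)^2 + b\beta^2$, whose minimum is at $\beta = a/(a+b)$, and the sketch reproduces that value. If $a$ and $b$ are both positive, $f$ is convex, so a point where the gradient vanishes should be a global minimum without needing the second derivative at $x=0$.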

So my questions: Do you know how to solve this equation? Do you think that anything I was doing here was useful? Does something like this already exist?
