I have feedforward neural net, trained on cca 34k samples and tested on 8k samples. There is 139 features in dataset. The ANN does classification between two labels, 0 and 1, so I am using sigmoid function in last layer and two hidden layers, both with 400 units. NN is created using following Keras code:

model = Sequential()

model.add(Dense(units=139, input_dim=len(list(X))))

model.add(Dense(units=400))

model.add(Activation('relu'))

model.add(Dropout(0.2))

model.add(Dense(units=400))

model.add(Activation('relu'))

model.add(Dropout(0.2))

model.add(Dense(units=1))

model.add(Activation('sigmoid'))

model.compile(optimizer='sgd',

loss='binary_crossentropy',

metrics=['accuracy'])

checkpoint = ModelCheckpoint('tempmodelKeras.h5',period=1)

custom = LambdaCallback(on_epoch_end=lambda epoch,logs: test_callback_wrapper())

model.fit(X, y, epochs=500, batch_size=128, callbacks=[checkpoint,custom])

test_callback_wrapper() is just for testing model after each epoch on test dataset and then calculating average precision score for different thresholds.

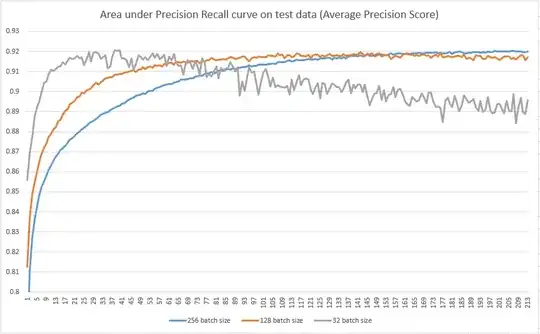

Now the part I need to help with: Following images shows epoch number on X axis, and average precision on Y axis, for test set.

I tried to three different batch sizes (32, 128, 256), but if you look at the plot, it looks that smaller batch sizes are 'faster' in terms of number of epoch needed to reach maximum average precision, but are more prone to overfitting. However, I read several articles, where was written that larger batches usually leads to overfitting and smaller batches are better. How is it possible that in my image, it looks vice versa, that larger batches perform better?