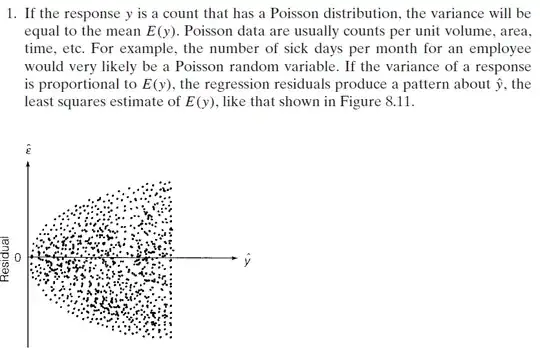

I'd like some intuition on a question that has long confused me. Suppose we have a data-generating process

$$y_i = x_i' \beta + \varepsilon_i $$

where $\mathbb{E}[\varepsilon_i] = 0$, $\varepsilon_i \perp x_i$, and $\varepsilon_i$ is drawn i.i.d. from a probability distribution $U$.

When we regress $y$ on $x$ in a finite sample, we will get a set of residuals $\hat{\varepsilon}_i$. My question is, do these residuals tell us anything about $U$?
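
For concreteness, here is the standard OLS algebra linking the residuals to the true errors. The notation is my own and not part of the setup above: $X$ is the stacked $n \times k$ regressor matrix, assumed to have full column rank, and $U$ is assumed to have finite variance $\sigma^2$.

$$\hat{\varepsilon} = y - X\hat{\beta} = M\varepsilon, \qquad M = I_n - X(X'X)^{-1}X'$$

$$\mathbb{E}\left[\hat{\varepsilon}'\hat{\varepsilon} \mid X\right] = \sigma^2 (n - k)$$

So each residual is the true error minus a linear combination of all $n$ errors. The residuals are therefore neither independent of one another nor exactly distributed as $U$, even though they are built entirely from draws of $U$.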

It seems clear that the greater the variance of $U$ (i.e., the noisier the DGP), the greater we should expect the empirical variance of the $\hat{\varepsilon}_i$ to be, so the two must be related somehow. Moreover, if we observed $y$ and $x$ for the entire population, there would be no estimation error in $\hat{\beta}$, so the residuals would coincide with the true errors and their empirical distribution would match $U$ exactly. So, in finite samples, what can the residuals tell us about the shape of the unknown distribution $U$?
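
To make the question concrete, here is a minimal simulation sketch of what I have in mind. Everything in it is an assumption chosen for illustration: $U$ is taken to be a centered exponential (mean 0, variance 1, right-skewed), there is an intercept plus a single standard-normal regressor, and the helper names `simulate_residuals` and `ks_distance` are my own. It compares the OLS residuals with the true errors, which are observable only because the data are simulated.

```python
import numpy as np

def simulate_residuals(n, beta=(1.0, 2.0), seed=0):
    """Draw one sample from the assumed DGP; return true errors and OLS residuals."""
    rng = np.random.default_rng(seed)
    # Design matrix: intercept plus one standard-normal regressor (an assumption).
    X = np.column_stack([np.ones(n), rng.normal(size=n)])
    # Assumed U: exponential shifted to have mean 0 (variance 1, skewed).
    eps = rng.exponential(scale=1.0, size=n) - 1.0
    y = X @ np.asarray(beta) + eps
    # OLS fit via least squares.
    beta_hat, *_ = np.linalg.lstsq(X, y, rcond=None)
    resid = y - X @ beta_hat
    return eps, resid

def ks_distance(a, b):
    """Largest gap between the empirical CDFs of samples a and b."""
    grid = np.sort(np.concatenate([a, b]))
    cdf_a = np.searchsorted(np.sort(a), grid, side="right") / a.size
    cdf_b = np.searchsorted(np.sort(b), grid, side="right") / b.size
    return float(np.max(np.abs(cdf_a - cdf_b)))

for n in (20, 200, 2000):
    eps, resid = simulate_residuals(n)
    print(f"n={n:5d}  var(eps)={np.var(eps):.3f}  "
          f"var(resid)={np.var(resid):.3f}  "
          f"ECDF gap={ks_distance(eps, resid):.3f}")
```

Because the fit includes an intercept, the residuals sum to zero and their sum of squares can never exceed that of the true errors. What I would like to understand is how quickly, and in what sense, the gap between the two empirical distributions closes as $n$ grows.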