This question is motivated by the discussion of this earlier question.

I have two samples $X$ and $Y$, where both samples have $n$ elements. Both samples represent optimal solutions returned from two different stochastic optimisation solvers on the same optimisation problem: results from one solver are in sample $X$, and results from the other solver are in sample $Y$. Each element in the samples are independent of each other, and both samples are independent of each other.

I am wondering what the appropriate method is to compare samples $X$ and $Y$ to detect if one solver tends to provide better optimal solutions than the other solver for the particular optimisation problem.

I have two ideas:

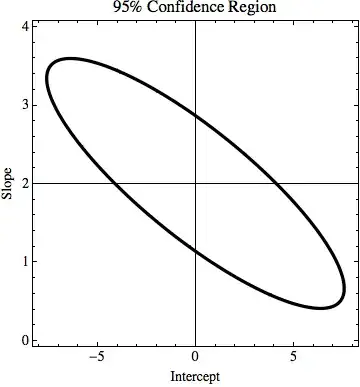

estimate a sample mean $\bar{d}$ of the element-wise differences of $X$ and $Y$ and check if the 95% confidence interval of this sample mean includes zero.

estimate a sample statistic $\bar{m}$ of the difference in sample means of $X$ and $Y$ and check if the 95% confidence interval of this sample statistic includes zero.

For either idea, we assume that one solver is better than the other solver if zero does not fall within that idea's estimated confidence interval of the relevant sample statistic.

In the first idea, I think the algorithm would be:

- produce a single bootstrap resample with replacement of $X$, called $X^{*}$ with $n$ elements

- produce a single bootstrap resample with replacement of $Y$, called $Y^{*}$ with $n$ elements

- take the element-wise difference between $X^{*}$ and $Y^{*}$ to give $D^{*}$

- calculate a resample mean $\bar{d}^{*}$ of $D^{*}$ and store $\bar{d}^{*}$

- repeat steps 1 to 4 a large number of times (say 10000) to give a set of $\bar{d}^{*}$ values

- use the empirical distribution of the $\bar{d}^{*}$ values to estimate the 95% confidence interval of the sample mean difference $\bar{d}$

For the second idea, I think the algorithm (rewritten from this question) would be:

- produce a single bootstrap resample with replacement of $X$, called $X^{*}$ with $n$ elements

- produce a single bootstrap resample with replacement of $Y$, called $Y^{*}$ with $n$ elements

- calculate a resample mean $\bar{x}^{*}$ for $X^{*}$

- calculate a resample mean $\bar{y}^{*}$ for $Y^{*}$

- take the difference $\bar{m}^{*}$ between $\bar{x}^{*}$ and $\bar{y}^{*}$, and store $\bar{m}^{*}$

- repeat steps 1 – 5 a large number of times (say 10000) to give a set of $\bar{m}^{*}$ values

- use the empirical distribution of the $\bar{m}^{*}$ values to estimate the 95% confidence interval of the sample statistic $\bar{m}$

I believe that idea 1 and idea 2 are nonparametric bootstrap approaches which are analogous to the paired samples t-test and the independent samples t-test respectively. I think that the second idea is the more appropriate idea, since I think the first idea assumes the specific pairings of elements between $X$ and $Y$ are important when they are in fact not important.

Of course, my post assumes that looking at means is the most sensible way of comparing results; I am open to discussion over other point statistics or interval statistics that more knowledgable people may be aware of.

Am I right in my thinking that the second idea is the appropriate way of comparing the optimal solutions provided by these two solvers on the same optimisation problem?