We all know that logistic regression is used to calculate probabilities through the logistic function. For a dependent categorical random variable $y$ and a set of $n$ predictors $\textbf{X} = [X_1 \quad X_2 \quad \dots \quad X_n]$ the probability $p$ is

$$p = P(y=1|\textbf{X}) = \frac{1}{1 + e^{-(\alpha + \boldsymbol{\beta}\textbf{X})}}$$

The cdf of the logistic distribution is parameterized by its scale $s$ and location $\mu$

$$F(x) = \frac{1}{1 - e^{-\frac{x - \mu}{s}}}$$

So, for $\textbf{X} = X_1$ it is easy to see that

$$s = \frac{1}{\beta}, \quad \mu = -\alpha s$$

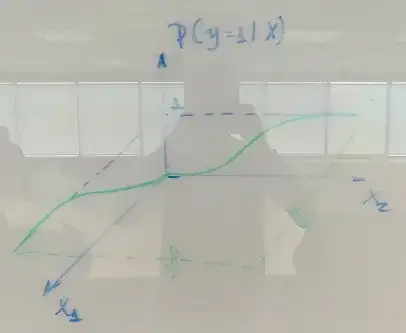

and this way we map the two fashions of the sigmoid curve. However, how does this mapping works when $\textbf{X}$ has more than one predictor? Say $\textbf{X} = [X_1 \quad X_2]$, what I see from a tri-dimensional perspective is depicted in the figure below.

So, $\textbf{s} = [s_1 \quad s_2]$ and $\boldsymbol{\mu} = [\mu_1 \quad \mu_2]$ would become

$$\textbf{s} = \boldsymbol{\beta}^{-1}, \quad \boldsymbol{\mu} = -\alpha\textbf{s}$$

and $p$ would derive from the linear combination of the parameters and the predictors in $\textbf{X}$. The way the unknown parameters of the logistic regression function relate to the cdf of the logistic distribution is what I am trying to understand here. I would be glad if someone could provide with insights on this matter.