I have a machine learning problem and have been working in Sklearn/Pandas with Python to come up with an accurate model. I find myself deep in a rabbit hole trying to learn the best approach and how many variables are too many variables while trying to avoid overfitting.

Each model is for a different area with the variables indicated below:

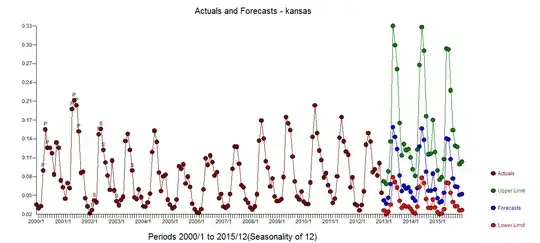

x = monthly precipitation departure (this can be used as overall monthly averages over an area, or can be broken down into sub-areas from the overall area of interest to add additional variables) For example Kansas Group 1 can be treated as a whole or could be separated into sub-areas with monthly averages for each area.

y = monthly availability of a resource (eg. Jan = 0.003827)

n = 16 years or 192 months of data

I have tried many different approaches.

The first approach was modeling each month separately so a model for January (n=16) and a model for February (n=16) etc. using the following modeling techniques:

- LinearRegression using my own assigned weighting variables as a

weighted running mean analysis

- RandomTrees with tuning variables

- RandomForest with tuning variables

- ExtraTrees with tuning variables

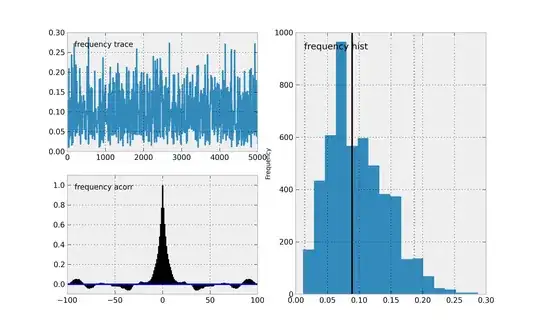

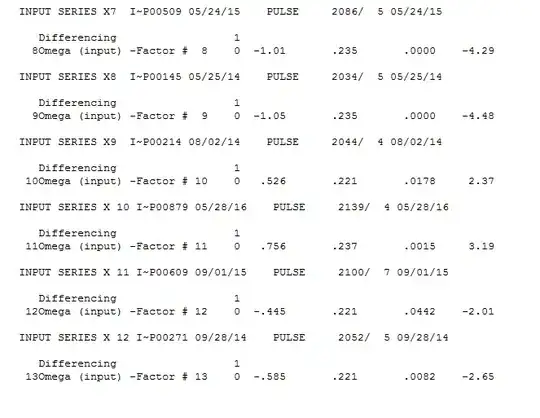

Then in order to try and improve the model, I most recently employed a time series model (n=192) - SARIMAX with tuning variables p,d,q,P,D,Q,12

Any advice or resources are greatly appreciated.