I have generated a dataset of artificial data and want to distinguish two labels from each other using a random forest.

I thought having correlated features in my dataset will decrease the algorithms accuracy, so I identified the correlated features in the heatmap below and remove all but one of them. So that I am left with "unique" features only.

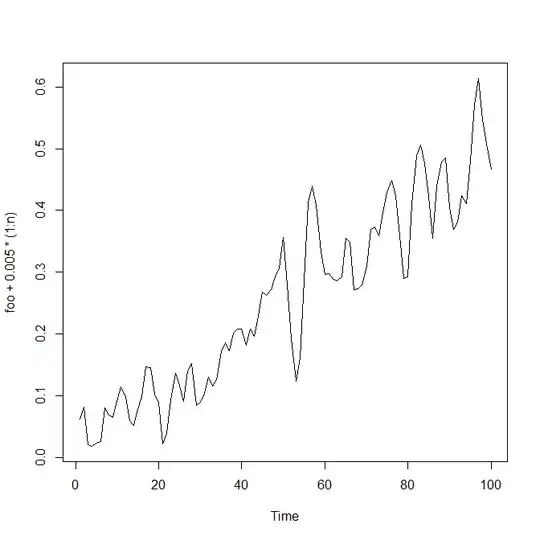

However when I plot the accuracy of the forest over the number of features used for training and testing, then in the case of having deleted the correlated features I reach overall decreased accuracy (Hence the two pictures at the right).

The two posts (Won't highly-correlated variables in random forest distort accuracy and feature-selection? and Selecting good features) seem two concern similar issues, but I do not quite understand how they apply to me.

How can I remove the correlation from my dataset and reach an increase in accuracy?