I disagree somewhat with the first answer by @Daniel Lopez.

Tests. Most crucially, $t$ tests can work quite well even if the approximation to normal distributions is rough. You're showing the distribution of one group, but not the other, and have not explained whether the groups are paired or not. If paired, then it's the distribution of the differences that is germane.

Either way, I'd wager -- given what you show -- that the results of a $t$ test will be consistent with those of a (Wilcoxon-)Mann-Whitney test, while also delivering concrete results such as a confidence interval for the difference of means, which is much more likely than a significance test to be scientifically or practically interesting and useful.

There is no great harm in doing both tests.
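As a minimal sketch of doing both, assuming unpaired groups and Python with NumPy and SciPy: the data below are simulated stand-ins (slightly right-skewed gamma draws), not your measurements, and the function name `compare_two_groups` is made up for illustration. The confidence interval is computed by hand from the Welch formulas so that it does not depend on any particular SciPy version.

```python
import numpy as np
from scipy import stats

def compare_two_groups(a, b, level=0.95):
    """Welch t test, Mann-Whitney test, and a CI for the difference of means."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)

    # Both tests, for comparison (unpaired groups assumed).
    t_res = stats.ttest_ind(a, b, equal_var=False)
    u_res = stats.mannwhitneyu(a, b, alternative="two-sided")

    # Welch confidence interval for mean(a) - mean(b).
    diff = a.mean() - b.mean()
    va = a.var(ddof=1) / a.size
    vb = b.var(ddof=1) / b.size
    se = np.sqrt(va + vb)
    df = (va + vb) ** 2 / (va ** 2 / (a.size - 1) + vb ** 2 / (b.size - 1))
    half_width = stats.t.ppf(0.5 + level / 2, df) * se

    return {
        "diff": diff,
        "se": se,
        "ci": (diff - half_width, diff + half_width),
        "t_stat": t_res.statistic,
        "t_pvalue": t_res.pvalue,
        "mw_pvalue": u_res.pvalue,
    }

# Simulated, mildly skewed data standing in for the two groups.
rng = np.random.default_rng(42)
group_a = rng.gamma(shape=4.0, scale=2.5, size=300)
group_b = rng.gamma(shape=4.0, scale=3.0, size=300)

result = compare_two_groups(group_a, group_b)
print(result)
```

If the groups are paired, the same idea applies to the differences: a one-sample $t$ interval for their mean alongside a Wilcoxon signed-rank test.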

Transformations. I am a big fan in general, but on the evidence of your graph -- we need more evidence -- it is possibly not worth the complication. If you jump the other way, then cube roots preserve sign while pulling in tails, so that negative, zero and positive values remain that way. In practice, your software will need to be told how to handle varying sign with some incantation such as `sign(x) * abs(x)^(1/3)`. That said, a transformation such as cube root is not very common, and it's likely that colleagues in your science will react with puzzlement or even hostility to a transformation they have not heard of. (Many scientists combine enthusiasm for new methods for producing data with resistance to any method for analysing data they didn't learn before early graduate school, and I am not a statistician saying that.)
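For concreteness, here is one way to spell that incantation in Python, using only the standard library. The function name `signed_cube_root` and the sample values are mine, purely for illustration.

```python
import math

def signed_cube_root(x):
    """Cube root that keeps the sign of x: sign(x) * abs(x)^(1/3)."""
    # copysign attaches the sign of x to the cube root of its magnitude,
    # which avoids raising a negative number to a fractional power.
    return math.copysign(abs(x) ** (1.0 / 3.0), x)

# Made-up values spanning negative, zero and positive.
values = [-27.0, -1.0, 0.0, 1.0, 8.0, 1000.0]
transformed = [signed_cube_root(v) for v in values]
print(transformed)
```

If you work with NumPy arrays, `numpy.cbrt` accepts negative inputs directly and does the same job.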

Although the evidence you give does not indicate to me that a transformation is imperative, I would like to comment on an all too common prejudice against transformations. Choosing a transformation isn't changing your data: it is just looking at them on a different scale. Any scientist who has ever used pH, or decibels, or the Richter scale, for example, has already used a transformed scale as a matter of convention or convenience. (All of those examples are logarithmic scales.) Anyone who has looked at an atlas has seen different projections in operation, one often being (much) better than another, depending on purpose and the area being shown. Choosing a projection does not change or deny the shape of the planet, which remains exactly as it was before you chose a map to help your thinking. So also with transformations....

Graphics. Histograms are helpful, but by far the most useful graphical way to assess normality, or the degree of lack thereof, is a normal quantile plot, historically often called a normal probability plot or normal scores plot. As mentioned, if your groups are paired, then such a normal quantile plot for differences is advisable. I would put less stress than you do on the apparent outliers: slight positive skewness is par for the course in many fields and the highest values can be perfectly natural, more akin to basketball players than invading giants who are threatening havoc.
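A rough sketch of what goes into a normal quantile plot, using only Python's standard library: sort the values and pair each with the standard normal quantile at its plotting position. The paired numbers are invented, the helper name `normal_quantile_pairs` is hypothetical, and $(i - 0.5)/n$ is only one of several plotting-position conventions in use.

```python
from statistics import NormalDist

def normal_quantile_pairs(values):
    """Return (theoretical normal quantile, observed value) pairs."""
    ordered = sorted(values)
    n = len(ordered)
    std_normal = NormalDist()  # mean 0, standard deviation 1
    pairs = []
    for i, observed in enumerate(ordered, start=1):
        p = (i - 0.5) / n  # plotting position; other conventions exist
        pairs.append((std_normal.inv_cdf(p), observed))
    return pairs

# Invented paired measurements; with paired data, plot the differences.
before = [12.1, 9.8, 11.4, 10.3, 13.7, 9.9, 10.8, 12.5]
after = [11.2, 9.9, 10.1, 10.0, 12.2, 9.1, 10.9, 11.3]
differences = [b - a for b, a in zip(before, after)]

pairs = normal_quantile_pairs(differences)
for theoretical, observed in pairs:
    print(f"{theoretical:8.3f}  {observed:8.3f}")
```

Plot observed against theoretical with whatever graphics tool you prefer. Points lying close to a straight line indicate approximate normality, and gentle curvature indicates skewness.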

Given your data, I would want first to go beyond histograms. Many threads here are pertinent. These links will suggest others:

How to visualize independent two sample t-test?

Interpreting QQplot - Is there any rule of thumb to decide for non-normality?

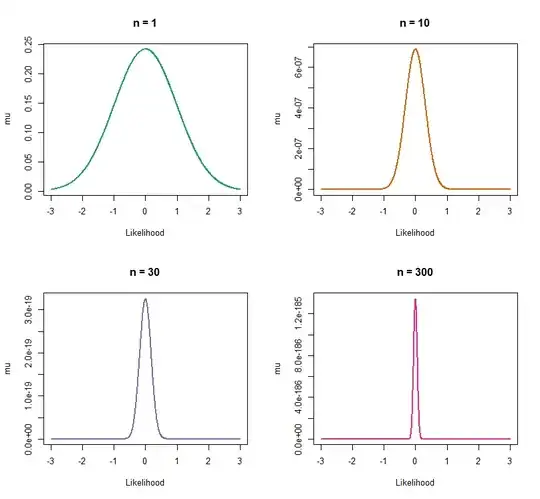

Note. You have hundreds of measurements, it seems. With samples that large, even trivial differences can qualify as significant at conventional levels. The real question is the magnitude of the differences, where Wilcoxon-Mann-Whitney cannot help you.
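To see how sample size alone drives significance, here is a back-of-envelope sketch in Python. It assumes two equal-sized groups, an observed difference of exactly 0.2 standard deviations (whether that counts as trivial depends on your field), and a large-sample normal approximation in place of the $t$ distribution. All the numbers are illustrative.

```python
from math import sqrt
from statistics import NormalDist

def approx_two_sided_p(std_diff, n_per_group):
    """Approximate two-sided P-value for a standardized mean difference.

    Assumes equal group sizes and uses the normal approximation, so the
    standard error of the difference in SD units is sqrt(2 / n_per_group).
    """
    z = std_diff * sqrt(n_per_group / 2)
    return 2 * (1 - NormalDist().cdf(abs(z)))

small_effect = 0.2  # difference in standard deviation units (illustrative)
p_values = {n: approx_two_sided_p(small_effect, n) for n in (50, 200, 500)}

for n, p in p_values.items():
    print(f"n per group = {n:4d}   approximate P = {p:.4f}")
```

The same difference goes from unremarkable at 50 per group to clearly significant at 500 per group, which is why an estimate of its size, with a confidence interval, tells you more than the P-value does.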