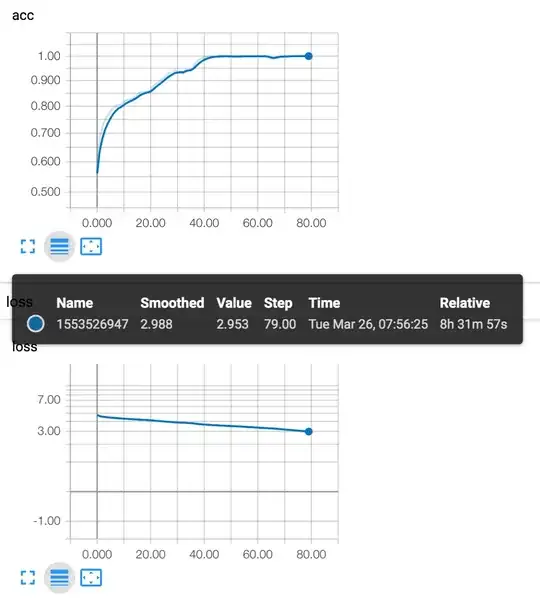

I am training a binary classifier in Keras with cross-entropy loss: `model.compile(loss='binary_crossentropy', optimizer=optimizer, metrics=['accuracy'])`. What confuses me most is that my `train_acc` and `train_loss` are inconsistent: sometimes `train_acc` is 1 while `train_loss` is close to 3.

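If I understand correctly, a training accuracy of 1 means every prediction falls on the correct side of the 0.5 threshold. In that case each per-sample loss is at most -ln(0.5), so `train_loss` should not exceed 0.693 (= ln 2), which is the cross-entropy loss when every prediction is 0.5.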
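The bound above can be checked numerically. This is a minimal NumPy sketch, not Keras itself: it assumes binary cross-entropy with a small clipping epsilon and an accuracy rule that predicts class 1 when the output exceeds 0.5 (similar to Keras's defaults, but reimplemented here). The helper names `bce` and `accuracy` are hypothetical.

```python
import numpy as np

def bce(y_true, y_pred, eps=1e-7):
    # Mean binary cross-entropy; clipping by eps (assumed value) avoids log(0)
    y_pred = np.clip(y_pred, eps, 1.0 - eps)
    return float(np.mean(-(y_true * np.log(y_pred) + (1.0 - y_true) * np.log(1.0 - y_pred))))

def accuracy(y_true, y_pred, threshold=0.5):
    # A prediction counts as class 1 only when it is strictly above the threshold
    return float(np.mean((y_pred > threshold) == (y_true == 1)))

# Reference point from the question: every prediction equal to 0.5
y = np.array([0.0, 1.0, 1.0, 0.0])
print(bce(y, np.full(4, 0.5)))  # ≈ 0.693 (ln 2)

# Random predictions forced onto the correct side of 0.5, so accuracy is 1
rng = np.random.default_rng(0)
labels = rng.integers(0, 2, size=10_000).astype(float)
margin = rng.uniform(1e-6, 0.5, size=labels.shape)
preds = np.where(labels == 1, 0.5 + margin, 0.5 - margin)

print(accuracy(labels, preds))          # 1.0
print(bce(labels, preds) <= np.log(2))  # True
```

Under these assumptions, a correctly classified sample always has loss -ln(0.5 + margin) < ln 2, so the mean cannot reach values like 3.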

I have checked the data: the label is 0 for false and 1 for true. Another dataset works well with the same code. What could cause this unusual behavior, or is my reasoning wrong?
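For reference, a label check like the one described above might look like this minimal NumPy sketch. The helper `check_binary_labels` is hypothetical; it only confirms that every label is exactly 0 or 1 (rejecting, for example, 2, -1, or NaN) and reports how many of each there are.

```python
import numpy as np

def check_binary_labels(y):
    # Hypothetical helper: raise if any label is not exactly 0 or 1
    y = np.asarray(y)
    values, counts = np.unique(y, return_counts=True)
    if not np.all(np.isin(values, [0, 1])):
        raise ValueError(f"unexpected label values: {values}")
    # Map each label value to its count, e.g. {0: 2, 1: 3}
    return dict(zip(values.tolist(), counts.tolist()))

print(check_binary_labels(np.array([0, 1, 1, 0, 1])))  # {0: 2, 1: 3}
```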

I uploaded a picture for illustration, but it does not show up; this may be a bug.