I'm doing a little self study project, and am trying to implement OLS, Ridge, and Lasso regression from scratch using just Numpy, and am having problems getting this to work with Lasso regression.

To check my results I'm comparing my results with those returned by Scikit-Learn.

The coefficients for OLS can be derived from the following expression:

In numpy, this can be implemented in the following way:

np.linalg.inv(X.T @ X) @ (X.T @ y)

If I use the boston housing dataset from scikit learn with this equation my coefficients match what's returned from scikit learn exactly.

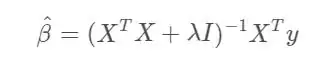

The coefficients from Ridge Regression can be represented with the following expression:

In numpy this can be represented in the following way:

np.linalg.inv(X.T @ X + alpha * np.identity(X.shape[1]) @ (X.T @ y)

Again, if I plug in the appropriate variables for X, y and alpha, my coefficients match what's output by Scikit Learn's Ridge class.

However, I run into large discrepancies when I try to match the results from its Lasso.

I believe the coefficients for Lasso Regression can be derived in the following way:

I haven't seen this coded anywhere on the web, but I assume its implementation would look like this:

np.linalg.inv(X.T @ X) @ (X.T @ y - alpha*np.ones(X.shape[1]))

This returns results, but they do not match what's in scikit learn, and it's not particularly close. If I run a Lasso regression with alpha set to 10 in scikit learn on the boston dataset half of the coefficients are shrunk to zero. Whereas with my implementation they are shrunk only slightly.

What mistake am I making in getting Lasso to work?

Thank you.