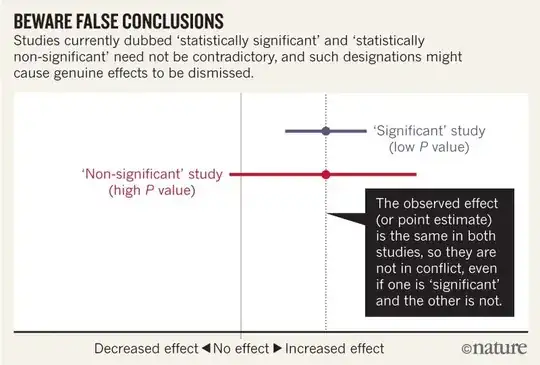

The Comment in Nature titled "Scientists rise up against statistical significance" begins with:

> Valentin Amrhein, Sander Greenland, Blake McShane and more than 800 signatories call for an end to hyped claims and the dismissal of possibly crucial effects.

and later contains statements like:

> Again, we are not advocating a ban on P values, confidence intervals or other statistical measures — only that we should not treat them categorically. This includes dichotomization as statistically significant or not, as well as categorization based on other statistical measures such as Bayes factors.

I think I can grasp that the image above (from the article) does not say that the two studies disagree because one "rules out" no effect while the other does not. But the article seems to go into much more depth than I can understand.

Towards the end there seems to be a summary in four points. Is it possible to summarize these in even simpler terms for those of us who read statistics rather than write it?

> When talking about compatibility intervals, bear in mind four things.
>
> First, just because the interval gives the values most compatible with the data, given the assumptions, it doesn’t mean values outside it are incompatible; they are just less compatible...
>
> Second, not all values inside are equally compatible with the data, given the assumptions...
>
> Third, like the 0.05 threshold from which it came, the default 95% used to compute intervals is itself an arbitrary convention...
>
> Last, and most important of all, be humble: compatibility assessments hinge on the correctness of the statistical assumptions used to compute the interval...
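
To make the four points a little more concrete, here is a rough sketch of how I currently read them. Everything in it is an assumption for illustration: the estimate and standard error are made-up numbers (not taken from the article), the helper names `p_value` and `interval` are hypothetical, and the whole calculation rests on a simple normal model, which is exactly the kind of assumption the fourth point warns about.

```python
import math
from statistics import NormalDist

# Hypothetical study result (illustrative numbers, not from the Nature article).
ESTIMATE = 1.2    # estimated effect
STD_ERROR = 0.5   # standard error of the estimate


def p_value(hypothesized, estimate=ESTIMATE, std_error=STD_ERROR):
    """Two-sided p-value for a hypothesized effect, assuming a normal model."""
    z = (estimate - hypothesized) / std_error
    return math.erfc(abs(z) / math.sqrt(2))


def interval(level, estimate=ESTIMATE, std_error=STD_ERROR):
    """Interval at the given level (e.g. 0.95), assuming a normal model."""
    z = NormalDist().inv_cdf(0.5 + level / 2)
    return estimate - z * std_error, estimate + z * std_error


low, high = interval(0.95)
print(f"95% interval: {low:.2f} to {high:.2f}")

# Points 1 and 2: compatibility (here, the p-value) changes smoothly.
# Values inside the interval are not equally compatible with the data,
# and a value just outside is barely less compatible than one just inside.
for effect in (ESTIMATE, (ESTIMATE + high) / 2, high - 0.01, high + 0.01, high + 0.5):
    print(f"hypothesized effect {effect:5.2f}: p = {p_value(effect):.3f}")

# Point 3: the 95% level is a convention; other levels move the limits.
for level in (0.90, 0.95, 0.99):
    lo, hi = interval(level)
    print(f"{level:.0%} interval: {lo:.2f} to {hi:.2f}")
```

If this reading is right, the interval limits are just the places where the p-value happens to cross 0.05, rather than a boundary between "compatible" and "incompatible" effects.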