I've been coding up a neural network package for my own amusement, and it seems to work. I've been reading about Adam and from what I've seen it's very difficult to beat.

Well, when I implement the Adam algorithm in my code it does terribly - converging very slowly or even diverging for some of the problems I've tested. It seems like I must have made an error, but the algorithm is pretty straightforward.

To rule out a programming error, I created a very simple function in Excel and compared Adam to standard gradient descent. From what I can see, standard gradient descent converges faster pretty consistently across a wide range of parameter settings (at least for relatively simple, deterministic functions). Adam seems to converge much more reliably regardless of what you feed it, but is consistently slower.

However, what I've read pretty consistently paints Adam as a panacea that converges significantly faster than any other algorithm in pretty much all situations. So what gives?

Does it only outperform other algorithms on sufficiently complex problems? Do the hyperparameters need to be tuned more carefully? Do I need to look at my network architecture more carefully if I'm not getting convergence? Are there certain activation functions that make it perform especially poorly? Or maybe I've just straight up implemented the algorithm incorrectly?

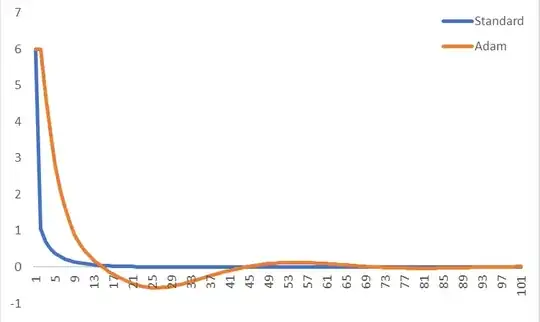

Here's an example where I compared standard gradient descent to Adam for f(x) = x^2 + x^4, using a learning rate of 0.1 (with 0.9, 0.999 and 1e-8 for Adam's β1, β2 and ε). I've plotted the gradient at each iteration, starting both at x=1. For small learning rates Adam is slower to converge on this simple function, but it converges for every learning rate I've tested, whereas standard gradient descent struggles to converge for learning rates over about 0.3. Does this look right, or does it look like I've got something wrong?
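
For anyone who wants to reproduce the comparison outside Excel, here is a minimal Python sketch of the same experiment. It assumes the standard Adam update with bias correction; the function names (`grad`, `gradient_descent`, `adam`) are made up for illustration and are not from my package or spreadsheet.

```python
import math

def grad(x):
    # Derivative of f(x) = x^2 + x^4
    return 2 * x + 4 * x ** 3

def gradient_descent(x0, lr, steps):
    # Plain gradient descent; returns the list of iterates x_0 .. x_steps
    xs = [x0]
    x = x0
    for _ in range(steps):
        x -= lr * grad(x)
        xs.append(x)
    return xs

def adam(x0, lr, steps, beta1=0.9, beta2=0.999, eps=1e-8):
    # Adam with bias correction (assumed formulation); returns x_0 .. x_steps
    xs = [x0]
    x, m, v = x0, 0.0, 0.0
    for t in range(1, steps + 1):
        g = grad(x)
        m = beta1 * m + (1 - beta1) * g        # first-moment estimate
        v = beta2 * v + (1 - beta2) * g * g    # second-moment estimate
        m_hat = m / (1 - beta1 ** t)           # bias-corrected first moment
        v_hat = v / (1 - beta2 ** t)           # bias-corrected second moment
        x -= lr * m_hat / (math.sqrt(v_hat) + eps)
        xs.append(x)
    return xs

gd_xs = gradient_descent(1.0, 0.1, 100)
adam_xs = adam(1.0, 0.1, 100)

# Print the gradient at a few iterations for both methods
for t in (0, 1, 5, 10, 50, 100):
    print(t, grad(gd_xs[t]), grad(adam_xs[t]))
```

Changing the `lr` argument in the two calls at the bottom should make it easy to check how each method behaves at other learning rates.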

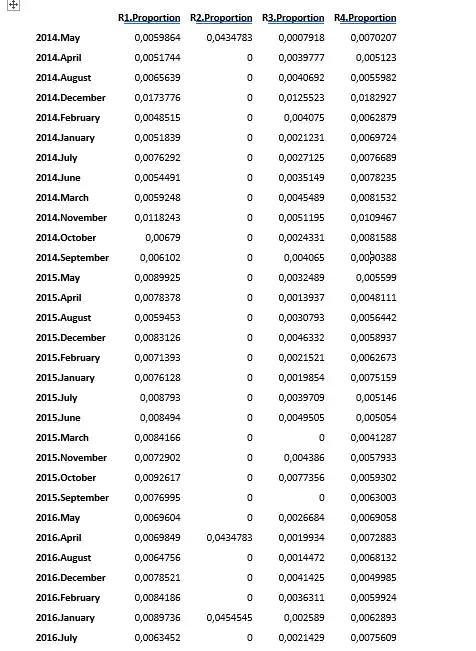

Here are the intermediate variables for a few iterations of Adam:
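
For reference, a sketch of the standard Adam update, assuming that is the formulation used here (the 0.9, 0.999 and 1e-8 values match its usual β1, β2 and ε defaults). The symbols are my labels: α is the learning rate, g_t the gradient, and m_t, v_t the moment estimates, with m_0 = v_0 = 0:

$$
\begin{aligned}
g_t &= f'(x_{t-1}) = 2x_{t-1} + 4x_{t-1}^3 \\
m_t &= \beta_1 m_{t-1} + (1-\beta_1)\, g_t \\
v_t &= \beta_2 v_{t-1} + (1-\beta_2)\, g_t^2 \\
\hat m_t &= \frac{m_t}{1-\beta_1^t}, \qquad \hat v_t = \frac{v_t}{1-\beta_2^t} \\
x_t &= x_{t-1} - \alpha\, \frac{\hat m_t}{\sqrt{\hat v_t}+\epsilon}
\end{aligned}
$$

Under this formulation the first iteration gives $\hat m_1 = g_1$ and $\hat v_1 = g_1^2$, so the first step has magnitude very close to $\alpha$ whatever the size of the gradient. Starting at x=1 with α = 0.1, Adam moves to about 0.9, while plain gradient descent (gradient 6 at x=1) moves to 0.4.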

I (perhaps naively) expected that I would just plug the Adam algorithm into my code with a stock set of parameters, and everything would just speed up. What am I missing here?

Thanks for any help!