I ran a logistic regression and a decision tree model on the same data. The accuracy shows that the decision tree slightly outperforms the logistic regression. However, my ROC curve shows that the logistic regression is much better than the decision tree. How could this happen?



-

In general, you shouldn't use accuracy to evaluate a model (see: [RMSE (Root Mean Squared Error) for logistic models](https://stats.stackexchange.com/q/172945/7290)). That said, those values are awfully close; how much data do you have in the test set? Are the ROC curves also from the test set? – gung - Reinstate Monica Mar 12 '19 at 16:55

-

Yes, you are right, but the homework asks me to calculate both the accuracy and the ROC curve and interpret the results, and I don't understand why the accuracy and the ROC curve don't agree with each other. There are 615 observations in my test set. I used the test set for both the accuracy and the ROC curve. – YY_H Mar 12 '19 at 18:06

-

If your test set N was 615, the accuracy numbers appear to correspond to 46 & 51 misclassifications, respectively. Is it reasonable to think that that difference is reliable? You need the 2x2 table of misclassifications by the 2 models to conduct McNemar's test to see if there's a difference (see: [Compare classification performance of two heuristics](https://stats.stackexchange.com/q/185450/7290)). However, an exact 95% CI for the proportion 0.0829 (51/615) is [0.0624, 0.1076], which contains the corresponding value for CART. – gung - Reinstate Monica Mar 12 '19 at 18:22
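The two checks mentioned in this comment can be sketched in plain Python. This is only an illustration: it assumes the quoted "exact" interval is a Clopper-Pearson interval, and the discordant counts passed to the McNemar function are made-up placeholders, because the real 2x2 table is not given in the thread. The function names are hypothetical helpers, not part of any library.

```python
from math import comb, exp, lgamma, log, log1p


def binom_cdf(x, n, p):
    """P(X <= x) for X ~ Binomial(n, p) with 0 < p < 1, summed in log space."""
    if x < 0:
        return 0.0
    if x >= n:
        return 1.0
    total = 0.0
    for k in range(x + 1):
        log_term = (lgamma(n + 1) - lgamma(k + 1) - lgamma(n - k + 1)
                    + k * log(p) + (n - k) * log1p(-p))
        total += exp(log_term)
    return min(1.0, total)


def _solve_increasing(f, target, iters=50):
    """Bisection on (0, 1) for f(p) = target, where f is increasing in p."""
    lo, hi = 0.0, 1.0
    for _ in range(iters):
        mid = (lo + hi) / 2
        if f(mid) < target:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2


def clopper_pearson(x, n, alpha=0.05):
    """Exact (Clopper-Pearson) confidence interval for a proportion x / n."""
    # Lower bound: the p at which P(X >= x) equals alpha / 2.
    lower = 0.0 if x == 0 else _solve_increasing(
        lambda p: 1.0 - binom_cdf(x - 1, n, p), alpha / 2)
    # Upper bound: the p at which P(X <= x) equals alpha / 2.
    upper = 1.0 if x == n else _solve_increasing(
        lambda p: 1.0 - binom_cdf(x, n, p), 1.0 - alpha / 2)
    return lower, upper


def mcnemar_exact(b, c):
    """Two-sided exact McNemar p-value from the two discordant counts.

    b = cases only model A got wrong, c = cases only model B got wrong.
    """
    n = b + c
    if n == 0:
        return 1.0
    tail = sum(comb(n, i) for i in range(min(b, c) + 1)) / 2 ** n
    return min(1.0, 2 * tail)


# 51 and 46 misclassifications out of 615 come from the comment above.
low, high = clopper_pearson(51, 615)
print(f"95% CI for 51/615: [{low:.4f}, {high:.4f}]")
print(f"46/615 = {46 / 615:.4f}, inside the interval: {low < 46 / 615 < high}")

# Placeholder discordant counts (NOT from the thread), chosen only so that
# they differ by 5, like 51 - 46.
print(f"McNemar exact p-value: {mcnemar_exact(12, 17):.3f}")
```

If the quoted interval is indeed Clopper-Pearson, the first line printed should land close to the [0.0624, 0.1076] given in the comment. The McNemar result is only meaningful once the placeholder counts are replaced with the real number of test cases that each model alone misclassified.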

-

Related: https://stats.stackexchange.com/questions/358101/statistical-significance-p-value-for-comparing-two-classifiers-with-respect-to/358598#358598 – Sycorax Mar 12 '19 at 18:27

-

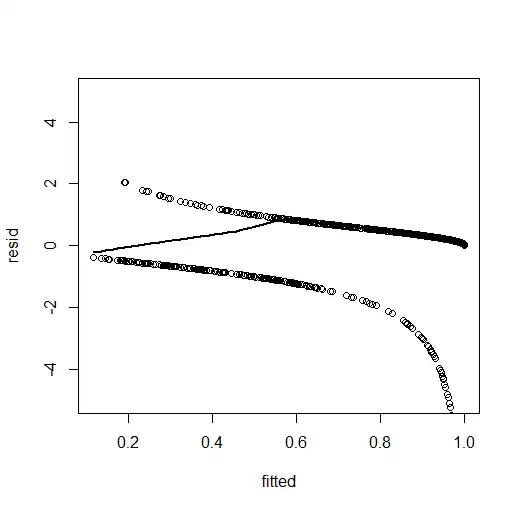

Isn't the issue here that the ROC curve for the decision tree is created based on just one point? Hard to compare between ROC curves when you are not creating them in the same manner – astel Mar 12 '19 at 18:30
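To illustrate the point in this comment, here is a minimal sketch in plain Python on toy data. It assumes, without knowing how the asker actually built the plot, that the tree's curve was drawn from hard 0/1 class predictions while the logistic curve used predicted probabilities. The labels and scores are invented for illustration, and `roc_points` and `auc` are hypothetical helper names.

```python
def roc_points(y_true, scores):
    """Return (FPR, TPR) pairs, one per distinct threshold, starting at (0, 0)."""
    pos = sum(y_true)
    neg = len(y_true) - pos
    points = [(0.0, 0.0)]
    # Sweep thresholds from the highest score down; predict 1 when score >= t.
    for t in sorted(set(scores), reverse=True):
        tp = sum(1 for y, s in zip(y_true, scores) if s >= t and y == 1)
        fp = sum(1 for y, s in zip(y_true, scores) if s >= t and y == 0)
        points.append((fp / neg, tp / pos))
    return points


def auc(points):
    """Area under a piecewise-linear ROC curve (trapezoid rule)."""
    area = 0.0
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        area += (x1 - x0) * (y0 + y1) / 2
    return area


# Toy data, invented for illustration only.
y_true = [0, 0, 0, 0, 0, 0, 1, 1, 1, 1]
probs = [0.05, 0.10, 0.20, 0.30, 0.40, 0.60, 0.35, 0.55, 0.80, 0.90]

# Hard labels: the same scores cut at 0.5, as a class-prediction call would return.
hard = [1 if p >= 0.5 else 0 for p in probs]

curve_probs = roc_points(y_true, probs)
curve_hard = roc_points(y_true, hard)

print("points from probabilities:", len(curve_probs))
print("points from hard labels:  ", len(curve_hard))
print("AUC from probabilities:", auc(curve_probs))
print("AUC from hard labels:  ", auc(curve_hard))
```

With hard labels the curve has a single interior point joined to (0, 0) and (1, 1) by straight lines, so its area reduces to (sensitivity + specificity) / 2 at that one cutoff. Comparing that with a curve traced over many thresholds is not a like-for-like comparison; passing the tree's predicted class probabilities (its leaf proportions) as the scores would give both models a curve built in the same manner.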