Searching through Wikipedia and StackExchange, I learned that, for a sample of $N$ normally distributed values, the variance $\textrm{Var}[s^2]$ of the unbiased sample variance $s^2$ is given by

$$\textrm{Var}[s^2] = \frac{2\sigma^4}{N - 1},$$

where $\sigma^2$ is the true (population) variance of the normal distribution. Since the square root of $\textrm{Var}[s^2]$ measures the typical deviation of $s^2$ from its expected value, is it correct to say that, by error propagation, the deviation of $s = \sqrt{s^2}$ from its expected value is

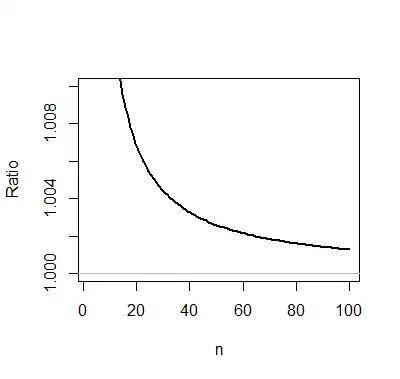

$$\delta s = \frac{\sigma}{\sqrt{2(N -1)}} \quad ?$$
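The propagation step behind this value takes $\delta(s^2) = \sqrt{\textrm{Var}[s^2]} = \sigma^2\sqrt{2/(N-1)}$ and multiplies it by $\mathrm{d}s/\mathrm{d}(s^2) = 1/(2s)$ evaluated at $s = \sigma$, giving $\frac{1}{2\sigma}\cdot\sigma^2\sqrt{\frac{2}{N-1}} = \frac{\sigma}{\sqrt{2(N-1)}}$. The Python sketch below (standard library only; `N = 10`, `SIGMA = 2.0`, the seed and the trial count are arbitrary illustrative choices, not from the question) checks $\textrm{Var}[s^2]$ by Monte Carlo and reproduces that propagation arithmetic.

```python
import math
import random


def unbiased_variance(xs):
    """Sample variance with Bessel's correction (divides by N - 1)."""
    n = len(xs)
    mean = sum(xs) / n
    return sum((x - mean) ** 2 for x in xs) / (n - 1)


def simulate_var_of_s2(n, sigma, trials, seed=0):
    """Monte Carlo estimate of Var[s^2] for samples of size n drawn from N(0, sigma^2)."""
    rng = random.Random(seed)
    s2_values = [
        unbiased_variance([rng.gauss(0.0, sigma) for _ in range(n)])
        for _ in range(trials)
    ]
    return unbiased_variance(s2_values)


N, SIGMA = 10, 2.0  # illustrative values, not taken from the question

var_s2_theory = 2 * SIGMA**4 / (N - 1)
var_s2_mc = simulate_var_of_s2(N, SIGMA, trials=20_000)

# First-order propagation: delta_s ~= delta(s^2) / (2 * sigma),
# i.e. the derivative of sqrt(.) taken at s^2 = sigma^2
delta_s2 = math.sqrt(var_s2_theory)
delta_s_propagated = delta_s2 / (2 * SIGMA)

print(f"Var[s^2]: Monte Carlo {var_s2_mc:.3f}, formula {var_s2_theory:.3f}")
print(f"propagated delta_s = {delta_s_propagated:.4f}, "
      f"sigma/sqrt(2(N-1)) = {SIGMA / math.sqrt(2 * (N - 1)):.4f}")
```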

EDIT: According to this answer, the standard deviation of $s$ is

$$ \delta s = \sigma \sqrt{ 1 - \frac{2}{N-1} \cdot \left( \frac{ \Gamma(N/2) }{ \Gamma( \frac{N-1}{2} ) } \right)^2 }; $$

since this result seems convincing, where is the flaw in my own reasoning?
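To compare the two expressions numerically, here is a Python sketch (standard library only; the sample sizes, seed and trial count are arbitrary illustrative choices). It evaluates the exact formula through `math.lgamma`, the propagated value $\sigma/\sqrt{2(N-1)}$, and a Monte Carlo estimate of the spread of $s$.

```python
import math
import random


def sd_of_s_exact(n, sigma=1.0):
    """Exact SD of s: sigma * sqrt(1 - c4^2), c4 = sqrt(2/(n-1)) * Gamma(n/2) / Gamma((n-1)/2)."""
    # lgamma keeps the Gamma ratio finite for large n
    c4 = math.sqrt(2 / (n - 1)) * math.exp(math.lgamma(n / 2) - math.lgamma((n - 1) / 2))
    return sigma * math.sqrt(1 - c4**2)


def sd_of_s_propagated(n, sigma=1.0):
    """First-order error-propagation estimate sigma / sqrt(2(n-1))."""
    return sigma / math.sqrt(2 * (n - 1))


def sd_of_s_monte_carlo(n, sigma=1.0, trials=40_000, seed=1):
    """Empirical SD of s = sqrt(unbiased sample variance) over repeated samples of size n."""
    rng = random.Random(seed)
    s_values = []
    for _ in range(trials):
        xs = [rng.gauss(0.0, sigma) for _ in range(n)]
        mean = sum(xs) / n
        s_values.append(math.sqrt(sum((x - mean) ** 2 for x in xs) / (n - 1)))
    mean_s = sum(s_values) / trials
    return math.sqrt(sum((s - mean_s) ** 2 for s in s_values) / (trials - 1))


# Monte Carlo only for small N, where the two formulas differ most
mc = {n: sd_of_s_monte_carlo(n) for n in (2, 5, 10)}
for n in (2, 5, 10, 100, 1000):
    exact, approx = sd_of_s_exact(n), sd_of_s_propagated(n)
    mc_text = f"{mc[n]:.4f}" if n in mc else "n/a"
    print(f"N={n:5d}  exact={exact:.4f}  propagated={approx:.4f}  "
          f"ratio={approx / exact:.4f}  MC={mc_text}")
```

For $N = 2$ the exact value is $\sigma\sqrt{1 - 2/\pi} \approx 0.603\,\sigma$, while the propagated one is $\sigma/\sqrt{2} \approx 0.707\,\sigma$. As $N$ grows, their ratio approaches 1. This is consistent with error propagation being a first-order (linearized) approximation that neglects the curvature of $\sqrt{\cdot}$.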