I'm running XGBoost to predict prices on a cars dataset, I was wondering what alternatives are there for this kind of problem where smaller values are overestimated and higher prices underestimated.

I tried applying log to prices since it has a skewed to the right distribution, but still having this undesirable effect.

Also, as a bonus question, log(price) improved the prediction score, the mean relative error or MRE calculated as mean(ABS(RD)) by 2 percent, if anyone has the intuition onto why this could have happened that would be great.

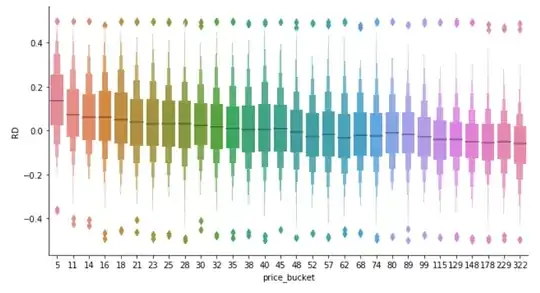

In the image below RD is the relative difference between predictions and the actual values, and the price bucket is a bucketized variable where the number indicates the price low interval bound over 1000.