Going through these lecture notes on the bias-variance trade-off, I didn't follow the latter part of this paragraph:

> It shows the common situation in practice that
>
> (1) for simple models, the bias increases very quickly, while
>
> (2) for complex models, the variance increases very quickly. Since the riskiness is additive in the two, the optimal complexity is somewhere in the middle. Note, however, that these properties do not follow from the bias-variance decomposition, and need not even be true.

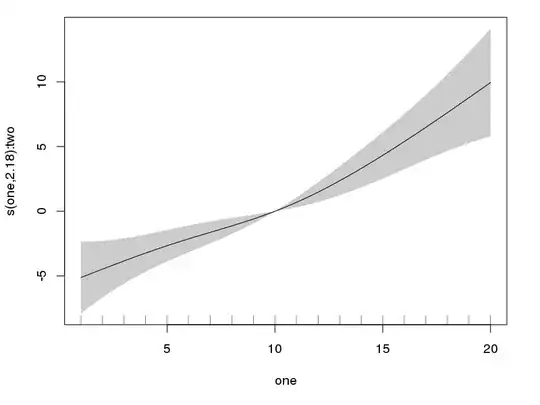

The 'It' in the above paragraph refers to the below image:

Questions:

1) If these are properties, why don't they follow from the bias-variance decomposition, which states that $E[(Y-\hat{f}(x))^2] = \sigma^2 + \text{Bias}[\hat{f}(x)]^2 + \text{Var}(\hat{f}(x))$?

2) Under what conditions are they not true?
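To make the terms in question 1 concrete, here is a minimal Monte Carlo sketch using only the Python standard library. The whole setup is a hypothetical illustration, not something taken from the lecture notes: a true function $f(x) = \sin(2\pi x)$, Gaussian noise with $\sigma = 0.3$, training sets of 50 uniform points, and 1-D $k$-nearest-neighbour regression, where a small $k$ plays the role of a complex (low-bias) model and a large $k$ that of a simple one. For a fixed test point $x_0$, it redraws the training set many times and estimates the expected squared error alongside $\sigma^2$, the squared bias and the variance of $\hat{f}(x_0)$.

```python
import math
import random
import statistics


def true_f(x: float) -> float:
    # Hypothetical ground-truth regression function, chosen for illustration.
    return math.sin(2 * math.pi * x)


def knn_predict(xs, ys, x0, k):
    # 1-D k-nearest-neighbour regression: average the y-values of the
    # k training points closest to x0.
    nearest = sorted(range(len(xs)), key=lambda i: abs(xs[i] - x0))[:k]
    return sum(ys[i] for i in nearest) / k


def decompose(k, x0=0.3, n=50, sigma=0.3, trials=4000, seed=0):
    """Monte Carlo estimates of E[(Y - f_hat(x0))^2] and its three terms."""
    rng = random.Random(seed)
    preds, sq_errors = [], []
    for _ in range(trials):
        # Fresh training set on every trial, so f_hat(x0) is a random variable.
        xs = [rng.random() for _ in range(n)]
        ys = [true_f(x) + rng.gauss(0, sigma) for x in xs]
        f_hat = knn_predict(xs, ys, x0, k)
        # Independent test response Y at x0.
        y0 = true_f(x0) + rng.gauss(0, sigma)
        preds.append(f_hat)
        sq_errors.append((y0 - f_hat) ** 2)
    mean_pred = statistics.fmean(preds)
    return {
        "mse": statistics.fmean(sq_errors),        # E[(Y - f_hat(x0))^2]
        "noise": sigma ** 2,                       # sigma^2 (irreducible)
        "bias_sq": (mean_pred - true_f(x0)) ** 2,  # Bias[f_hat(x0)]^2
        "variance": statistics.pvariance(preds),   # Var(f_hat(x0))
    }


if __name__ == "__main__":
    for k in (1, 5, 20, 50):
        r = decompose(k)
        total = r["noise"] + r["bias_sq"] + r["variance"]
        print(f"k={k:2d}  mse={r['mse']:.3f}  sum of terms={total:.3f}  "
              f"bias^2={r['bias_sq']:.3f}  var={r['variance']:.3f}")
```

In this sketch the decomposition is checked separately at each value of $k$: up to Monte Carlo error, `mse` matches `noise + bias_sq + variance` for every $k$. How quickly `bias_sq` and `variance` move as $k$ changes depends on the assumed $f$, $n$ and $\sigma$, not on the identity itself.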