(Full disclosure, this is a follow-up to this question, which wasn't completely answered on StackOverflow)

The input dataset is a time series of some stock price movement, but it might as well be random. I am trying to configure a Keras neural network to completely overfit (i.e. memorize the data). That's obviously just a first step.

```python
import numpy
import matplotlib.pyplot as plt
import keras
from keras.models import Sequential
from keras.layers import LSTM, Dense

# x and y hold the input series and the targets (loading not shown)
train_gen = keras.preprocessing.sequence.TimeseriesGenerator(
    x,
    y,
    length=10,
    sampling_rate=1,
    batch_size=1,
    shuffle=False
)

model = Sequential()
model.add(LSTM(128, input_shape=(10, 1), return_sequences=False))
model.add(Dense(1))

model.compile(
    loss="mse",
    optimizer="rmsprop",
    metrics=[keras.metrics.mean_squared_error]
)

history = model.fit_generator(
    train_gen,
    epochs=100,
    steps_per_epoch=100
)

# Predict on the training generator and plot the first 100 points
y_pred = model.predict_generator(train_gen)

plot_points = 100
epochs = range(1, plot_points + 1)
pred_points = numpy.resize(y_pred[:plot_points], (plot_points,))
target_points = train_gen.targets[:plot_points]

plt.plot(epochs, pred_points, 'b', label='Predictions')
plt.plot(epochs, target_points, 'r', label='Targets')
plt.legend()
plt.show()
```

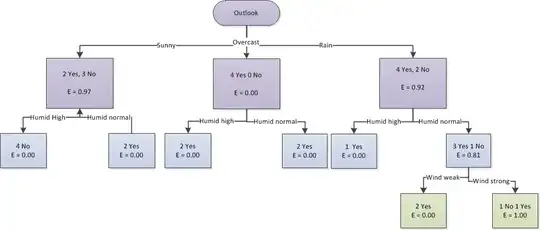

I would expect the network would just memorize such a small dataset (it sees just the first 100 data points thanks to steps_per_epoch=100 if I understand it correctly) and the loss on the training dataset would be pretty much zero. It is not and the vizualization of the results on the training set looks like this:
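One way to remove any doubt about which samples the network sees would be to materialize the first 100 windows as plain arrays instead of relying on steps_per_epoch. Below is a minimal NumPy sketch of that idea. The helper name `make_windows` and the synthetic series are hypothetical stand-ins, not part of my actual code, and the pairing rule (window `i` covers `series[i:i+length]` and is matched with `targets[i+length]`) is my understanding of how TimeseriesGenerator behaves with sampling_rate=1.

```python
import numpy as np

def make_windows(series, targets, length=10, n_windows=100):
    """Build the first n_windows (input, target) pairs.

    Window i covers series[i:i+length] and is paired with
    targets[i+length] (hypothetical helper for illustration).
    """
    series = np.asarray(series, dtype="float32").reshape(-1, 1)
    targets = np.asarray(targets, dtype="float32").reshape(-1)
    if len(series) < n_windows + length:
        raise ValueError("series too short for the requested windows")
    X = np.stack([series[i:i + length] for i in range(n_windows)])
    Y = targets[length:length + n_windows]
    return X, Y

# Synthetic stand-in for the real price series (assumption).
rng = np.random.default_rng(0)
prices = rng.normal(size=500).cumsum()

X, Y = make_windows(prices, prices)
print(X.shape, Y.shape)  # (100, 10, 1) (100,)
```

The resulting `X` and `Y` could then be passed to `model.fit(X, Y, batch_size=1, epochs=100)`, so that every epoch covers exactly the same 100 samples.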

I may be displaying the results wrong because I don't shift targets compared to predictions (see the StackOverflow answer for details). But that doesn't account for the failure to overfit. Once I manage to overfit the model, the shift should be clearly visible in the chart.
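The shift mentioned above comes from how windows are paired with targets: with length=10, the k-th prediction corresponds to `targets[k + 10]`, not `targets[k]`. Here is a small sketch of how the plotted targets could be aligned, assuming `y_pred` has one row per window. The helper `aligned_targets` is hypothetical and the arrays are made-up stand-ins for the real data.

```python
import numpy as np

def aligned_targets(targets, n_points, length=10):
    """Return the targets that line up with the first n_points predictions.

    Prediction k is made from inputs k..k+length-1, so it matches
    targets[k + length] (hypothetical helper for illustration).
    """
    targets = np.asarray(targets).reshape(-1)
    return targets[length:length + n_points]

# Stand-in data: a "perfect" model would output exactly the shifted targets.
targets = np.arange(200, dtype="float32")
y_pred = targets[10:].reshape(-1, 1)

plot_points = 100
pred_points = y_pred[:plot_points].reshape(-1)
target_points = aligned_targets(targets, plot_points)

print(np.array_equal(pred_points, target_points))  # True
```

With the targets sliced this way, a model that has truly memorized the data should produce two curves that lie on top of each other rather than being offset by 10 steps.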

Any ideas why the model doesn't overfit? Thanks.

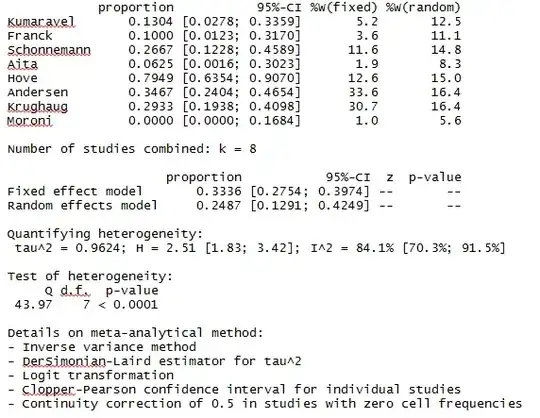

EDIT (Training and validation loss in 100 epochs):