Is there a systematic method for choosing transformations of the independent variables in logistic regression, so that I can conclude that the best possible logistic regression model has been fitted?

Illustration of my question:

I have 2 independent variables (strength and minute, both on a scale of 0 to 100), and the dependent variable is a binary outcome: win or lose.

I have done a logistic regression (in R with glm) to estimate the probabilities.
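
For reference, a sketch of the model this corresponds to, assuming only main effects for the two predictors (the exact formula passed to `glm` is not shown here, so that part is an assumption). With $s$ for strength, $m$ for minute and $p = \Pr(\text{win} \mid s, m)$:

$$
p = \frac{1}{1 + \exp\!\big(-(\beta_0 + \beta_1 s + \beta_2 m)\big)},
\qquad
\log\frac{p}{1-p} = \beta_0 + \beta_1 s + \beta_2 m
$$

The log-odds are linear in $s$ and $m$, so in this form every contour of equal probability is a straight line in the $(s, m)$ plane.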

Since I already have the probabilities (based on an interpolated pivot table from a very large dataset), I can compare these with the probabilities from the logistic regression; see the picture below:

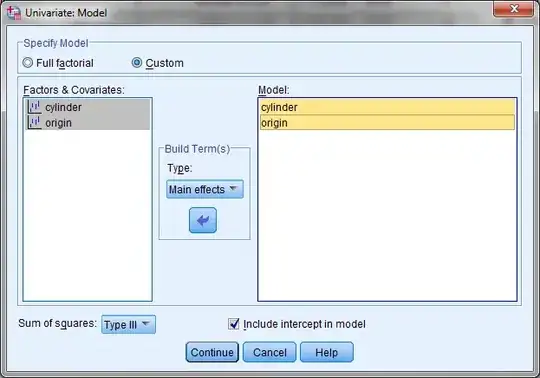

I conclude that the logistic regression does not capture the non-linearity. For that reason I have added several transformations of the independent variables: an interaction term and 2nd, 3rd and 4th powers.
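
To make the extended set of predictors explicit, here is a minimal sketch in Python (the actual analysis was done in R). `expand_terms` is a hypothetical helper, not part of any library. It assumes the powers are applied to both variables, and it rescales the 0 to 100 inputs to 0 to 1 so that high powers stay numerically well behaved; both choices are assumptions made for illustration.

```python
def expand_terms(strength, minute, degree=3):
    """Return [s, m, s*m, s^2, m^2, ..., s^degree, m^degree] for one observation.

    Assumption: inputs are on a 0-100 scale and are rescaled to 0-1 first.
    """
    s, m = strength / 100.0, minute / 100.0
    terms = [s, m, s * m]               # main effects and the interaction term
    for p in range(2, degree + 1):      # 2nd, 3rd, ... powers of each variable
        terms.extend([s ** p, m ** p])
    return terms


# One row of the design matrix for strength 50, minute 20, up to the 3rd power.
row = expand_terms(50, 20, degree=3)
print(len(row), row)
```

Each extra degree adds two columns, so the 4th-power model has nine predictors plus the intercept; the model is still linear in its coefficients, only the inputs are transformed.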

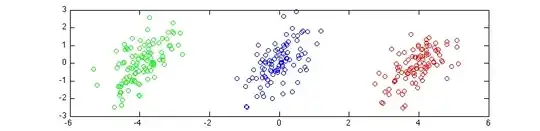

These additions result in a slight improvement in AUC, measured by splitting the dataset and performing cross-validation. Visually, however, the 4th power (red) is a deterioration compared to the 3rd power (green); see the picture showing one vector of the matrix (strength 50), with the corresponding vector from the pivot table subtracted.
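
The two comparisons above measure different things, which may be part of why they disagree: AUC depends only on how the predictions rank wins against losses, while the plot shows how far the fitted probabilities are from the pivot-table probabilities. A minimal sketch of both measures in Python follows; `auc` and `mean_abs_gap` are hypothetical helper names, and the numbers are made up for illustration, not taken from my dataset.

```python
def auc(labels, scores):
    """Share of (win, loss) pairs in which the win gets the higher score.

    Ties count as half a pair. labels are 1 for win and 0 for lose.
    """
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    concordant = sum(
        1.0 if p > n else 0.5 if p == n else 0.0
        for p in pos
        for n in neg
    )
    return concordant / (len(pos) * len(neg))


def mean_abs_gap(model_probs, table_probs):
    """Average absolute difference between two probability vectors."""
    gaps = [abs(a - b) for a, b in zip(model_probs, table_probs)]
    return sum(gaps) / len(gaps)


# Made-up numbers for illustration only.
labels = [0, 0, 1, 1]
scores = [0.1, 0.4, 0.35, 0.8]
squashed = [s ** 3 for s in scores]    # monotone transform: same ranking

print(auc(labels, scores))             # ranking quality of the original scores
print(auc(labels, squashed))           # identical, because the ranking is unchanged
print(mean_abs_gap(scores, squashed))  # yet the probabilities themselves differ
```

So a model can gain slightly in AUC while its probabilities move further from the pivot table along a particular vector, and a distance-based summary such as `mean_abs_gap` would be closer to what the picture shows.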

Do I have to conclude that logistic regression is not flexible enough for my data, or is further improvement possible with other transformations? In the latter case, is there a systematic, structured method for choosing those transformations, so that I can conclude that the best model achievable with logistic regression has been fitted?

PS: if there are any incorrect steps or conclusions above, please let me know.