There are a number of statistics and functions for defining outliers.

Assuming a normal distribution, you can standardize your data according to the sample mean and sample standard deviation. Assign a z-score to each observation.

Given that you have a bivariate data set, and that you want to know the outliers conditional on disk space, I would recommend doing a linear regression.

If you have access to the data, I would recommend doing a residual analysis in R.

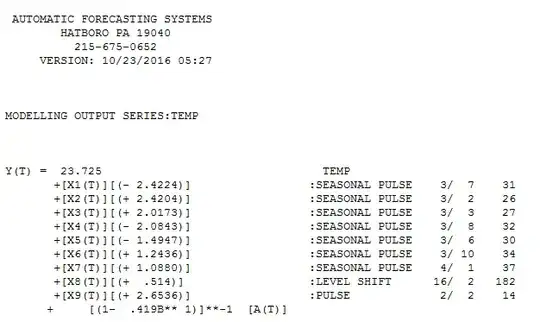

Build a linear model using memory as a function of disk space. The linear model works best under assumptions of normality of variables. Then, you're looking for large positive residuals. (Positive because you're looking for outliers that use a lot more memory.)

library(car)

set.seed(1234)

#generating data

disk <- c(rep(.01, 25), rep(.05, 5), rep(.1, 5))

memory <- rnorm(35, 5, 1)

mydata <- as.data.frame(cbind(disk, memory))

model <- lm(memory ~ disk, data=mydata)

###residual plot

par(mfrow=c(2,2)) #better ordering of graphs

plot(model, which = 1:4)

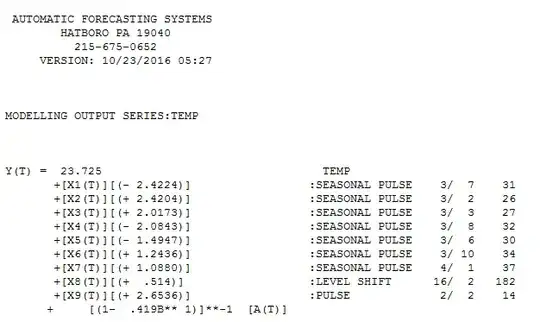

###Order data by largest residual

residuals <- model$residuals

mydata2 <- as.data.frame(cbind(disk, memory, model$residuals))

colnames(mydata2) <- c("disk", "memory", "residuals")

mydata2[order(-residuals),]

Here is the residual plot, qqplot, cooks distance and scale-location.

And here is the output after sorting the data based on descending residual value (so the top values are the largest outliers.

If you're looking for specific statistics, I would consider using Shapiro-Wilk for normality of the distribution, or the outlierTest() function from the car package.