If you truly have a smooth function observed with noise, there are many ways to fit it. Parametric models, linear and nonlinear, are possibilities, but from the way you ask the question it sounds like you are looking for some sort of nonparametric regression. Splines and loess are natural options. In the end, though, you are searching for a minimum. For parametric models, local and global minima can be estimated and confidence intervals constructed for them. For the nonparametric case, Bill Huber described a procedure in his answer to a similar question on CV involving time series data, and I think the idea applies to your problem as well.
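To make the nonparametric route concrete, here is a minimal sketch in Python with NumPy of a loess-style local linear smoother (tricube weights, nearest-neighbour bandwidth), followed by reading off the minimum of the fitted curve on a grid. It is hand-rolled for illustration, not R's `loess` or any library's implementation; the function name, the simulated data, the span `frac`, and the noise level are all assumptions.

```python
import numpy as np

def local_linear_smooth(x, y, grid, frac=0.3):
    """Loess-style local linear fit with tricube weights, evaluated at each point of `grid`."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    n = len(x)
    k = min(n, max(3, int(np.ceil(frac * n))))  # number of neighbours in each local fit
    fitted = np.empty(len(grid))
    for i, x0 in enumerate(grid):
        d = np.abs(x - x0)
        h = np.sort(d)[k - 1]  # bandwidth: distance to the k-th nearest observation
        w = np.clip(1.0 - (d / h) ** 3, 0.0, None) ** 3  # tricube weights, zero at and beyond h
        sw = np.sqrt(w)
        X = np.column_stack([np.ones(n), x - x0])  # local intercept and slope
        beta, *_ = np.linalg.lstsq(X * sw[:, None], y * sw, rcond=None)  # weighted least squares
        fitted[i] = beta[0]  # the intercept is the fitted value at x0
    return fitted

# Hypothetical simulated data: a quadratic with its true minimum at x = 5, plus noise.
rng = np.random.default_rng(1)
x = rng.uniform(0.0, 10.0, size=200)
y = (x - 5.0) ** 2 + rng.normal(scale=2.0, size=200)

grid = np.linspace(0.0, 10.0, 201)
smooth = local_linear_smooth(x, y, grid, frac=0.3)
x_min = grid[np.argmin(smooth)]  # location of the lowest fitted value on the grid
print(f"smoothed curve is lowest at x = {x_min:.2f}")
```

The span `frac` controls the bias/variance trade-off: a smaller span follows the noise more closely, a larger one smooths more, and the location of the grid minimum moves with that choice. The minimum is also only resolved to the grid spacing.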

But the way you pose the problem is strange and contradictory. If you have a model for multivariate data with noise and no way to filter out the noise perfectly, you cannot know exactly where the minimum is. Whatever fitting technique you use, you can only approximate the point where the minimum occurs. If your estimate is 5.8, a confidence interval could, given sufficient data, rule out 5 in a certain statistical sense, but it could never prove that the minimum is at 5, and your data really seem to be telling you otherwise. Maybe you are making an assumption that you haven't told us about.
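One way to see this concretely is a bootstrap percentile interval for the location of the minimum. The sketch below, in Python with NumPy, assumes a quadratic model fitted by least squares with `np.polyfit` and resamples (x, y) pairs with replacement; the helper names are hypothetical, and the simulated data, noise level, and number of resamples are assumptions rather than anything from your question.

```python
import numpy as np

def vertex(x, y):
    """Location -b / (2a) of the minimum of the least-squares parabola a*x**2 + b*x + c."""
    a, b, _ = np.polyfit(x, y, deg=2)
    return -b / (2.0 * a)

def bootstrap_argmin_ci(x, y, n_boot=1000, level=0.95, seed=0):
    """Point estimate and percentile bootstrap interval for the minimizer."""
    rng = np.random.default_rng(seed)
    n = len(x)
    estimates = np.empty(n_boot)
    for i in range(n_boot):
        idx = rng.integers(0, n, size=n)  # resample (x, y) pairs with replacement
        estimates[i] = vertex(x[idx], y[idx])
    alpha = 1.0 - level
    lo, hi = np.quantile(estimates, [alpha / 2.0, 1.0 - alpha / 2.0])
    return vertex(x, y), (float(lo), float(hi))

# Hypothetical simulated data with the true minimum at x = 5.
rng = np.random.default_rng(42)
x = rng.uniform(0.0, 10.0, size=100)
y = (x - 5.0) ** 2 + rng.normal(scale=5.0, size=100)

estimate, (lo, hi) = bootstrap_argmin_ci(x, y)
print(f"estimate {estimate:.3f}, 95% bootstrap interval ({lo:.3f}, {hi:.3f})")
```

If 5 falls outside such an interval, the data argue against a minimum at 5 at that level; if it falls inside, that is consistent with 5 but does not prove it.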

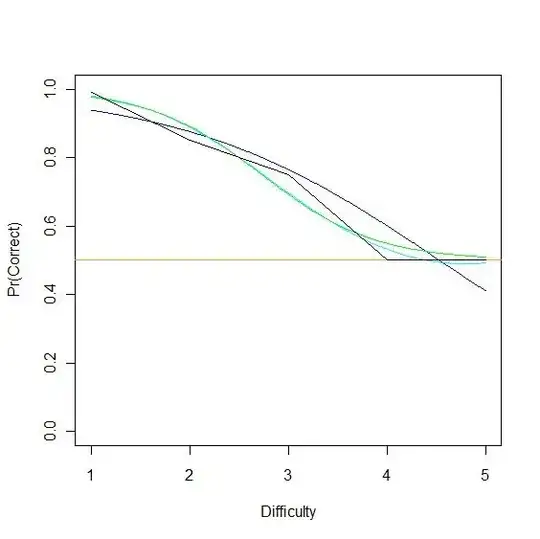

Now that the picture is clear and you have edited your question, I see that since the data are simulated, you know the truth. The true model is a U-shaped quadratic function, so it has a unique global minimum at 5. Even a statistical technique that assumes a quadratic model will, with that much noise, give you an estimate different from 5. Nonparametric models, which make less restrictive assumptions, will probably do worse.
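A quick simulation illustrates the point. The sketch below, in Python with NumPy, draws noisy observations from a quadratic whose true minimum is at 5, fits a quadratic by least squares with `np.polyfit`, and reports the vertex -b/(2a) of the fitted parabola. The sample size, the x range, and the noise level are made-up assumptions, not the values in your question.

```python
import numpy as np

def quadratic_argmin(x, y):
    """Fit y = a*x**2 + b*x + c by least squares and return the vertex -b / (2a)."""
    a, b, _ = np.polyfit(x, y, deg=2)  # coefficients, highest degree first
    if a <= 0:
        raise ValueError("fitted parabola opens downward; it has no minimum")
    return -b / (2.0 * a)

# Hypothetical simulation: the true curve (x - 5)**2 has its minimum exactly at 5.
rng = np.random.default_rng(0)
n = 50
x = rng.uniform(0.0, 10.0, size=n)
y = (x - 5.0) ** 2 + rng.normal(scale=5.0, size=n)

x_hat = quadratic_argmin(x, y)
print(f"estimated minimum at x = {x_hat:.3f}")
```

Even though the fitted model has the correct form, the estimate lands near 5 rather than on it, and rerunning with a different seed moves it around.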

For real data, as I said, you can't perfectly separate the signal from the noise, so you will never get an "exact right" answer. There may be estimators of the minimum that are consistent in a probabilistic sense (converging to the true location as the sample size grows), but none will be exact for any finite sample size.
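To illustrate consistency without exactness, here is a Monte Carlo sketch in Python with NumPy that repeats the least-squares quadratic fit at increasing sample sizes and reports the root-mean-square error of the estimated minimum. The true minimum at 5, the noise level `sigma`, the sample sizes, and the number of replications are assumptions for illustration; the helper names are hypothetical.

```python
import numpy as np

def quadratic_argmin(x, y):
    """Vertex -b / (2a) of the least-squares parabola y = a*x**2 + b*x + c."""
    a, b, _ = np.polyfit(x, y, deg=2)
    return -b / (2.0 * a)

def rmse_of_argmin(n, reps=100, sigma=5.0, seed=0):
    """Monte Carlo root-mean-square error of the vertex estimate at sample size n."""
    rng = np.random.default_rng(seed)
    errors = np.empty(reps)
    for r in range(reps):
        x = rng.uniform(0.0, 10.0, size=n)
        y = (x - 5.0) ** 2 + rng.normal(scale=sigma, size=n)  # true minimum at 5
        errors[r] = quadratic_argmin(x, y) - 5.0
    return float(np.sqrt(np.mean(errors ** 2)))

sizes = [50, 500, 5000, 50000]
rmses = [rmse_of_argmin(n) for n in sizes]
for n, e in zip(sizes, rmses):
    print(f"n = {n:>6}: RMSE of estimated minimum = {e:.4f}")
```

The error shrinks as n grows (for this correctly specified quadratic model, roughly like 1/sqrt(n)) but stays above zero at every finite sample size.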