This is kind of an odd thought I had while reviewing some old statistics and for some reason I can't seem to think of the answer.

A continuous PDF describes how probability is spread over ranges of values. For example, if $X \sim N(\mu,\sigma^2)$, then the probability that a realization falls between $a$ and $b$ is simply $\int_a^{b} f(x)\,dx$, where $f(x) = \frac{1}{\sigma}\phi\left(\frac{x-\mu}{\sigma}\right)$ is the density of $N(\mu,\sigma^2)$ and $\phi$ is the density of the standard normal.

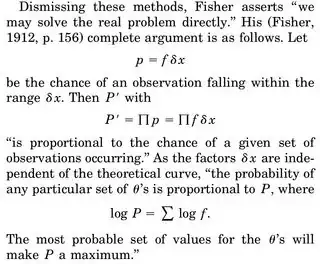
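As a quick sanity check of the "probability is an integral of the density" statement, here is a minimal sketch using only the standard library. The values of `mu`, `sigma`, `a` and `b` are made up for illustration, and the helper names are my own. It computes the interval probability once from the normal CDF (via `math.erf`) and once by numerically integrating the $N(\mu,\sigma^2)$ density.

```python
import math

def normal_cdf(x, mu, sigma):
    """CDF of N(mu, sigma^2), written in terms of the error function."""
    return 0.5 * (1.0 + math.erf((x - mu) / (sigma * math.sqrt(2.0))))

def normal_pdf(x, mu, sigma):
    """Density of N(mu, sigma^2)."""
    z = (x - mu) / sigma
    return math.exp(-0.5 * z * z) / (sigma * math.sqrt(2.0 * math.pi))

def interval_prob(a, b, mu, sigma):
    """P(a < X < b) for X ~ N(mu, sigma^2), from the CDF."""
    return normal_cdf(b, mu, sigma) - normal_cdf(a, mu, sigma)

def midpoint_integral(f, a, b, n=10_000):
    """Midpoint-rule approximation of the integral of f over [a, b]."""
    h = (b - a) / n
    return h * sum(f(a + (i + 0.5) * h) for i in range(n))

# Illustrative values only (not from any real data).
mu, sigma = 1.0, 2.0
a, b = 0.0, 3.0

p_exact = interval_prob(a, b, mu, sigma)
p_numeric = midpoint_integral(lambda x: normal_pdf(x, mu, sigma), a, b)
print(p_exact, p_numeric)  # the two values should agree closely
```

Note that `interval_prob(a, a, mu, sigma)` is 0 for any single point `a`, which is the fact the rest of this question turns on.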

When we compute the MLE of a parameter, say $\mu$, we write the joint density of, say, $N$ random variables $X_1, \dots, X_N$, differentiate the log-likelihood with respect to $\mu$, set it equal to 0, and solve for $\mu$. The interpretation often given is "given the data, which parameter makes this density function most plausible".
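For concreteness, here is how that recipe plays out in the normal case, under two assumptions I am adding for the illustration: the $X_i$ are i.i.d. $N(\mu,\sigma^2)$, and $\sigma$ is known. The joint density evaluated at the observed sample $x_1, \dots, x_N$, viewed as a function of $\mu$, is

$$L(\mu) = \prod_{i=1}^{N} \frac{1}{\sigma\sqrt{2\pi}} \exp\left(-\frac{(x_i-\mu)^2}{2\sigma^2}\right),$$

so the log-likelihood and its derivative are

$$\ell(\mu) = -N\log\left(\sigma\sqrt{2\pi}\right) - \frac{1}{2\sigma^2}\sum_{i=1}^{N}(x_i-\mu)^2, \qquad \frac{d\ell}{d\mu} = \frac{1}{\sigma^2}\sum_{i=1}^{N}(x_i-\mu).$$

Setting the derivative equal to 0 gives $\hat{\mu} = \frac{1}{N}\sum_{i=1}^{N} x_i$, the sample mean. Since $\frac{d^2\ell}{d\mu^2} = -\frac{N}{\sigma^2} < 0$, this is a maximum.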

The part that is bugging me is this: we have a joint density of $N$ r.v.s, and the probability that we get any particular realization, say our sample, is exactly 0. Why does it even make sense to maximize the joint density given our data (since, again, the probability of observing our actual sample is exactly 0)?

The only rationalization I could come up with is that we want to make the PDF as peaked as possible around our observed sample, so that the integral over a small region around it (and therefore the probability of observing data in that region) is as high as possible.
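To check whether that rationalization at least holds up numerically, here is a rough sketch under assumptions of my own: i.i.d. normal data with known `sigma`, a made-up five-point sample, and a small hypothetical box width `eps` around each observation. It compares the $\mu$ that maximizes the joint density with the $\mu$ that maximizes the actual probability of landing within `eps/2` of every observed value.

```python
import math

def normal_cdf(x, mu, sigma):
    """CDF of N(mu, sigma^2), written in terms of the error function."""
    return 0.5 * (1.0 + math.erf((x - mu) / (sigma * math.sqrt(2.0))))

def log_density(sample, mu, sigma):
    """Log of the joint density of an i.i.d. N(mu, sigma^2) sample."""
    const = math.log(sigma * math.sqrt(2.0 * math.pi))
    return sum(-0.5 * ((x - mu) / sigma) ** 2 - const for x in sample)

def log_box_prob(sample, mu, sigma, eps):
    """Log-probability that each X_i lands in [x_i - eps/2, x_i + eps/2]."""
    return sum(
        math.log(normal_cdf(x + eps / 2, mu, sigma)
                 - normal_cdf(x - eps / 2, mu, sigma))
        for x in sample
    )

# Made-up sample and settings, purely for illustration.
sample = [0.3, 1.9, -0.4, 2.2, 1.0]
sigma = 1.0
eps = 1e-3

# Candidate values of mu on a grid from -1.0 to 3.0 in steps of 0.001.
grid = [i / 1000 for i in range(-1000, 3001)]

mu_density = max(grid, key=lambda m: log_density(sample, m, sigma))
mu_box = max(grid, key=lambda m: log_box_prob(sample, m, sigma, eps))
sample_mean = sum(sample) / len(sample)

print(mu_density, mu_box, sample_mean)
```

For small `eps`, the box probability is approximately `eps**N` times the joint density at the sample. The factor `eps**N` does not depend on $\mu$, so the two criteria should pick essentially the same $\mu$, which in this setup is the sample mean.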