A number of similar questions (some of them duplicates) have been asked about interpreting the coefficients from a beta regression, but they seem to focus on models that use the logit link. I have yet to find one that covers the log-log link, and I do not know whether the interpretation is the same.

I have two questions ...

1. I previously posted a question about computing the regression equation from a betareg model that uses the log-log link, and it has been answered. Now I would like to understand how to interpret the coefficients. As stated in that question, I am familiar with interpreting the output of multiple regression models, where the interpretation takes the following form:

Assuming all other factors are held constant, a one unit increase in x is associated with an increase/decrease in y.

I would like to understand how to get from the coefficients in the beta regression output with the log-log link to a similar statement, if such a simple statement is possible. The example output below is the one I used in my previous question.

Call:

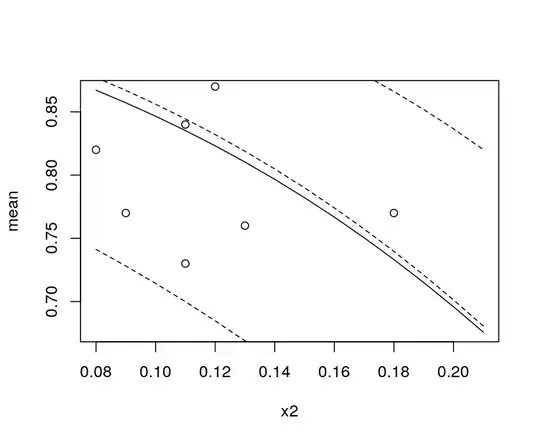

betareg(formula = y ~ x1 + x2, link = "loglog")

Standardized weighted residuals 2:

Min 1Q Median 3Q Max

-1.4901 -0.8370 -0.2718 0.2740 2.6258

Coefficients (mean model with loglog link):

Estimate Std. Error z value Pr(>|z|)

(Intercept) 1.234 1.162 1.062 0.2882

x1 31.814 26.715 1.191 0.2337

x2 -7.776 3.276 -2.373 0.0176 *

Phi coefficients (precision model with identity link):

Estimate Std. Error z value Pr(>|z|)

(phi) 24.39 10.83 2.252 0.0243 *

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

Type of estimator: ML (maximum likelihood)

Log-likelihood: 12.06 on 4 Df

Pseudo R-squared: 0.2956

Number of iterations: 232 (BFGS) + 12 (Fisher scoring)
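To make the question concrete, here is a minimal sketch of how I currently understand the log-log link. It assumes the link is $g(\mu) = -\log(-\log(\mu))$, so that $\mu = \exp(-\exp(-\eta))$ with $\eta = \beta_0 + \beta_1 x_1 + \beta_2 x_2$. The helper name `loglog_inv` and the predictor values are hypothetical, chosen only for illustration and not taken from my data. Under that assumption, $-\log(\mu) = \exp(-\eta)$, so a change of $\Delta$ in $x_2$ would multiply $-\log(\mu)$ by $\exp(-\beta_2 \Delta)$, whereas the change in $\mu$ itself depends on the starting point.

```r
# Coefficients copied from the mean model in the output above
b0 <- 1.234
b1 <- 31.814
b2 <- -7.776

# Inverse of the log-log link, assuming g(mu) = -log(-log(mu))
loglog_inv <- function(eta) exp(-exp(-eta))

# Hypothetical predictor values, for illustration only (not from my data)
x1 <- 0.01
x2 <- 0.20
delta <- 0.1  # hypothetical change in x2

eta0 <- b0 + b1 * x1 + b2 * x2            # linear predictor at the baseline
eta1 <- b0 + b1 * x1 + b2 * (x2 + delta)  # x2 increased by delta, x1 held constant

mu0 <- loglog_inv(eta0)
mu1 <- loglog_inv(eta1)

# The change in mu depends on the baseline; the ratio of -log(mu) does not
ratio <- (-log(mu1)) / (-log(mu0))  # should equal exp(-b2 * delta)
print(c(mu0 = mu0, mu1 = mu1, ratio = ratio))
```

If this reading is right, the only baseline-free statement seems to be about $-\log(\mu)$ rather than about $y$ itself, which is why I am unsure whether a simple phrase exists.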

2. In multiple regression, the relative influence of each predictor can be judged from the size of its standardised coefficient. Is it possible to get a similar insight from the output of a beta regression?

I would appreciate any advice.