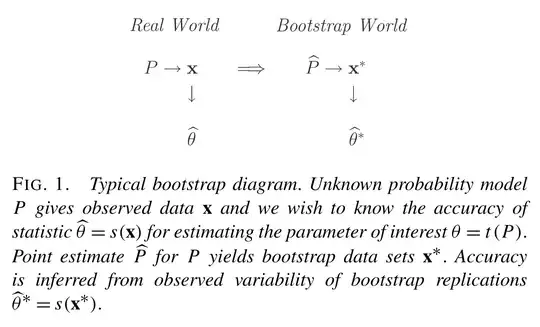

The bootstrap estimates bias by applying the "plug-in" principle to $$E(\hat{\theta}_n) - \theta$$

I got this knowledge from p.124 of Efron, Tibshirani, 1994.

equation(10.1) $\text{bias}_F=E_F[s(\mathbf{x})] -t(F)$ and equation(10.2) $\text{bias}_\hat{F} = E_\hat{F}[s(\mathbf{x}^*)] - t(\hat{F})$

the "plug-in" of the first term has definitive meaning, since $\hat\theta_n$ is a prescribed statistics on the sample. We simply need to take the expectation of it with respect to the empirical distribution.

the "plug-in" of the second term however is rather confusing. Since there are an infinite number of ways to write a given distribution parameter as a functional of the distribution. For example, take $\theta$ to be the decay rate parameter of the exponential distribution, thus $f(X) = \theta e^{-\theta X}$, and one could get $\theta = 1/E(X)$ as well as $\theta = 1/\sqrt{D^2(X)}$, these are different functionals of $f(X)$ and would lead to different plug-in estimate of $\theta$ on finite sample.

This might be a naive question but I hope I've clarified my confusion.

Since I didn't get an answer after half a year, I though maybe I mis-stated the question, So I'll restate it: What's the definition of a "parameter" as repeatedly used in Efron's book? Is it a functional by definition? Or is there a standardized way to write every "parameter" as a functional? Can you give some more examples of a "parameter" (other than the "mean" and "variance")?

Quoted from page 124 of "A introduction to the bootstrap":

... . We want to estimate a real-valued parameter $\theta = t(F)$. For now we will take the estimator to be any statistic $\hat{\theta}=s(x)$