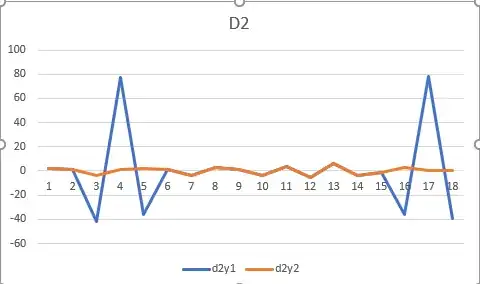

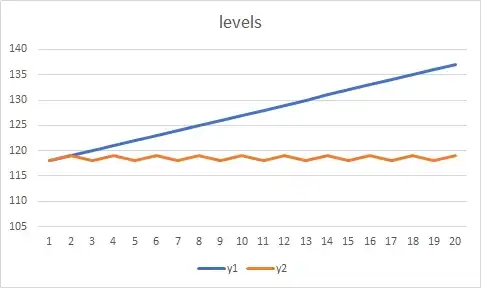

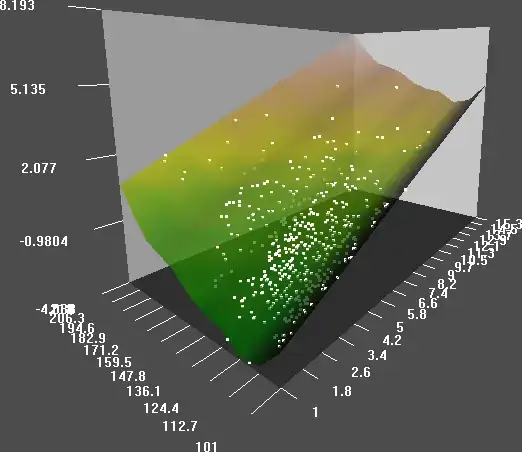

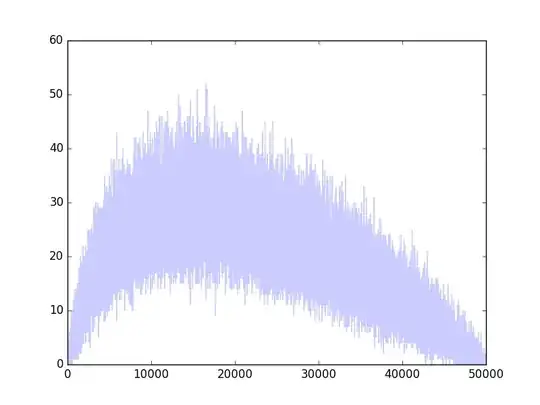

I have an image with artefacts, and I am using a specific process to remove them. I want to show that the process improves the image. To compare the two images, I am using the intensity data from one specific row of each.

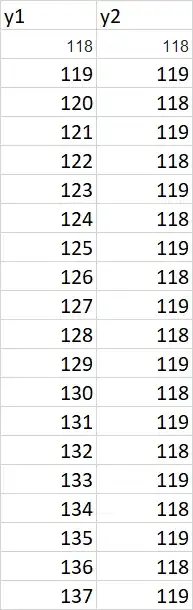

This is the data (the intensity along one specific row) before the process:

y1=[118 117 118 120 80 117 118 120 118 119 121 119 121 118 121 120 118 80 120 121]

And this is the data after the process:

y2=[118 117 118 120 118 117 118 120 118 119 121 119 121 118 121 120 118 119 120 121]

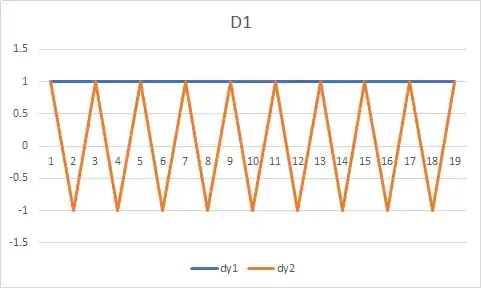

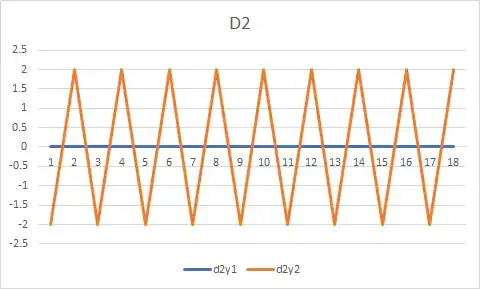

The data above are only a sample; the real rows contain tens of thousands of data points. I know there are ways to show that two datasets are different, but what I want to show is that the second dataset is smoother, and therefore that the new image has been improved.
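To make "smoother" concrete, here is a minimal sketch of one candidate measure: the total variation of a row, i.e. the sum of absolute differences between neighbouring pixels. This is only an illustration in Python using the two sample rows above. The function names `total_variation` and `mean_abs_step` are my own, and whether this measure is appropriate for real image data is an assumption, not something I have validated.

```python
def total_variation(row):
    """Sum of absolute differences between neighbouring samples.

    Lower values mean fewer or smaller jumps along the row.
    """
    return sum(abs(b - a) for a, b in zip(row, row[1:]))


def mean_abs_step(row):
    """Total variation divided by the number of steps, so that rows of
    different lengths can be compared on the same scale."""
    return total_variation(row) / (len(row) - 1)


# Sample rows from the question (before and after the process).
y1 = [118, 117, 118, 120, 80, 117, 118, 120, 118, 119,
      121, 119, 121, 118, 121, 120, 118, 80, 120, 121]
y2 = [118, 117, 118, 120, 118, 117, 118, 120, 118, 119,
      121, 119, 121, 118, 121, 120, 118, 119, 120, 121]

print("before:", total_variation(y1), mean_abs_step(y1))
print("after: ", total_variation(y2), mean_abs_step(y2))
```

On the sample rows this gives a total variation of 181 before and 31 after, so the two dips to 80 dominate the "before" value. A measure like this also drops if the process simply blurs real detail, so a lower number on its own would not prove that the image has improved.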

Is anyone aware of such a metric?