I have a little project for which I have to estimate parameters on a PSF (Point Spread Function = response of the system to a dirac, i.e a star in my case).

I have the 6 parameters to estimate : $p=(\theta=[a,b]$, $\nu=[r_0,c_0,\alpha,\beta])$ :

$$\text{PSF}(r,c) = \bigg(1 + \dfrac{r^2 + c^2}{\alpha^2}\bigg)^{-\beta}$$

and the modeling :

$$d(r,c) = a \cdot \text {PSF}_{\alpha,\beta} (r-r_0,c-c_0) + b + \epsilon (r, c)$$

with $\epsilon$ being a white gaussian noise.

This is the matrix form :

$$\begin{bmatrix}d(1,1) \\ d(1,2) \\ d(1,3) \\ \vdots \\ d(20,20) \end{bmatrix} = \begin{bmatrix} \text{PSF}_{\alpha,\beta}(1-r_0,1-c_0) & 1 \\ \text{PSF}_{\alpha,\beta}(1-r_0,2-c_0) & 1 \\ \text{PSF}_{\alpha,\beta}(1-r_0,3-c_0) & 1 \\ \vdots & \vdots \\ \text{PSF}_{\alpha,\beta}(20-r_0,20-c_0) & 1 \end{bmatrix} \times \begin{bmatrix}a \\ b \end{bmatrix} + \begin{bmatrix}\epsilon(1,1) \\ \epsilon(1,2) \\ \epsilon(1,3) \\ \vdots \\ \epsilon(20,20) \end{bmatrix}\\$$

So we can write for the 1D data vector "d" :

$$d=H(\nu)\,\theta + \epsilon$$ with $H$ the matrix defined above.

Our Teacher told us to start from a posterior distribution $f(p|d)$ (from what I have understood, the density function to get $p$ parameters knowing the data).

I have to give this expression and its logarithmic form $\text{log(f(p|d)}$ as a function of cost function $J_{\theta,\nu}$ (I have already implement this cost function but for Maximum Likelihood and after, I used "fminsearch" Matlab function to find best parameters).

Question 1) Someone could help me to find the expression of this posterior ditribution $f(p|d)$ in my case ?

Question 2) I have to use the Metropolis Hastings algorithm to find the estimations of the 6 parameters above ( $p=\theta=[a,b]$, $\nu=[r_0,c_0,\alpha,\beta]$)

I know this algorithm can generate samples following distributions but how to make the link with the estimations of parameters contained into $d$ parameters vector ($p=[a,b,r_0,c_0,\alpha,\beta]$ ?

Feel free to ask me further informations if you don't understand my concrete example. Technically, it doesn't seem to be very complex but I am a beginner into estimations parameters with MCMC methods.

Any help or suggestion is welcome.

UPDATE 1 :

Question 1) I have found the expression of $f(p|d)$ :

$$f(p|d) = \dfrac{f(p)\,f(d|p)}{\int_{p}f(d|p)\,\text{d}p}$$

We can also write :

$$f(p|d) \propto \text{Likelihood(d|p)}\,f(p)\quad(1)$$

with $f(p)$ the prior function (that I can take as uniform distribution).

Here's an example of code (R language) from this link with the estiation of a parameter $\theta$ :

x <- rexp(100)

N <- 10000

theta <- rep(0,N)

theta[1] <- cur_theta <- 1 # Starting value

for (i in 1:N) {

prop_theta <- runif(1,0,5) # "Independence" sampler

alpha <- exp(sum(dexp(x,prop_theta,log=TRUE)) - sum(dexp(x,cur_theta,log=TRUE)))

if (runif(1) < alpha) cur_theta <- prop_theta

theta[i] <- cur_theta

}

hist(theta)

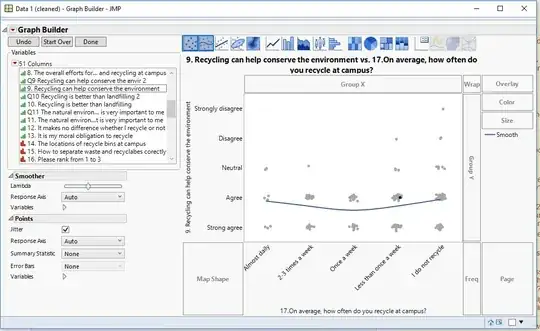

and the histogram gives the best estimation of parameter $\theta$ :

From what I have found on stackexchange forums : I can take a uniform prior density for $f(p)$ and After, I can do sampling following the distribution $f(d|p)$.

If I take the product $f(d|p)\times f(p)$ and once $p$ is given, I plot the histogram for a large number of parameters generated $p$ (correspondig to $f(d|p$), we can see the peak on histogram which will indicate me the best parameter which fits the data $d$ : is the method correct ?

Last question : Why does our teacher asked us to use the cost function used in previous Likelihood method used whith "fminsearch" ?

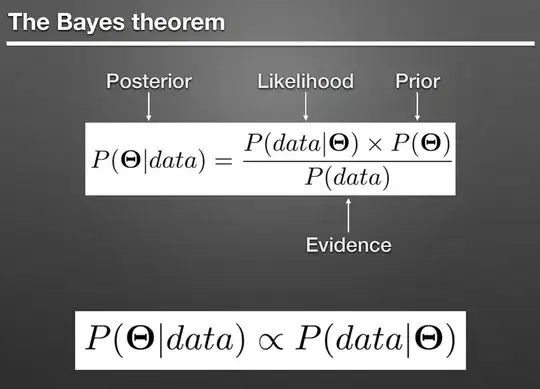

UPDATE 2: Maybe I did confusions in the expression of posterior probability. Indeed, concerning the Bayes theorem, I saw this :

1) Is $p(data)$ a normalization factor ? (is it taken as $p(data)=1$ ?)

2) Is $p(p)=p(\theta)$ always taken as uniform distribution (maybe to simplify the expression of $p(p|d)$) ?

3) How to justify that we can chose a likelihood for $p(d|p)$ ? and morever, from what I have seen, it is often chosen as a gaussian likelihood, isn't it ?

UPDATE 3: Are my questions bad formulated ? since I don't manage to get answers/advices. I hope that someone will help me.

Regards