I was working on a machine learning project when I realized I had a question. It is not a programming, statistics, or probability question, but a pure mathematics question, so I think it deserves a place on this site.

EXPLANATION:

My current project is a time series forecasting problem whose purpose is to predict price movements. Currently, the predictions are decent, but I think there is still room for improvement. To tackle the problem, we use a deep neural network. More precisely, it is a regression problem solved with an LSTM (long short-term memory, a type of recurrent neural network). So we need features and a target. For good reasons, we don't want to use the price directly as the target, but its log-return instead, i.e. $\log(\frac{P_t}{P_{t-1}})$, where $P_t$ and $P_{t-1}$ are two consecutive prices.
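As a minimal sketch in Python with NumPy (the question does not show the project's code, so the function name `log_returns` is hypothetical), the log-return of a price series is the first difference of the log-prices:

```python
import numpy as np

def log_returns(prices: np.ndarray) -> np.ndarray:
    """Return log(P_t / P_{t-1}) for t = 1..n-1; the first price has no predecessor."""
    prices = np.asarray(prices, dtype=float)
    if np.any(prices <= 0):
        raise ValueError("log-returns require strictly positive prices")
    # np.diff(np.log(p)) is mathematically equal to np.log(p[1:] / p[:-1])
    return np.diff(np.log(prices))

prices = np.array([100.0, 101.0, 101.0, 99.0])
print(log_returns(prices))
```

Two consecutive identical prices give a log-return of exactly `0.0`, and the output is one element shorter than the input.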

For good reasons, we decided to apply the log-return transform to the features as well as to the price. For the sake of this question, define the feature log-return $\log(\frac{F_t}{F_{t-1}})$, where $F_t$ and $F_{t-1}$ are two consecutive values of a feature.
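The same transform can be applied to every feature column at once. This sketch assumes a hypothetical time-major array (one row per time step, one column per feature, i.e. the transpose of the feature matrix written later in the question) and strictly positive raw features, since the logarithm is undefined otherwise:

```python
import numpy as np

# Hypothetical raw feature table: one row per time step, one column per feature.
raw_features = np.array([
    [10.0, 2.0, 5.0],
    [10.0, 2.0, 5.5],
    [11.0, 2.0, 5.5],
])

def feature_log_returns(features: np.ndarray) -> np.ndarray:
    """Log-return of every feature column: log(F_t / F_{t-1}) along the time axis."""
    features = np.asarray(features, dtype=float)
    if np.any(features <= 0):
        raise ValueError("log-returns need strictly positive feature values")
    # axis=0 differences consecutive rows, i.e. consecutive time steps
    return np.diff(np.log(features), axis=0)

lr_features = feature_log_returns(raw_features)
print(lr_features.shape)  # (2, 3): one time step is lost, as for the price
```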

Because the features and the price are very often stable, many log-returns are zero, and the model tends to predict values close to zero. I would therefore like to carefully remove useless, irrelevant data. Let me describe what I need.

Suppose we have a set of consecutive times $\{t_1, t_2, \ldots, t_n\}$ where the log-returns satisfy $LR_{t_1} = LR_{t_2} = \ldots = LR_{t_n} = 0$. For each time $t_i$, $i \in \{1, \ldots, n\}$, let $F^i = (F^i_1, \ldots, F^i_m)$ denote the normalized features at that time (i.e. each feature takes a value between 0 and 1).
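The question does not say how the features are normalized. Assuming min-max scaling (one common choice), each feature column can be mapped to $[0, 1]$ as in this sketch; `min_max_normalize` is a hypothetical helper and the arrays are time-major (one row per time step):

```python
import numpy as np

def min_max_normalize(features: np.ndarray) -> np.ndarray:
    """Rescale each feature column to [0, 1] via (x - min) / (max - min)."""
    features = np.asarray(features, dtype=float)
    col_min = features.min(axis=0)
    col_range = features.max(axis=0) - col_min
    # A constant column has zero range; divide by 1 instead so it maps to 0.
    safe_range = np.where(col_range > 0, col_range, 1.0)
    return (features - col_min) / safe_range

example = np.array([[0.0, 5.0], [0.01, 5.0], [-0.01, 5.0]])
print(min_max_normalize(example))
```

In a forecasting setting, the minimum and maximum are usually computed on the training period only, so that no information from the future leaks into the scaling.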

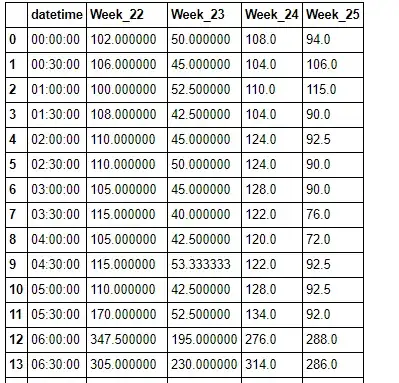

Now consider the following matrix of normalized features for the times $\{t_1, t_2, \ldots, t_n\}$, where column $i$ contains the features at time $t_i$:

$\quad \begin{pmatrix} F^1_1 & F^2_1 & \ldots & F^i_1 & F^j_1 & \ldots & F^n_1 \\ F^1_2 & F^2_2 & \ldots & F^i_2 & F^j_2 & \ldots & F^n_2 \\ \vdots & \vdots & \ldots & \vdots & \vdots & \ldots & \vdots \\ F^1_m & F^2_m & \ldots & F^i_m & F^j_m & \ldots & F^n_m \\ \end{pmatrix} \quad$

Since $F^i$ and $F^j$ are consecutive columns (i.e. $j = i + 1$), it is clear that if $F^i = (F^i_1, \ldots, F^i_m) = (F^j_1, \ldots, F^j_m) = F^j$, then we can remove the price $P_{t_i}$ and its associated features $(F^i_1, \ldots, F^i_m)$ without losing any information.
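A minimal NumPy sketch of this exact-duplicate rule, assuming time-major arrays (one row per time $t_i$) that already belong to a run of zero log-returns; `repeated_row_mask` is a hypothetical helper that returns a boolean mask to apply to both the features and the prices:

```python
import numpy as np

def repeated_row_mask(features: np.ndarray) -> np.ndarray:
    """Boolean keep-mask: False at t_i when row i equals row i + 1 exactly."""
    features = np.asarray(features)
    # Exact float comparison, matching the "identical features" case only
    same_as_next = np.all(features[:-1] == features[1:], axis=1)  # length n - 1
    # The last row has no successor, so it is always kept
    return np.append(~same_as_next, True)

features = np.array([[0.2, 0.5], [0.2, 0.5], [0.3, 0.5], [0.3, 0.5]])
prices = np.array([100.0, 100.0, 100.0, 100.0])
keep = repeated_row_mask(features)
features_kept, prices_kept = features[keep], prices[keep]
```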

Now, I would like to extend this to removing prices and their associated features when the features are similar rather than strictly identical. I have thought of using the entropy or the mean squared error (MSE) to define a similarity measure, but this is where I need a bit of help.

A first attempt: if I use the MSE, we can predefine an $\epsilon$ such that if $\mathrm{MSE}(F^i, F^j) = \frac{1}{m}\sum_{k=1}^{m} \left(F^i_k - F^j_k\right)^2 \leq \epsilon$, then we remove the price and the associated features for time $t_i$. Another idea would be to work with the standard deviation and a confidence interval.
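The MSE criterion can be sketched the same way. This is an illustration under assumptions, not a tuned method: rows are time-major, they already belong to a run of zero log-returns, and `similar_row_mask` and `eps` are hypothetical names. The function also returns the consecutive MSE values, which can be inspected when choosing $\epsilon$:

```python
import numpy as np

def similar_row_mask(features: np.ndarray, eps: float):
    """Keep-mask that drops time t_i when MSE(F^i, F^{i+1}) <= eps."""
    features = np.asarray(features, dtype=float)
    # Mean squared difference over the m features between each row and the next
    mse_next = np.mean((features[:-1] - features[1:]) ** 2, axis=1)  # length n - 1
    # Drop t_i if it is within eps of its successor; always keep the last row
    keep = np.append(mse_next > eps, True)
    return keep, mse_next

features = np.array([
    [0.20, 0.50],
    [0.21, 0.50],
    [0.60, 0.10],
])
prices = np.array([100.0, 100.0, 100.0])
keep, mse_next = similar_row_mask(features, eps=1e-3)
features_kept, prices_kept = features[keep], prices[keep]
```

With `eps=0.0` the rule reduces to the exact-equality case, since the MSE is zero only when the two feature vectors are identical. Because each row is compared with its original successor rather than with the last row kept, a slow drift can remove a long run of rows whose first and last vectors differ substantially; comparing against the last kept row is an alternative. Since the features lie in $[0, 1]$, $\epsilon$ is expressed in squared normalized units.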

QUESTION:

I am looking for a method to measure the similarity of the features at two or more consecutive times. Any ideas? If my method is sound (which I doubt!), how can I choose the right $\epsilon$?

UPDATE

Why did I decide to use log-returns rather than prices to model financial data in time series analysis?

Basically, prices usually have a unit root, while returns can be assumed to be stationary. This is related to the order of integration: a series with a unit root is integrated of order $1$, $I(1)$, while a stationary series is integrated of order $0$, $I(0)$. Stationary time series have many convenient properties for analysis. When a time series is non-stationary, its moments change over time. For prices, for instance, the mean and variance would both depend on the previous period's price. Taking the percent change (or the log difference) usually removes this effect.
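To illustrate the unit-root argument, here is a small simulation under an explicit assumption: the log-price is modeled as a Gaussian random walk (an $I(1)$ process), which is a textbook example rather than a model of the project's data. The cross-sectional variance of the level grows with time, whereas the variance of its first difference, the log-return, stays constant:

```python
import numpy as np

# Hypothetical simulation: log-prices follow a Gaussian random walk (unit root).
rng = np.random.default_rng(seed=0)
n_paths, n_steps, sigma = 2000, 500, 0.01

shocks = rng.normal(0.0, sigma, size=(n_paths, n_steps))
log_prices = np.log(100.0) + np.cumsum(shocks, axis=1)  # levels: I(1)
log_ret = np.diff(log_prices, axis=1)                    # first differences: I(0)

# Variance across paths of the level grows roughly linearly with time...
var_levels_early = log_prices[:, 49].var()
var_levels_late = log_prices[:, 499].var()

# ...while the variance of the log-returns stays close to sigma**2 at every step.
var_returns_early = log_ret[:, 49].var()
var_returns_late = log_ret[:, 498].var()

print(f"level variance:  step 50 {var_levels_early:.5f}, step 500 {var_levels_late:.5f}")
print(f"return variance: step 50 {var_returns_early:.6f}, step 499 {var_returns_late:.6f}")
```

With these settings the level variance at the 500th step is about ten times the one at the 50th, while both return variances stay close to `sigma**2`.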