I am trying to understand the Transformer model from "Attention Is All You Need", following The Annotated Transformer.

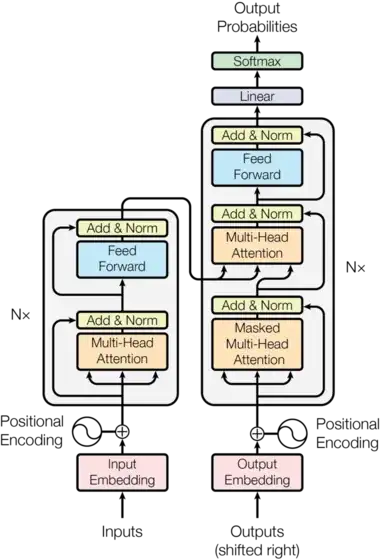

The architecture looks like this:

Everything is essentially clear, save for the output embedding on the bottom right. During training, I understand that one can feed the actual target as the decoder input; all one needs to do is

- shift the target by one position to the right

- use a mask to prevent using, say, the $(n+k)$-th word of the output to learn the $n$-th one
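
To make sure I have the training side right, here is a minimal sketch of the two steps above in plain Python. The token ids, the `BOS`/`EOS` values and the function names are my own illustrative assumptions, not code taken from the paper or from the annotated transformer.

```python
# Hypothetical special-token ids, chosen only for this illustration.
BOS, EOS = 1, 2


def shift_right(target, bos=BOS):
    """Build the decoder input: prepend a start token and drop the last token."""
    return [bos] + target[:-1]


def subsequent_mask(size):
    """mask[i][j] is True when position i is allowed to attend to position j.

    Position i may only look at positions j <= i, so later words stay hidden.
    """
    return [[j <= i for j in range(size)] for i in range(size)]


target = [5, 6, 7, EOS]               # token ids of the true output sentence
decoder_input = shift_right(target)   # what is fed to the output embedding
mask = subsequent_mask(len(target))   # lower-triangular attention mask

for row in mask:
    print(["x" if allowed else "." for allowed in row])
```

With this setup, the decoder at position $n$ sees the start token plus the first $n-1$ target words and is trained to predict the $n$-th target word.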

What is not clear to me is how to use the model at inference time. At inference, one of course does not have the target output, so what goes into the output embedding?