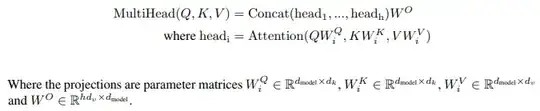

I have been trying to understand the transformer network and specifically the multi-head attention bit. So, as I understand it that multiple attention weighted linear combination of the input features are calculated.

My question is what stops the network from learning the same weights or linear combination for each of these heads i.e. basically making the multiple head bit redundant. Can that happen? I am guessing it has to happen for example in the trivial case where the translation only depends on the word in the current position?

I also wonder if we actually use the full input vector for each of the heads. So, imagine my input vector is of length 256 and I am using 8 heads. Would I divide my input into $256 / 8 = 32$ length vectors and perform attention on each of these and concatenate the results or do I use the full vector for each of these and then combine the results?