Putting aside the pros and cons of Extreme Learning Machines (ELMs), there is something pretty fundamental that I don't understand: why are the weights and biases in the hidden layer randomised?

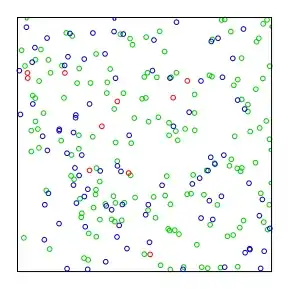

Would it not be better to span the weight-bias space with ordered values, so that we can be sure all of it is covered? Randomised weights and biases might leave some regions of the space sparsely populated and thus compromise the outcome, i.e. there may be no node in a region where it would be beneficial to have one.

Compare the two figures at the top-right of the Sobol sequence Wikipedia page, for instance. The pseudorandom sequence produces a lot of empty regions in comparison with the Sobol sequence.

Sobol Sequence

Images by Jheald [CC BY-SA 3.0 (https://creativecommons.org/licenses/by-sa/3.0)], from Wikimedia Commons
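To make the concern concrete, here is a minimal sketch that counts empty grid cells for 256 pseudorandom points versus the first 256 points of an unscrambled 2-D Sobol sequence. The helper names (`sobol_2d`, `empty_cells`), the seed and the 16×16 grid are my own choices for illustration, not part of any ELM implementation. The grid is deliberately aligned with the Sobol construction, so this is the best case for Sobol. Each point in the unit square stands in for one (weight, bias) pair after rescaling to whatever range the hidden layer uses.

```python
import random


def sobol_2d(n_bits):
    """Return the first 2**n_bits points of the unscrambled 2-D Sobol sequence."""
    n = 1 << n_bits
    # Dimension 1: direction numbers 1/2, 1/4, ... stored as integers scaled by n.
    v1 = [1 << (n_bits - k) for k in range(1, n_bits + 1)]
    # Dimension 2: m_k = m_{k-1} XOR 2*m_{k-1}, giving 1, 3, 5, 15, 17, ...
    m = [1]
    for _ in range(n_bits - 1):
        m.append(m[-1] ^ (m[-1] << 1))
    v2 = [m[k - 1] << (n_bits - k) for k in range(1, n_bits + 1)]

    points = []
    for i in range(n):
        x = y = 0
        for k in range(n_bits):
            if (i >> k) & 1:  # XOR in the direction number for each set bit of i
                x ^= v1[k]
                y ^= v2[k]
        points.append((x / n, y / n))
    return points


def empty_cells(points, grid):
    """Count cells of a grid x grid partition of [0, 1)^2 that contain no point."""
    occupied = {(int(x * grid), int(y * grid)) for x, y in points}
    return grid * grid - len(occupied)


rng = random.Random(0)  # fixed seed so the comparison is repeatable
sobol_pts = sobol_2d(8)  # 256 points
random_pts = [(rng.random(), rng.random()) for _ in range(len(sobol_pts))]

sobol_empty = empty_cells(sobol_pts, 16)
random_empty = empty_cells(random_pts, 16)
print("empty cells, Sobol:", sobol_empty)
print("empty cells, pseudorandom:", random_empty)
```

With this grid the Sobol points land exactly one per cell, whereas independent uniform draws are expected to leave roughly a third of the cells empty. That says nothing by itself about whether better coverage actually improves an ELM's fit, which is the part I am asking about.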