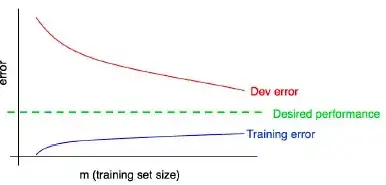

I'm really puzzled... I’ve learned, and observed myself, that training loss (error) increases with training set size, as stated in Dr Andrew Ng’s ML course.

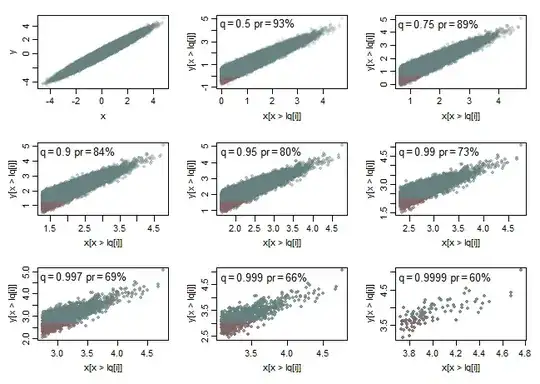

I’ve recently run into an anomaly: both the training loss and the validation loss curves decreased as the training data size increased. The plot was produced only after all training had finished. To be specific, I trained 10 different DNN models, each on a larger quantity of data.

I suspect that Dr Ng’s drawing assumes that the number of data points is already pretty large. The drawing does not show the training error curve for small training sets. Maybe in that region, for a high-capacity DNN, both training loss and validation loss can decrease together as the training data size increases. Maybe, maybe not... I don't really know...

That said, I do get the behavior Dr Ng describes with small classical machine learning models. Both experiments use the same code, though with different data; I just have to replace the model.
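For reference, here is a minimal sketch of the kind of experiment described above, using a low-capacity model. It is not my actual code: the synthetic data, the closed-form line fit standing in for a "small classical model", and all the function names (`fit_line`, `make_data`, `learning_curve`) are assumptions made up for illustration. A fresh model is fitted for each training set size, and both losses are measured only after fitting is done.

```python
import random


def fit_line(xs, ys):
    """Closed-form least-squares fit of y = a * x + b."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    a = sxy / sxx
    b = mean_y - a * mean_x
    return a, b


def mse(a, b, xs, ys):
    """Mean squared error of the line (a, b) on the given points."""
    return sum((a * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def make_data(n, rng):
    """Hypothetical data: y = 2x + 1 plus Gaussian noise (std 0.5)."""
    xs = [rng.uniform(-1.0, 1.0) for _ in range(n)]
    ys = [2.0 * x + 1.0 + rng.gauss(0.0, 0.5) for x in xs]
    return xs, ys


def learning_curve(sizes, n_val=1000, n_repeats=200, seed=0):
    """Return (size, mean train MSE, mean validation MSE) per size."""
    rng = random.Random(seed)
    x_val, y_val = make_data(n_val, rng)  # fixed validation set
    rows = []
    for n in sizes:
        train_total = 0.0
        val_total = 0.0
        # Average over several random training sets to smooth the curve.
        for _ in range(n_repeats):
            x_tr, y_tr = make_data(n, rng)
            a, b = fit_line(x_tr, y_tr)  # a fresh model for every run
            train_total += mse(a, b, x_tr, y_tr)
            val_total += mse(a, b, x_val, y_val)
        rows.append((n, train_total / n_repeats, val_total / n_repeats))
    return rows


curve = learning_curve([2, 4, 8, 16, 32, 64, 128])
for n, train_loss, val_loss in curve:
    print(f"n={n:4d}  train MSE={train_loss:.4f}  val MSE={val_loss:.4f}")
```

With only two parameters, the line fits two points exactly, so the training loss starts near zero and climbs toward the noise level as more points are added, while the validation loss comes down. That is the classical picture; it says nothing by itself about what a high-capacity DNN does on small training sets.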