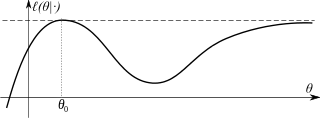

One more example of a non-unique maximum likelihood estimator:

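To estimate the location parameter $\mu$ of the Laplace distribution by maximum likelihood, note that the log-likelihood is $\ell(\mu, b) = -n\log(2b) - \frac{1}{b}\sum_{i=1}^n |x_i - \mu|$. So for any fixed scale $b$ you need to minimize $\sum_{i=1}^n |x_i - \mu|$. Wherever the derivative exists (that is, where $\hat{\mu}$ does not coincide with an observation), this means finding a value $\hat{\mu}$ such that: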

$$ \sum_{i=1}^n \frac{|x_i - \hat{\mu}|}{x_i - \hat{\mu}} = 0$$

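Each term equals $+1$ if $x_i > \hat{\mu}$ and $-1$ if $x_i < \hat{\mu}$. The condition therefore asks for an estimate $\hat{\mu}$ such that the number of observations below $\hat{\mu}$ equals the number above it.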
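A minimal sketch of the two quantities involved, assuming a known scale $b$ (set to 1 here, since it does not change where the maximum in $\mu$ lies). The helper names `laplace_loglik` and `score_sign_sum` are illustrative, not from any library. `score_sign_sum` counts observations above minus observations below, with the assumed convention that an observation exactly at $\mu$ contributes 0.

```python
import math

def laplace_loglik(mu, xs, b=1.0):
    """Laplace log-likelihood: -n*log(2b) - sum(|x_i - mu|)/b."""
    n = len(xs)
    return -n * math.log(2 * b) - sum(abs(x - mu) for x in xs) / b

def score_sign_sum(mu, xs):
    """Sum of sign(x_i - mu), i.e. (# above mu) - (# below mu).

    Matches sum |x_i - mu| / (x_i - mu) whenever no x_i equals mu;
    an observation exactly at mu is counted as 0 (assumed convention).
    """
    return sum((x > mu) - (x < mu) for x in xs)

xs = [1.0, 2.0, 4.0, 7.0]
print(score_sign_sum(3.0, xs))  # 0: two observations above, two below
print(score_sign_sum(1.5, xs))  # 2: three above, one below
```

The score is (up to the factor $1/b$) the derivative of `laplace_loglik` in $\mu$, so a zero of `score_sign_sum` is a stationary point of the log-likelihood.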

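Clearly, for even $n$ the solution is not unique: any $\hat{\mu}$ strictly between the two central order statistics $x_{(n/2)}$ and $x_{(n/2+1)}$ satisfies the condition. In fact $\sum_i |x_i - \mu|$ is constant on the whole interval $[x_{(n/2)}, x_{(n/2+1)}]$, so every point in it maximizes the likelihood. The solution is unique only if these two central observations are equal.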
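To see the flat region concretely, here is a small sketch on a made-up sample with $n = 4$, whose central order statistics are $x_{(2)} = 2$ and $x_{(3)} = 4$. The data and the helper name `sum_abs_dev` are hypothetical. Every $\mu$ in $[2, 4]$ gives the same sum of absolute deviations (hence the same log-likelihood for any fixed $b$), while points outside the interval do worse.

```python
def sum_abs_dev(mu, xs):
    # Sum of |x_i - mu|; maximizing the Laplace likelihood in mu
    # is equivalent to minimizing this quantity.
    return sum(abs(x - mu) for x in xs)

xs = sorted([7.0, 1.0, 4.0, 2.0])      # n = 4
lo, hi = xs[len(xs) // 2 - 1], xs[len(xs) // 2]  # central order statistics: 2.0, 4.0

for mu in [lo, 2.5, 3.0, 3.5, hi]:
    print(mu, sum_abs_dev(mu, xs))     # 8.0 for every mu in [lo, hi]

print(sum_abs_dev(1.5, xs), sum_abs_dev(4.5, xs))  # 9.0 9.0, worse outside
```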

For simplicity, we usually take the sample median $\hat{\mu} = \tilde{x}$ as the estimate (for even $n$, conventionally the midpoint of the two central observations), because it satisfies the condition and is a well-known statistic. It need not be the only answer, though.
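Python's standard library `statistics.median` follows the midpoint convention for even $n$, so it picks one particular point of the interval of maximizers. The same module's `median_low` and `median_high` return the two endpoints, which are equally valid maximum likelihood estimates. A quick check on the same made-up sample:

```python
import statistics

xs = [7.0, 1.0, 4.0, 2.0]
med = statistics.median(xs)            # midpoint of the two central values

# The median balances the sample: as many observations above as below.
above = sum(x > med for x in xs)
below = sum(x < med for x in xs)
print(med, above, below)               # 3.0 2 2

# ...but so does any other point strictly between 2 and 4, e.g. 2.1:
print(sum(x > 2.1 for x in xs), sum(x < 2.1 for x in xs))  # 2 2

# The endpoints of the flat interval are also maximizers.
print(statistics.median_low(xs), statistics.median_high(xs))  # 2.0 4.0
```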

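This is troublesome when you use numerical algorithms. The log-likelihood is flat over a whole interval (and not differentiable at the observations), so an optimizer may stop at an arbitrary point of that interval, or fail to converge, because there is no single answer.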
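As an illustration of the numerical issue, consider a hypothetical bisection routine that searches for a zero of the score $\sum_i \operatorname{sgn}(x_i - \mu)$. It stands in for whatever optimizer one actually uses and is not a library function. With an even-sized sample the score is zero on a whole interval, so the returned point depends on the starting bracket rather than on the data alone.

```python
def sign_score(mu, xs):
    # (# observations above mu) - (# observations below mu)
    return sum((x > mu) - (x < mu) for x in xs)

def bisect_score_zero(xs, lo, hi, tol=1e-9):
    """Bisection on the nonincreasing step function sign_score.

    Returns the first midpoint where the score is exactly 0, or the
    final midpoint once the bracket is narrower than tol.
    """
    while hi - lo > tol:
        mid = (lo + hi) / 2
        s = sign_score(mid, xs)
        if s == 0:
            return mid
        if s > 0:   # more points above mid: the zero set lies to the right
            lo = mid
        else:       # more points below mid: the zero set lies to the left
            hi = mid
    return (lo + hi) / 2

xs = [1.0, 2.0, 4.0, 7.0]
print(bisect_score_zero(xs, 0.0, 8.0))   # 3.0
print(bisect_score_zero(xs, 0.0, 10.0))  # 2.5
print(bisect_score_zero(xs, -4.0, 8.0))  # 3.5
```

All three returned values lie in $[2, 4]$ and maximize the likelihood equally well; only the starting bracket differs.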