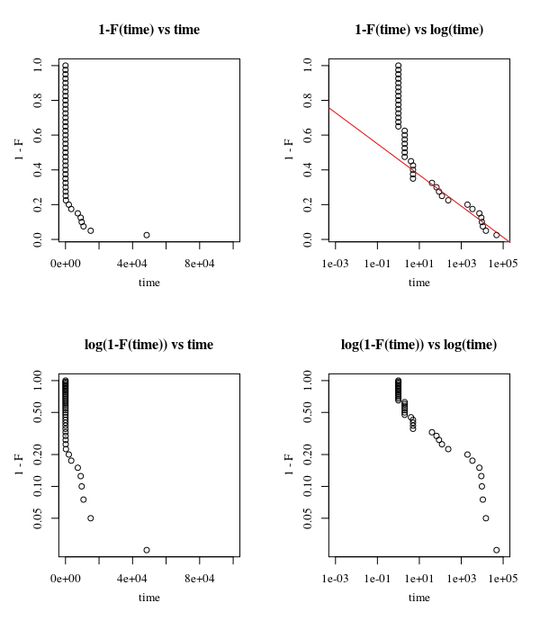

I am trying to model a type of event that happens (once) at an unknown time.

I would like to know: given a certain average event time, what is the probability that the event will happen within a certain time period?

I think this would be similar to a Poisson Distribution, but unlike in the Poisson Distribution, it can only happen once. I am not looking for the number of events but for the time until an event (that only occurs once) occurs.

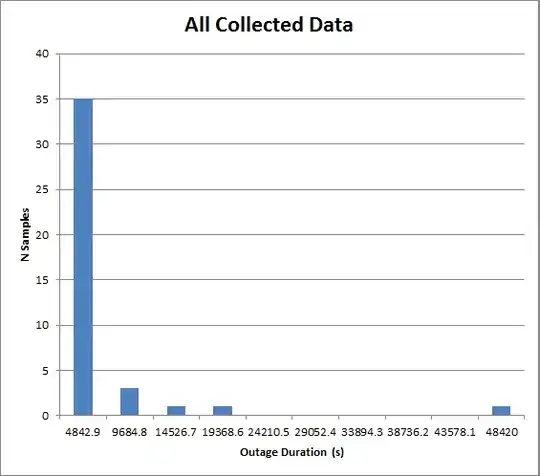

This is being used to model restoration times in an electrical network, and the model will feed into a Monte Carlo simulation. The data is very heavily skewed. A histogram is shown here:

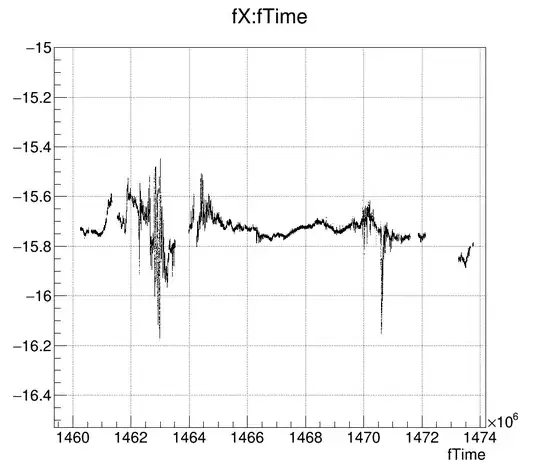

And here is a plot showing data that is shorter than 10% of the longest data point...

Raw data (in seconds):

[5, 1980, 5, 2, 5, 2, 5, 240, 66, 120, 9660, 3420, 10740, 48420, 87, 2, 2, 1, 1, 1, 1, 1, 1, 1, 1, 2, 1, 1, 1, 9065, 40, 1, 1, 1, 2, 1, 4, 15029, 7332, 2]