In this question, I would like to ask two things:

- outlier detection

- normality test

Details are as follows:

I need to detect and remove outliers in my data. Before doing that, I want to test if my data is normally distributed or not. I have two variables X(independent) and Y(dependent) and have 951 records for both of them.

I want to know that while testing the normality, do I need consider both the variables simultaneously or both the variables but one at a time? (Somewhere, I have read that only dependent variable is considered to test the normality).

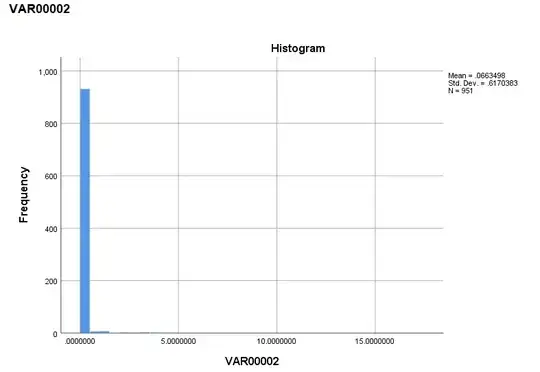

The attached figures show the results of normality test (Analyse>>Descriptive >>Explore) of dependent variable. If normality test is done only on dependent variable, then it shows that the data is highly skewed. In such a case, how can I remove the outliers?

The significance level of Shapiro-Wilk test and Kolmogorov-Smirnov test is 0.00. Skewness has statistic of 22.909 with SE of 0.079.