I seem to be missing some vital piece of information. I am aware that the coefficients of a logistic regression are on the log-odds (logit) scale. Therefore, to interpret them, one takes exp(coef), which yields the odds ratio (OR).

If $\beta_1 = 0.012$, the interpretation is as follows: for a one-unit increase in the covariate $X_1$, the log odds ratio is 0.012, which does not provide meaningful information as it is.

Exponentiation yields that for a one-unit increase in the covariate $X_1$, the odds ratio is 1.012 ($\exp(0.012) \approx 1.012$), which I read as $Y=1$ being 1.012 times more likely than $Y=0$.
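The exponentiation step can be checked numerically. This is only a minimal Python sketch; `beta_1` is the example value from above and the other variable names are my own, illustrative choices:

```python
import math

beta_1 = 0.012  # example coefficient on the logit (log-odds) scale

# Exponentiating the coefficient gives the odds ratio for a one-unit increase.
odds_ratio = math.exp(beta_1)

# The same multiplicative change in the odds, expressed in percent.
percent_change_in_odds = (odds_ratio - 1) * 100

print(round(odds_ratio, 3))
print(round(percent_change_in_odds, 1))
```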

But I would like to express the coefficient as a percentage. According to Gelman and Hill in Data Analysis Using Regression and Multilevel/Hierarchical Models, p. 111:

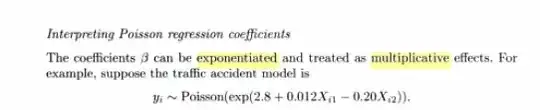

"The coefficients β can be exponentiated and treated as multiplicative effects."

Such that if $\beta_1 = 0.012$, then "the expected multiplicative increase is $\exp(0.012)=1.012$, or a 1.2% positive difference ..."

However, according to my scripts

$$\text{ODDS} = \frac{p}{1-p} $$

and the inverse logit formula states

$$ P=\frac{OR}{1+OR}=\frac{1.012}{2.012}\approx 0.503$$
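To rule out a plain miscalculation on my side, here is a minimal Python sketch of the two conversions. The function names `odds_from_prob` and `prob_from_odds` are hypothetical, chosen only for this illustration. It simply reproduces what I did, namely plugging the odds ratio 1.012 into the inverse formula, and does not claim that this is the right quantity to plug in:

```python
def odds_from_prob(p):
    """Odds corresponding to a probability p, with 0 <= p < 1."""
    return p / (1 - p)


def prob_from_odds(odds):
    """Probability corresponding to odds >= 0 (the inverse of odds_from_prob)."""
    return odds / (1 + odds)


# What I computed: the odds ratio 1.012 inserted where the formula expects odds.
plugged_in = prob_from_odds(1.012)

# Sanity check that the two functions really are inverses of each other
# (0.3 is an arbitrary probability picked for the check).
round_trip = prob_from_odds(odds_from_prob(0.3))

print(round(plugged_in, 3))
print(round(round_trip, 3))
```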

I am tempted to interpret this as: if the covariate increases by one unit, the probability of $Y=1$ increases by 50%. I assume this is wrong, but I do not understand why.

How can logit coefficients be interpreted in terms of probabilities?