We have about 50,000 mobile phone models (e.g. Galaxy S7, iPhone 9) in our database, and the data set contains about 3 million records.

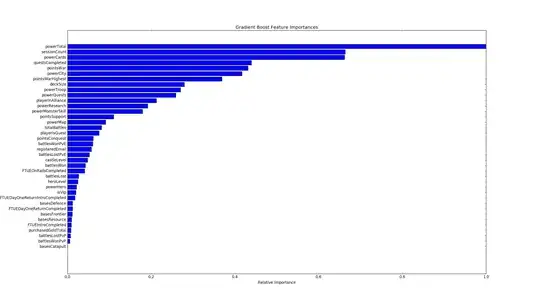

We want to find the mobile phone models with the lowest call success rate (the number of successful calls divided by the total number of calls). Our plan is to run a regression to estimate the impact of the phone model on call success rate, so the model with the smallest coefficient would be the worst. The dependent variable is the call success rate, and the independent variables are the phone model, the number of subscribers, and the type of cell site (cell tower). Because the phone model is nominal, we encode it with dummy variables, which gives 49,999 dummy variables in the model.
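To make the setup concrete, here is a minimal stdlib-only sketch on made-up rows. It assumes each row is one (phone model, successful calls, total calls) record and leaves out the subscriber count and cell-site type, so it covers only the phone-only special case, not the full model. `ROWS`, "Phone Z", and all function names are hypothetical. It shows why 50,000 models give 49,999 dummies (reference coding), how each row's dummies can be stored sparsely, and what the coefficients mean when the phone model is the only regressor.

```python
from collections import defaultdict

# Hypothetical toy rows: (phone_model, successful_calls, total_calls).
# "Phone Z" is a made-up model; the real data also has the number of
# subscribers and the cell-site type, which this sketch leaves out.
ROWS = [
    ("Galaxy S7", 90, 100),
    ("Galaxy S7", 85, 100),
    ("iPhone 9", 70, 100),
    ("iPhone 9", 80, 100),
    ("Phone Z", 95, 100),
]


def success_rate(successful: int, total: int) -> float:
    """Call success rate = successful calls / total calls."""
    return successful / total


def dummy_columns(models):
    """Reference (treatment) coding: K distinct models -> K - 1 dummy columns.

    The first model in sorted order is the reference and gets no column,
    which is why 50,000 models give 49,999 dummies.
    """
    levels = sorted(set(models))
    reference = levels[0]
    columns = {m: i for i, m in enumerate(levels[1:])}
    return reference, columns


def encode_sparse(model, columns):
    """Indices of the dummy columns equal to 1 for one row (at most one).

    Storing only these indices avoids a dense n x (K - 1) matrix,
    roughly 3 million x 49,999 in the question's setting.
    """
    return [columns[model]] if model in columns else []


def phone_only_ols(rows):
    """OLS of success rate on an intercept plus the K - 1 phone dummies.

    With no other regressors the least-squares fit has a closed form:
    the intercept is the reference model's mean rate, and each dummy's
    coefficient is that model's mean rate minus the reference mean.
    """
    sums = defaultdict(float)
    counts = defaultdict(int)
    for model, ok, total in rows:
        sums[model] += success_rate(ok, total)
        counts[model] += 1
    means = {m: sums[m] / counts[m] for m in sums}
    reference, columns = dummy_columns(means)
    intercept = means[reference]
    coefs = {m: means[m] - intercept for m in columns}
    return reference, intercept, coefs


if __name__ == "__main__":
    ref, b0, coefs = phone_only_ols(ROWS)
    # The reference model has an implicit coefficient of 0, so include it
    # when searching for the smallest coefficient.
    all_coefs = {ref: 0.0, **coefs}
    worst = min(all_coefs, key=all_coefs.get)
    print("reference:", ref, "intercept:", round(b0, 3))
    print("coefficients:", {m: round(c, 3) for m, c in coefs.items()})
    print("worst model:", worst)
```

The coefficients are differences from the reference model, so "smallest coefficient" means "lowest rate relative to the reference", and the reference itself has to be included in the comparison. Once the number of subscribers and the cell-site type are added, the coefficients become adjusted differences and the closed form above no longer applies.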

That is far too many dummy variables. What do you suggest?

Is regression a good model for this? Does anyone know of other statistical models that could solve this problem?

I have attached a picture that shows a sample of the data and the regression model.