Here is my model:

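```python
from keras.models import Sequential
from keras.layers import LSTM, Dense

# look_back, trainX and trainY are prepared earlier (not shown)
model = Sequential()
model.add(LSTM(4, input_shape=(look_back, trainX.shape[2])))
model.add(Dense(1))  # single output unit, no activation specified (linear)
model.compile(loss='binary_crossentropy', optimizer='adam', metrics=['accuracy'])
model.fit(trainX, trainY, validation_split=0.3, epochs=100, batch_size=50, verbose=1)
```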

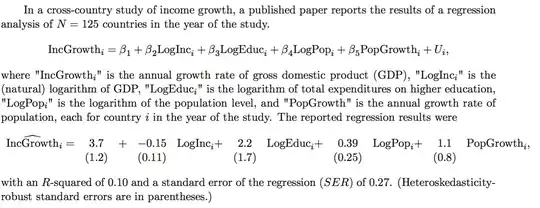

and this is what I get. The training accuracy suddenly falls after some epochs and stays low until the end, while the validation accuracy remains quite good.


What's wrong? Is it underfitting or overfitting? (I guess it is not overfitting, since otherwise the validation score would be low.) How can I prevent this a priori, e.g. by initializing a parameter or something similar? Would reducing the number of epochs be a solution?
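On reducing the number of epochs: instead of hand-picking a smaller fixed value, a common alternative is early stopping, i.e. stop once a monitored metric has not improved for a given number of epochs. Keras ships this as the `keras.callbacks.EarlyStopping` callback (arguments such as `monitor`, `patience` and `restore_best_weights`). The pure-Python sketch below only illustrates the patience logic on a list of per-epoch scores; the function name `early_stopping` and the example curve are hypothetical, and this is not Keras' actual implementation.

```python
from typing import List, Tuple


def early_stopping(scores: List[float], patience: int = 5) -> Tuple[int, int]:
    """Patience-based early stopping on a per-epoch score where higher is better.

    Returns (best_epoch, stop_epoch), both 0-indexed. Training stops at the
    epoch where the number of consecutive epochs without a strict improvement
    over the best score so far reaches `patience`.
    """
    best_epoch = 0
    best_score = float("-inf")
    wait = 0  # consecutive epochs without improvement
    for epoch, score in enumerate(scores):
        if score > best_score:
            best_score = score
            best_epoch = epoch
            wait = 0
        else:
            wait += 1
            if wait >= patience:
                return best_epoch, epoch
    # Ran out of epochs without triggering the stop condition.
    return best_epoch, len(scores) - 1


# Hypothetical accuracy curve that rises, then collapses (made-up numbers).
curve = [0.55, 0.70, 0.85, 0.93, 0.92, 0.60, 0.52, 0.51, 0.50, 0.50]
best, stop = early_stopping(curve, patience=3)
print(f"best epoch: {best}, stopped at epoch: {stop}")
```

With `restore_best_weights=True`, the Keras callback can similarly roll the model back to the weights from the best epoch rather than keeping the last ones.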

What is quite strange is that the training accuracy first grows and reaches a very high value, then suddenly falls for no apparent reason.
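One detail of the model that may be worth checking: `Dense(1)` has no activation, so the network outputs an unbounded linear value, whereas `binary_crossentropy` (with its default `from_logits=False`) expects a probability in [0, 1]. Keras clips such predictions to [epsilon, 1 - epsilon] before taking the log (epsilon defaults to 1e-7), so once an output drifts outside [0, 1] its loss gradient becomes zero, while the `accuracy` metric still thresholds the raw output at 0.5. The pure-Python sketch below only mimics that clipping (it is not Keras code; `EPS`, `clipped_bce` and `grad_wrt_z` are hypothetical names) and compares the gradient with respect to the raw output `z` for a linear output and for a sigmoid output. Whether this is what causes the collapse in the plot is an assumption the sketch does not prove; the usual setup for this loss would be `Dense(1, activation='sigmoid')`.

```python
import math

EPS = 1e-7  # assumed clipping constant, mirroring Keras' default backend epsilon


def clipped_bce(y_true: float, p: float) -> float:
    """Binary cross-entropy on a prediction clipped to [EPS, 1 - EPS]."""
    p = min(max(p, EPS), 1.0 - EPS)
    return -(y_true * math.log(p) + (1.0 - y_true) * math.log(1.0 - p))


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def grad_wrt_z(loss_of_z, z: float, h: float = 1e-4) -> float:
    """Central finite-difference derivative of the loss w.r.t. the raw output z."""
    return (loss_of_z(z + h) - loss_of_z(z - h)) / (2.0 * h)


y_true = 0.0  # the true label is 0
z = 1.5       # raw output of the last Dense unit, already outside [0, 1]

# Linear output (Dense(1) with no activation): the prediction is z itself,
# which gets clipped, so nearby values of z give exactly the same loss.
linear_grad = grad_wrt_z(lambda v: clipped_bce(y_true, v), z)

# Sigmoid output (Dense(1, activation='sigmoid')): the prediction is sigmoid(z).
sigmoid_grad = grad_wrt_z(lambda v: clipped_bce(y_true, sigmoid(v)), z)

print(f"linear output:  loss={clipped_bce(y_true, z):.2f}, grad={linear_grad:.4f}")
print(f"sigmoid output: loss={clipped_bce(y_true, sigmoid(z)):.2f}, grad={sigmoid_grad:.4f}")
```

Under these assumptions the sigmoid version keeps a non-zero gradient that pushes `z` down for this sample, while the clipped linear version gives the optimizer no signal at all for it, even though the loss is large.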

Thanks for your help.