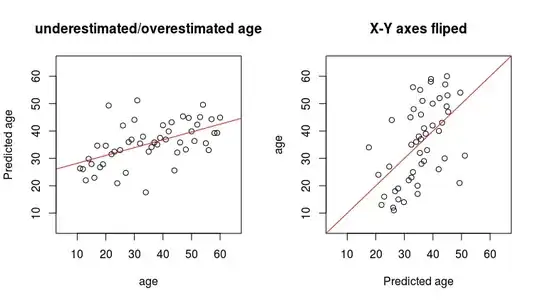

I am trying to predict age from a couple of variables using linear regression, but when I plot predicted age against real age, I can see that small values are significantly overestimated and big values are underestimated. If I flip the axes, so my predicted values are on the X axis, the regression line is as straight as it can be.

set.seed(1)

n = 50

age <- 1:n+10

variable1 <- age + 100 + rnorm(n, 0, 45)

variable2 <- age + 100 + rnorm(n, 0, 45)

variable3 <- age + 100 + rnorm(n, 0, 45)

variable4 <- age + 100 + rnorm(n, 0, 45)

data <- as.data.frame(cbind(age, variable1,

variable2, variable3, variable4))

fit_all <- lm(age ~ ., data=data)

predictions_all <- predict(fit_all, data)

par(mfrow=c(1,2))

plot(predictions_all ~ age, ylab='Predicted age', xlim=c(5,65), ylim=c(5,65), main='underestimated/overestimated age')

abline(coef(lm(predictions_all ~ age)), col='red')

plot(age ~ predictions_all, xlab='Predicted age', xlim=c(5,65), ylim=c(5,65), main='X-Y axes fliped')

abline(coef(lm(age ~ predictions_all)), col='red')

I think I understand what is going on in case of 1 variable, an average 10-year-old sample will have a value of a variable5 around 117, however an average sample with a variable5 of 117 will be around 20 years old, hence the bias.

I still can't get my head around this situation in case of multiple variables and what to do about it. There is a similar question here Why are the residuals in this model so linearly skewed? where the answer is basically don't worry about it and plot residuals instead, however that is not solving my problem of a systematic bias of my predictions, which is what I care about.