I was recently looking for ways to resample time series, in ways that

- Approximately preserve the auto-correlation of long memory processes.

- Preserve the domain of the observations (for instance a resampled times series of integers is still a times series of integers).

- May affect some scales only, if required.

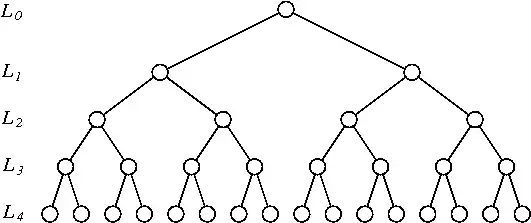

I came up with the following permutation scheme for a time series of length $2^N$:

- Bin the time series by pairs of consecutive observations (there are $2^{N-1}$ such bins). Flip each of them (i.e. index from

1:2to2:1) independently with probability $1/2$. - Bin the obtained time series by consecutive $4$ observations (thre are $2^{N-2}$ such bins). Reverse each of them (i.e. index from

1:2:3:4to4:3:2:1) independelty with probability $1/2$. - Repeat the procedure with bins of size $8$, $16$, ..., $2^{N-1}$ always reversing the bins with probability $1/2$.

This design was purely empirical and I am looking for work that would have already been published on this kind of permutation. I am also open to suggestions for other permutations or resampling schemes.