I built a neural network for binary classification of medical images. I have measured its test accuracy (x%). Is there a statistical method for calculating a threshold accuracy (y%), so that I can argue the network's classification accuracy is acceptable whenever x% > y%?
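To make the question concrete, here is a rough sketch of the kind of check I have in mind, assuming the threshold y is already given (for example by a clinical requirement) and that the test images are independent. It is a one-sided exact binomial test of whether the true accuracy exceeds y. The function name `binomial_p_value` and the counts in the usage lines are placeholders of mine, not real results.

```python
from math import exp, lgamma, log


def binomial_p_value(n_correct: int, n_total: int, threshold: float) -> float:
    """One-sided exact binomial test.

    Returns P(X >= n_correct) for X ~ Binomial(n_total, threshold), i.e. the
    probability of seeing at least this many correct predictions if the true
    accuracy were exactly `threshold`. Assumes 0 < threshold < 1.
    """
    log_p = log(threshold)
    log_q = log(1.0 - threshold)
    total = 0.0
    for k in range(n_correct, n_total + 1):
        # log of C(n_total, k) * threshold^k * (1 - threshold)^(n_total - k),
        # computed in log space to avoid overflow for large test sets
        log_pmf = (
            lgamma(n_total + 1) - lgamma(k + 1) - lgamma(n_total - k + 1)
            + k * log_p + (n_total - k) * log_q
        )
        total += exp(log_pmf)
    return min(total, 1.0)


# Placeholder numbers: 190 correct out of 200 test images, threshold y = 85%.
p_value = binomial_p_value(190, 200, 0.85)
print(f"p-value = {p_value:.4g}")
```

A small p-value would suggest the true accuracy is above y. What this does not tell me is how to choose y in the first place, which is the part I am asking about.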

Since this is binary classification, the sources I found through Google say that anything above 50% accuracy is acceptable. But I am working with medical images and need higher accuracy from my model. Can I calculate a threshold value based on the properties of the images in my two classes?

UPDATE

I'll state the complete problem here.

I need to classify several datasets (say n datasets) using m classifiers, where n > m.

About the datasets: each dataset has two folders of medical images, one with images that can be used and the other with images that cannot be used. So my classifier is a binary classifier that identifies whether a given image can be used or not.

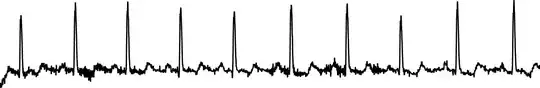

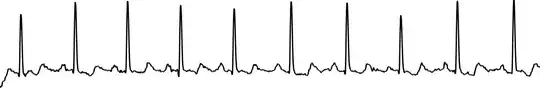

Likewise, I have n datasets whose data are similar but not identical. Example: ECG data from 3 leads (3 classes: lead I is similar to lead II, but not identical).

I applied my classifier to other datasets (which were not used to train it) to evaluate how well it classifies them. For some datasets I got accuracy above 90%, and for others below 80%. So I wondered whether there is a method to calculate a threshold value for the classifier, so that I can show that when the classification accuracy on a given dataset is below the threshold, a separate classifier needs to be trained for that dataset alone. This is what I want.
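As an illustration of the decision rule I am describing, here is a minimal sketch that puts a Wilson score confidence interval around each dataset's accuracy and flags the datasets whose lower bound falls below the threshold. It assumes the threshold is already known and that the images within a dataset are independent. The names `wilson_interval` and `needs_own_classifier`, the dataset labels, and all counts are hypothetical placeholders, not my actual results.

```python
from math import sqrt
from statistics import NormalDist


def wilson_interval(n_correct: int, n_total: int, confidence: float = 0.95):
    """Wilson score interval for an accuracy estimated from n_total images."""
    z = NormalDist().inv_cdf(0.5 + confidence / 2.0)
    p_hat = n_correct / n_total
    denom = 1.0 + z * z / n_total
    center = (p_hat + z * z / (2.0 * n_total)) / denom
    half_width = (
        z * sqrt(p_hat * (1.0 - p_hat) / n_total + z * z / (4.0 * n_total ** 2))
        / denom
    )
    return center - half_width, center + half_width


def needs_own_classifier(results: dict, threshold: float) -> list:
    """Return the datasets whose accuracy cannot be shown to meet `threshold`.

    `results` maps a dataset name to (n_correct, n_total). A dataset is
    flagged when the lower end of its Wilson interval is below the threshold.
    """
    flagged = []
    for name, (n_correct, n_total) in results.items():
        lower, _ = wilson_interval(n_correct, n_total)
        if lower < threshold:
            flagged.append(name)
    return flagged


# Hypothetical placeholder counts, for illustration only.
results = {"dataset_A": (95, 100), "dataset_B": (75, 100)}
print(needs_own_classifier(results, threshold=0.85))
```

Using the lower bound instead of the raw accuracy makes the rule more cautious for small datasets, where the measured accuracy is less reliable. The open part of my question is still how to justify the threshold itself.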