The figure below illustrates the Fully Convolutional Network (FCN) from the paper "Fully Convolutional Networks for Semantic Segmentation".

The upsampling layer at the end confuses me: I cannot understand how it learns. I know how it works technically, and I understand the "deconvolution / transposed convolution / whatever you want to call it" operation, so I get how you can produce a bigger image from a small one.

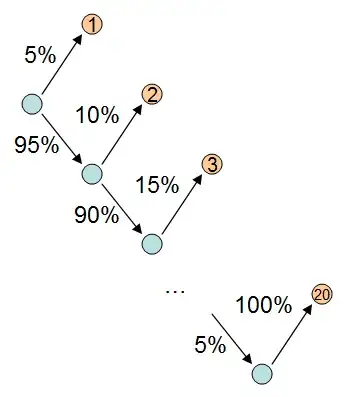

But how does this "deconv layer" learn to upsample images like that, to get the bigger image? The input it learns from (the block marked "21" in the image) can be something like a 4*4*21 input (the dimensions are not necessarily correct here, but in SegNet, for example, 4*4 is what you can end up with after all the convolutions and before the deconvolutions).
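
To make the operation I am talking about concrete, here is a minimal single-channel sketch in plain Python. The function names `transposed_conv2d` and `bilinear_kernel`, the stride of 2, and the 4*4 kernel are my own illustrative assumptions, not values taken from the paper. The bilinear weights are hand-picked just to have something to run; a trained layer would hold whatever weights backpropagation gives it.

```python
def transposed_conv2d(x, kernel, stride):
    """Single-channel transposed convolution (no padding) on nested lists.

    Each input value "stamps" a scaled copy of the kernel onto the output,
    and overlapping stamps are summed.
    """
    h, w = len(x), len(x[0])
    k = len(kernel)
    out_h = (h - 1) * stride + k
    out_w = (w - 1) * stride + k
    out = [[0.0] * out_w for _ in range(out_h)]
    for i in range(h):
        for j in range(w):
            for a in range(k):
                for b in range(k):
                    out[i * stride + a][j * stride + b] += x[i][j] * kernel[a][b]
    return out


def bilinear_kernel(k):
    """Hand-picked bilinear interpolation weights for a k*k kernel (assumption)."""
    factor = (k + 1) // 2
    center = factor - 1 if k % 2 == 1 else factor - 0.5
    return [
        [(1 - abs(a - center) / factor) * (1 - abs(b - center) / factor)
         for b in range(k)]
        for a in range(k)
    ]


# One 4*4 channel (the real input would have 21 of these).
x = [[1.0] * 4 for _ in range(4)]
out = transposed_conv2d(x, bilinear_kernel(4), stride=2)
print(len(out), len(out[0]))  # 10 10
```

So the shapes work out: with these assumed settings a 4*4 map becomes 10*10, and a larger stride and kernel would grow it further. What I do not see is where the extra detail comes from.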

How do you grow from a 4*4 input to the full-size output shown? I feel like too much information was lost in the downsampling process. What weights can you learn in the upsampling layer that are able to "create" so much information from that 4*4 input?